Introduction

Integrating advanced agentic AI into established enterprise environments is one of the most consequential technical and strategic initiatives for organizations in 2025. The goal is to unlock automation, decision intelligence, and process acceleration while preserving the reliability and security of existing on-premises systems and databases. This post outlines a practical, phased approach to agentic AI integration with legacy systems, covering middleware choices, data architecture, security controls, and a Q3 migration timeline that minimizes downtime.

We focus on actionable guidance for engineering leaders, architects, and program managers who need to balance innovation with operational continuity. You will find a clear migration sequence, middleware patterns that reduce risk, and testing and rollback strategies that keep business services available. We address real-world constraints such as limited maintenance windows, compliance boundaries, and complex data formats so you can plan an incremental yet decisive path to production. The approach described here treats agentic AI integration with legacy systems as a program of change rather than a single project, enabling repeatable outcomes and measurable value delivery.

Why agentic AI integration with legacy systems matters now

Agentic AI brings autonomous agents that can orchestrate tasks, make decisions, and interact with systems and people across enterprise boundaries. When these agents are connected to legacy systems they can improve throughput, reduce manual work, and surface insights from previously siloed data. Yet legacy systems were rarely designed for real-time programmatic interaction. They are often on-premises, use proprietary protocols, and enforce rigid schemas that complicate direct integration. A deliberate integration strategy is therefore required to avoid degrading critical services and to preserve data integrity during the transition.

Organizations need to treat agentic AI integration with legacy systems as both a technical challenge and an organizational change exercise. The integration succeeds when agents augment human workflows without disrupting service level agreements. That requires careful prioritization of use cases, a layered architecture that isolates risk, and strong observability into both the legacy and new agentic layers. Done well, this kind of integration can shorten task cycle times, improve decision quality through real-time context, and raise user satisfaction by reducing friction in workflows.

Key business benefits

Operational acceleration through automation of repetitive tasks and orchestration across systems.

Improved data utilization when agentic AI can read, reconcile, and act on data in legacy stores.

Risk reduction by embedding validation and business rules in middleware rather than changing critical systems directly.

Faster innovation because agents can be iterated independently and layered on top of the legacy stack.

Successfully delivering these benefits depends on a repeatable integration pattern that minimizes change to legacy environments while enabling agentic AI systems to perform securely and reliably. Below we walk through the core integration approaches and middleware options that make that pattern practical.

Integration approaches and middleware options

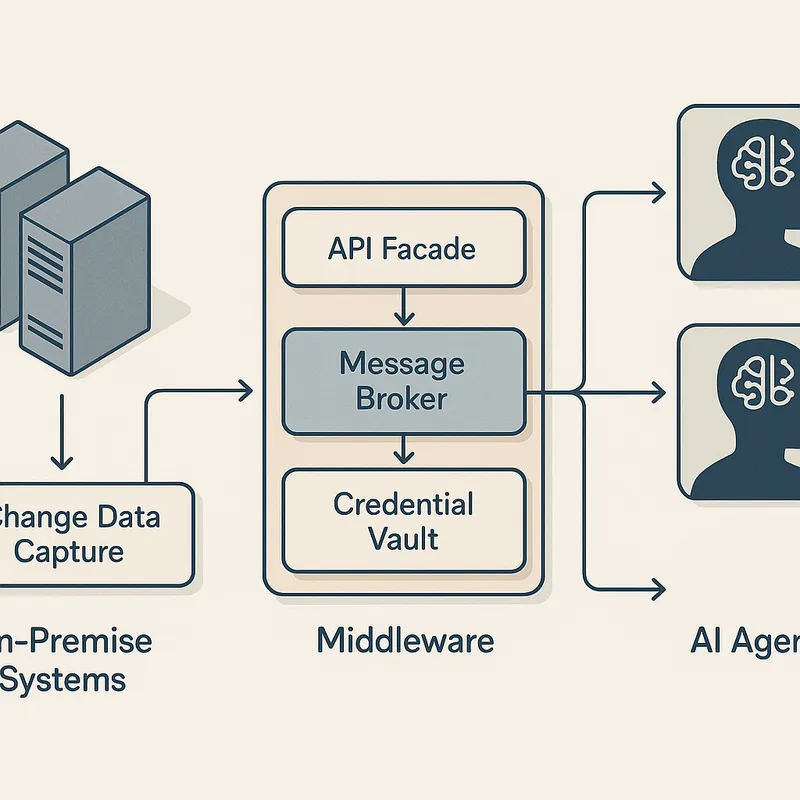

There are three primary approaches for connecting agentic AI to legacy databases and on-premises systems: non-invasive middleware, API medallion or facade patterns, and hybrid connectors with controlled direct access. Each has trade-offs in latency, development effort, and operational risk. Choosing the right pattern depends on the urgency of the use case, compliance constraints, and the maturity of existing IT processes. Across all patterns, middleware acts as the buffer that enables agentic AI integration with legacy systems without introducing instability.

Non-invasive middleware is often the safest first step. This approach uses adapters that poll or receive events from legacy systems, transform data to canonical structures, and expose secure interfaces to agentic layers. Because it avoids altering the legacy codebase, it minimizes the chance of breaking production. Non-invasive middleware is ideal for agentic AI pilots that need contextual data but cannot tolerate any interruption to business processes.
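
As a rough illustration of the adapter idea, the sketch below polls a legacy source and translates each row into a canonical record. The column names (`ORD_NO`, `CUST_NO`, `AMT`), the `CanonicalOrder` shape, and the `fetch_rows` callable are all hypothetical stand-ins for whatever the real legacy system exposes.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class CanonicalOrder:
    order_id: str
    customer_id: str
    amount_cents: int
    source_system: str
    fetched_at: str  # ISO 8601 time at which the adapter read the row


def to_canonical(row: dict, source_system: str, fetched_at: datetime) -> CanonicalOrder:
    # ORD_NO, CUST_NO and AMT are hypothetical legacy column names.
    return CanonicalOrder(
        order_id=str(row["ORD_NO"]).strip(),
        customer_id=str(row["CUST_NO"]).strip(),
        # Decimal avoids float rounding; amounts are assumed to have two decimals.
        amount_cents=int(Decimal(str(row["AMT"])) * 100),
        source_system=source_system,
        fetched_at=fetched_at.isoformat(),
    )


def poll_once(
    fetch_rows: Callable[[], Iterable[dict]],
    source_system: str,
    now: Optional[datetime] = None,
) -> list[CanonicalOrder]:
    # fetch_rows only reads from the legacy system; nothing is written back.
    now = now or datetime.now(timezone.utc)
    return [to_canonical(row, source_system, now) for row in fetch_rows()]
```

Passing `fetch_rows` as a callable keeps the legacy access code outside the adapter logic, so the translation can be tested with plain dictionaries before it ever touches production.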

The API medallion or facade pattern wraps legacy functionality with a controlled API layer. This pattern standardizes interaction and enforces contracts, enabling agents to call well-defined endpoints. A facade reduces the mental overhead for agent design because it presents consistent semantics across disparate systems. It also enables throttling, authentication, and schema evolution without touching the underlying system. Implement facades when teams can coordinate limited changes or when a gateway provides protocol translation on behalf of the legacy system.
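
A minimal sketch of a facade, assuming a hypothetical legacy lookup function that returns a `QTY` field: the facade validates the request, applies a very simple minimum-interval throttle, and returns a stable response shape. Real gateways usually provide richer rate limiting and authentication; this only shows where those concerns sit.

```python
import time


class ContractError(ValueError):
    """Raised when a caller violates the facade's request contract."""


class ThrottledError(RuntimeError):
    """Raised when calls arrive faster than the configured rate."""


class InventoryFacade:
    """Hypothetical facade: one stable method in front of a legacy lookup call."""

    def __init__(self, legacy_lookup, max_calls_per_second: float, clock=time.monotonic):
        self._lookup = legacy_lookup
        self._min_interval = 1.0 / max_calls_per_second
        self._clock = clock
        self._last_call = None

    def get_stock(self, sku: str) -> dict:
        # Enforce the contract before the legacy system sees the request.
        if not isinstance(sku, str) or not sku.strip():
            raise ContractError("sku must be a non-empty string")
        now = self._clock()
        if self._last_call is not None and now - self._last_call < self._min_interval:
            raise ThrottledError("too many calls; retry later")
        self._last_call = now
        normalized = sku.strip().upper()
        raw = self._lookup(normalized)  # QTY is an assumed legacy field name
        return {"sku": normalized, "on_hand": int(raw["QTY"])}
```

Agents only ever see `get_stock` and its response shape, so the legacy field names can change behind the facade without touching agent code.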

Hybrid connectors and secure direct access

Hybrid connectors provide direct but gated access to legacy systems where read or write latency is critical. This approach uses secure tunnels, credential vaulting, and immutable transaction logging to satisfy audit and compliance requirements. Hybrid connectors are appropriate when agentic AI requires low-latency reads or must execute near-real-time operations such as order routing or inventory adjustments. Implement these connectors with strong circuit breakers and fallbacks so that the legacy system remains stable if the agentic layer fails or misbehaves.
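
The circuit breaker and fallback mentioned above can be sketched as follows. This is a simplified illustration, not a production implementation: the thresholds, the `CircuitBreaker` class, and the injected `clock` are assumptions made for the example, and real deployments often rely on an established resilience library instead.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and no fallback was supplied."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, fallback=None, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                # Open: do not touch the legacy system at all.
                if fallback is not None:
                    return fallback()
                raise CircuitOpenError("legacy connector circuit is open")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            if fallback is not None:
                return fallback()
            raise
        self._failures = 0  # any success closes the circuit fully
        return result
```

While the circuit is open, calls return the fallback (for example a cached read) without reaching the legacy endpoint, which is what protects the legacy system from a misbehaving agent layer.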

Middleware selection is a decisive factor for minimizing downtime during migration. Consider using message brokers, change data capture platforms, and lightweight orchestration layers to decouple agents from legacy endpoints. Design middleware to be observable, idempotent, and testable so you can incrementally validate behavior. Consistent interface contracts and a robust schema registry will help maintain compatibility as both agentic and legacy components evolve.
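
To make the idempotency requirement concrete, here is a minimal sketch of a message handler that applies each message at most once, keyed by a message identifier. The `message_id` and `payload` field names are assumptions, and the in-memory dictionary stands in for the durable store a real middleware layer would need.

```python
class IdempotentHandler:
    """Applies each message at most once, keyed by its message_id."""

    def __init__(self, apply_fn):
        self._apply = apply_fn
        # In-memory for illustration only; redelivery after a restart
        # would require a durable store shared by all consumers.
        self._results = {}

    def handle(self, message: dict):
        key = message["message_id"]  # assumed to be unique per logical operation
        if key in self._results:
            # Duplicate delivery: return the recorded result, do not re-apply.
            return self._results[key]
        result = self._apply(message["payload"])
        self._results[key] = result
        return result
```

With this in place, a broker that redelivers a message after a timeout cannot cause the same inventory adjustment or order update to be applied twice.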

Data architecture, mapping, and security for safe connection

Agentic AI is only as useful as the data it can reach. Connecting agents to legacy databases requires a thoughtfully designed data architecture that ensures data quality, lineage, and privacy. Before any live agent has write capability, build a read-only layer that supplies sanitized context, derived features, and precomputed summaries. This pattern reduces the surface area for accidental writes and provides a controlled way to validate agent decisions against historical outcomes.

Data mapping is critical when different systems use conflicting identifiers and inconsistent taxonomies. Use canonical data models to normalize formats and to centralize reconciliation logic in middleware. Implement transformation pipelines that preserve original timestamps and provenance metadata so that any decision by an agent can be traced to the original records. This provenance supports auditability and enables post-event analysis when something unusual happens.
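
One way to picture this mapping step is sketched below, under invented assumptions: two source systems (`crm` and `billing`) that identify the same customer differently, a small identifier crosswalk, and a status taxonomy map. The source key and timestamp are carried through unchanged as provenance.

```python
from dataclasses import dataclass

# Hypothetical crosswalk and taxonomy tables; in practice these would be
# maintained in the middleware layer, not hard-coded.
ID_CROSSWALK = {("crm", "C-0042"): "cust-42", ("billing", "000042"): "cust-42"}
STATUS_MAP = {"A": "active", "ACTV": "active", "I": "inactive", "CLSD": "inactive"}


@dataclass(frozen=True)
class CanonicalCustomer:
    customer_id: str
    status: str
    # Provenance: enough to trace back to the original record.
    source_system: str
    source_key: str
    source_timestamp: str  # kept exactly as the source system reported it


def normalize(source_system: str, record: dict) -> CanonicalCustomer:
    source_key = record["id"]
    try:
        customer_id = ID_CROSSWALK[(source_system, source_key)]
    except KeyError:
        # Fail loudly rather than guess an identity for an unmapped record.
        raise LookupError(f"no canonical id for {source_system}:{source_key}") from None
    return CanonicalCustomer(
        customer_id=customer_id,
        status=STATUS_MAP[record["status"].strip().upper()],
        source_system=source_system,
        source_key=source_key,
        source_timestamp=record["updated"],
    )
```

Because the original key and timestamp travel with the canonical record, an agent decision based on `cust-42` can still be traced to the exact CRM or billing row it came from.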

Security is non-negotiable. Agentic AI integration with legacy systems must follow least-privilege principles, enforce role-based access controls, and use secure credential management systems. Network segmentation will often be required for on-premises systems. Apply strict ingress and egress controls and use data masking or tokenization for sensitive attributes. Where regulations require, ensure that data stays within geographic boundaries and that encryption keys are managed with an auditable key lifecycle.
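
As a small illustration of masking sensitive attributes before they reach an agent, the sketch below replaces selected fields with keyed HMAC-SHA256 digests. Note that this is keyed pseudonymization rather than reversible, vault-backed tokenization; the field names and the inline key are placeholders, and a real key would come from a managed secret store.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes) -> str:
    # Keyed digest: the same input and key always give the same token,
    # so joins still work, but the raw value is not exposed.
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, sensitive_fields: set, key: bytes) -> dict:
    # Returns a new dict; the original record is left untouched.
    return {
        name: tokenize(str(value), key) if name in sensitive_fields else value
        for name, value in record.items()
    }
```

For example, `mask_record({"customer_id": "c1", "ssn": "123-45-6789"}, {"ssn"}, key)` keeps `customer_id` readable and replaces `ssn` with a 64-character hex token.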

Operational controls and monitoring

Operational readiness means deploying observability across the entire data path. Implement metrics for latency, error rates, and throughput between agents and legacy endpoints. Create synthetic transactions that mirror critical workflows so you detect regressions before they affect customers. For write actions initiated by agents, require a staged approval or canary rollout that escalates to automatic remediation if thresholds are exceeded. These controls reduce the risk that agentic AI integration with legacy systems will produce disruptive outcomes.
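
The threshold check behind such a remediation trigger could look roughly like this. The window size, error-rate limit, and minimum sample count are illustrative values, and `ErrorRateGuard` is a hypothetical helper rather than part of any monitoring product.

```python
from collections import deque


class ErrorRateGuard:
    """Tracks recent agent write outcomes and signals when to halt."""

    def __init__(self, window=100, max_error_rate=0.05, min_samples=20):
        self._outcomes = deque(maxlen=window)  # True = success, False = failure
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples

    def record(self, ok: bool) -> None:
        self._outcomes.append(ok)

    def should_halt(self) -> bool:
        total = len(self._outcomes)
        if total < self.min_samples:
            return False  # not enough evidence to act on yet
        errors = total - sum(self._outcomes)
        return errors / total > self.max_error_rate
```

A caller would record the outcome of every agent-initiated write and disable the agent's write path as soon as `should_halt()` returns `True`.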

Another useful control is a decision sandbox. Route agent decisions to a shadow environment where the decision is scored against expected outcomes without changing production data. Shadowing enables teams to assess agent accuracy and impact, to tune models and rules, and to build confidence for live action. Use the results from shadow deployments to refine mapping logic, retry strategies, and compensating transactions.
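
A shadow comparison can be as simple as the sketch below, which scores agent decisions against the decisions the existing process actually made for the same cases. The dictionary-of-decisions shape and the function name are assumptions for illustration.

```python
def compare_shadow(agent_decisions: dict, baseline_decisions: dict) -> dict:
    """Compare shadow decisions with baseline decisions, keyed by case id."""
    shared = sorted(agent_decisions.keys() & baseline_decisions.keys())
    mismatches = [k for k in shared if agent_decisions[k] != baseline_decisions[k]]
    agreement = None  # undefined when there is nothing to compare
    if shared:
        agreement = (len(shared) - len(mismatches)) / len(shared)
    return {
        "compared": len(shared),
        "agreement": agreement,
        "mismatches": mismatches,
        # Cases the baseline handled but the agent never produced a decision for.
        "missing_from_agent": sorted(baseline_decisions.keys() - agent_decisions.keys()),
    }
```

The mismatch list is usually more valuable than the headline agreement rate, because each entry is a concrete case to review when tuning mapping logic or rules.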

Q3 migration timeline that minimizes downtime

Planning a migration in Q3 requires aligning stakeholders, securing maintenance windows, and defining clear rollback criteria. The timeline below covers a typical 12-week Q3 migration window that can be compressed or extended depending on system complexity. The schedule emphasizes incremental validation and time-boxed canary releases so that downtime is minimized and a tested recovery path exists at every stage.

Weeks 1 to 2: Preparation and discovery. Inventory systems, data stores, and interfaces. Identify critical paths and define success metrics. Create a separation plan that explicitly lists what will change in the legacy environment and what will remain untouched. Assign owners for each integration touchpoint and establish communication channels for incident escalation.

Weeks 3 to 4: Middleware deployment and interface stabilization. Deploy non-invasive middleware in parallel with existing systems. Implement adapters for each legacy endpoint and validate schema translations using production-like data sets. Configure security, logging, and throttling. Run automated contract tests so that the middleware consistently produces the expected outputs and handles malformed messages gracefully.

Weeks 5 to 8: Agentic AI shadowing and iterative tuning. Deploy agentic AI in shadow mode where agents receive real inputs and produce outputs that are logged and compared against accepted baselines. This phase allows teams to measure performance, identify edge cases, and refine orchestration logic. Use canary analysis to compare derived business metrics. If discrepancies exceed predefined thresholds, tune models and mapping logic before proceeding to limited live actions. Maintain a rollback path that can halt agent write capabilities instantly.

Weeks 9 to 10: Controlled live pilot with limited scope. Shift a small portion of traffic to agentic decisioning while maintaining human oversight or an approval gate. Monitor system metrics and business key performance indicators closely. Implement compensating transactions so that if a live action is later found to be problematic it can be reversed reliably. Have a hot rollback plan that disables the agent channel and routes traffic back to the legacy process without data loss.
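
One possible way to shift a small, stable portion of traffic and keep a hot rollback switch is sketched here. The hashing scheme, the `PilotRouter` class, and the `agent_enabled` flag are illustrative assumptions, not a prescribed design.

```python
import hashlib


def bucket(key: str) -> int:
    # Stable bucket in [0, 100): the same key always lands in the same bucket.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


class PilotRouter:
    def __init__(self, percent: int):
        self.percent = percent      # share of traffic eligible for the agent path
        self.agent_enabled = True   # hot rollback switch

    def route(self, key: str) -> str:
        if self.agent_enabled and bucket(key) < self.percent:
            return "agent"
        return "legacy"
```

Hashing on a stable key such as an account identifier keeps each account on one path for the duration of the pilot, and setting `agent_enabled = False` sends everything back to the legacy process immediately.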

Weeks 11 to 12: Gradual ramp and production handover. If pilots meet success criteria, expand agent action scope in measured increments. Continue monitoring and conduct a formal post-launch review. Capture lessons learned and update runbooks so operations can maintain the integrated estate. Decommission any temporary adapters only after confirmed stability. This phased approach preserves availability while enabling agentic AI integration with legacy systems to deliver measurable value.

Testing, validation, and rollback strategies

Testing is the foundation for reducing risk during agentic AI integration with legacy systems. Start with unit and contract tests for each adapter and facade. Contract tests check that middleware keeps producing the agreed output shape regardless of upstream changes. Integration tests should use anonymized production data when possible so that mappings and transformations are evaluated against real patterns. End-to-end tests must include the agentic decision logic, middleware, and legacy endpoints to validate full flow behavior.
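
A contract test for an adapter can start from a check like the one below, which compares a payload against an expected set of fields and types. The `ORDER_CONTRACT` fields are invented for the example; a schema registry or a schema validation library would normally hold the real definitions.

```python
# Hypothetical contract: field name -> required Python type.
ORDER_CONTRACT = {"order_id": str, "amount_cents": int, "currency": str}


def contract_violations(payload: dict, contract: dict = ORDER_CONTRACT) -> list[str]:
    """Return a list of human-readable violations; empty means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif type(payload[field]) is not expected_type:
            actual = type(payload[field]).__name__
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, got {actual}"
            )
    for field in payload:
        if field not in contract:
            problems.append(f"unexpected field: {field}")
    return problems
```

Running this check in the test suite for every adapter output makes silent shape changes, such as an identifier switching from string to integer, fail fast instead of surfacing later inside agent logic.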

Validation often requires multiple environments. Use a production mirror for high fidelity testing and a lower fidelity environment for rapid iteration. Important tests include idempotence checks for write operations, concurrency stress tests for peak load, and failure mode injection where network partitions or authentication errors are simulated. Observability during tests is key so that root cause analysis is possible when outcomes diverge from expectations.

Rollback strategies should be planned and rehearsed. For read-only integrations, rollback may be trivial. For writes, build compensating transaction mechanisms that are deterministic, atomic where possible, and auditable. Maintain a timeline of record versions and provenance to support safe backout. In addition to automated rollback, maintain an operational war room staffed with engineers who can execute manual scripts when unforeseen scenarios occur. Clear decision authorities and escalation paths reduce response time during incidents.
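
The compensating-transaction idea can be sketched as a log that pairs every applied action with its undo step and replays the undo steps in reverse order. `CompensationLog` and its method names are hypothetical, and the sketch assumes each compensation succeeds; real systems also need to handle a failing compensation.

```python
class CompensationLog:
    """Records each applied action with its undo step for later rollback."""

    def __init__(self):
        self._undo_stack = []
        self.audit = []  # ordered trail of what was applied and compensated

    def run(self, name, action, compensate):
        result = action()
        # Register the undo step only after the action has succeeded.
        self._undo_stack.append((name, compensate))
        self.audit.append(("applied", name))
        return result

    def rollback(self):
        # Undo in reverse order so later steps are reversed before earlier ones.
        while self._undo_stack:
            name, compensate = self._undo_stack.pop()
            compensate()
            self.audit.append(("compensated", name))
```

The audit trail produced here is the kind of record that makes a backout explainable afterwards: it shows which agent actions were applied and which were reversed, in order.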

Operational readiness checklist

Contract and integration tests passed across environments

Shadowing metrics meet acceptance criteria for accuracy and latency

Security audits completed and access controls validated

Compensating transactions and rollbacks implemented and tested

Runbooks and contact lists prepared for rapid incident response

Governance, auditing, and performance monitoring

Governance ensures that agentic AI integration with legacy systems is compliant, transparent, and aligned with business policy. Establish an integration governance board that includes legal, compliance, architecture, and operations representatives. The board approves use cases, reviews risk assessments, and sets thresholds for automated remediation. Maintain a change log for all interface and middleware updates so audits can trace when and why changes were made.

Auditing requires robust provenance capture. Log both inputs and outputs for agentic actions in a tamper-evident store. Retain contextual metadata such as model version, decision rationale, and timestamps to support retrospective analysis. Ensure logs are accessible to compliance teams and that retention policies meet regulatory requirements. Where sensitive data is involved, store only pseudonymized records in long-term logs and maintain linkage with secure mapping tables where needed for investigation.
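
One common way to make a log tamper-evident is to chain entries by hash, so that altering any earlier record invalidates every later hash. The sketch below assumes JSON-serializable records and an in-memory list; the record fields shown in the usage note are examples only.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder previous-hash for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) keeps the hash stable.
    body = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, record: dict) -> dict:
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"record": record, "prev_hash": prev_hash, "hash": _digest(prev_hash, record)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if _digest(prev_hash, entry["record"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

A record might carry fields such as `{"model_version": "v3", "input_ref": "case-17", "decision": "approve"}`. The chain detects edits but does not prevent them, so the latest hash should also be stored somewhere the log writer cannot modify.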

Performance monitoring must extend beyond traditional infrastructure metrics. Include application-level indicators such as decision accuracy, mean time to recover from failed actions, and business metrics that agents influence. Build dashboards that combine system telemetry with business outcomes so that operators can see the impact of integration on customer experience and revenue. Automate alerts for anomalies and create playbooks that guide operational responses to typical failures.

Cost, resourcing, and change management

Budget planning for agentic AI integration with legacy systems should include licensing for middleware, engineering effort, security tooling, and operational monitoring. Also account for training and change management. Teams that use agents will need clear guidance on when to trust automation and when to require human review. Training sessions and updated process documentation reduce friction at adoption points.

Staffing requires cross-functional skills. You will need integration engineers, data engineers, security specialists, and site reliability engineers. Consider pairing legacy system experts with AI developers to accelerate knowledge transfer. For organizations with limited internal capacity, a phased approach that prioritizes high-ROI use cases reduces the burden while delivering value early.

Change management is a people problem as much as a technical problem. Define clear ownership for agentic actions and ensure that role responsibilities are updated. Communicate transparently with business units about what will change operationally and what safeguards are in place. Provide a feedback loop where business users can report anomalies and request adjustments to agent behavior. This builds trust and accelerates acceptance.

Long term considerations and future proofing

After the initial migration, plan for continuous improvement. Agentic AI integration with legacy systems should not be a one-time event. As agents learn and models improve, update policies for redeployment and validation. Maintain a model registry and a schema registry so that changes are coordinated and can be rolled back if they produce adverse results. This governance lowers the cost of iteration and maintains system stability.

Architect for modularity. Keep agent logic decoupled from backend specifics so that as legacy systems are modernized they can be swapped with minimal disruption. Standardized interfaces, well-documented contracts, and self-contained adapters make it possible to replace a backend with a modern service while leaving agent orchestration intact. This approach protects your investment in agent development and allows teams to focus on business problems rather than plumbing.
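
The decoupling described here can be illustrated with a small interface that both a legacy adapter and a modern adapter satisfy. All class names, the `BAL` field, and the `balance_cents` key are hypothetical; the point is only that the agent-side function depends on the interface, not on either backend.

```python
from decimal import Decimal
from typing import Protocol


class BalanceBackend(Protocol):
    def get_balance_cents(self, customer_id: str) -> int: ...


class LegacyLedgerAdapter:
    """Wraps legacy rows where BAL is a padded decimal string (assumed format)."""

    def __init__(self, rows: dict):
        self._rows = rows

    def get_balance_cents(self, customer_id: str) -> int:
        return int(Decimal(self._rows[customer_id]["BAL"].strip()) * 100)


class ModernServiceAdapter:
    """Wraps a newer service that already reports integer cents."""

    def __init__(self, accounts: dict):
        self._accounts = accounts

    def get_balance_cents(self, customer_id: str) -> int:
        return self._accounts[customer_id]["balance_cents"]


def within_credit_limit(backend: BalanceBackend, customer_id: str, limit_cents: int) -> bool:
    # Agent-side logic: knows only the interface, never the backend details.
    return backend.get_balance_cents(customer_id) <= limit_cents
```

Swapping `LegacyLedgerAdapter` for `ModernServiceAdapter` requires no change to `within_credit_limit`, which is the property that lets a backend be modernized without reworking agent orchestration.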

Invest in observability and feedback mechanisms that tie AI performance to business outcomes. Use continuous evaluation frameworks that monitor fairness, accuracy, and drift. Schedule regular audits of data pipelines, feature derivation, and decision logging. Over time you will learn which integrations provide durable value and which need refactoring or retirement. Treat integration as an ongoing program under product management discipline, with measurable OKRs and review cadences.

Conclusion

Integrating agentic AI into legacy databases and on-premises systems presents an opportunity to accelerate operations and improve decision making without compromising the stability of critical infrastructure. A successful program focuses on risk reduction, incremental delivery, and strong operational controls. Use non-invasive middleware or facade patterns to decouple agents from fragile subsystems. Where necessary, implement hybrid connectors with guarded direct access and circuit breakers to maintain availability. Prioritize data mapping, provenance, and security so that agentic AI integration with legacy systems remains auditable and compliant.

A Q3 migration timeline that minimizes downtime is achievable with disciplined preparation. Start with discovery and inventory, deploy middleware for safe interfacing, run agents in shadow mode for validation, and progress through controlled pilots before full ramp. Throughout the migration maintain a culture of observability and test extensively across environments. Compensating transactions, canary releases, and rollback rehearsals are essential to preserving business continuity. Build runbooks and rehearse incident responses so that teams can react quickly if unexpected behavior occurs.

Governance and change management are equally important. Form an integration governance board to approve high-risk actions and to ensure logging and provenance meet audit requirements. Capture decision context and model metadata to enable post-event investigations and to support regulatory inquiries. Provide training and transparent communication to stakeholders so that users understand what automation does, why it helps, and how to escalate issues. This fosters trust and accelerates adoption.

Long-term value comes from treating agentic AI integration with legacy systems as a continuous program rather than a one-off engineering task. Maintain registries for models and schemas, invest in modular adapters, and continually measure AI impact against business outcomes. By combining careful middleware design, incremental rollout, robust security, and strong governance you can unlock the advantages of agentic AI while keeping legacy systems stable and available. The result is a pragmatic path to modernization that delivers measurable benefits, preserves operational resilience, and positions the organization to iterate faster in future transformation cycles.

To get started, map a pilot use case that has clear business impact yet low risk to critical services. Build the simplest middleware adapter that exposes the required context, run agentic AI in shadow mode, and validate outcomes against baseline metrics. Iterate in short cycles and expand scope only after meeting predefined acceptance criteria. With the right planning and execution your team can integrate agentic AI into legacy environments with minimal disruption and sustainable long-term improvements.