Agentic AI is moving beyond research labs into production systems that automate decision-making and multi-step workflows. As organizations deploy autonomous agents to handle customer service, IT operations, and business processes, they face a new set of governance, access control, audit, and compliance challenges. Clear guidance on agentic AI security and compliance helps teams design systems that are resilient, accountable, and auditable while preserving the speed and automation benefits agents deliver. This post provides service-focused, practical guidance for security architects, compliance owners, and engineering teams responsible for agent deployments. Learn more in our post on Market Map: Top Agentic AI Platforms and Where A.I. PRIME Fits (August 2025 Update).

You will find actionable controls for governance frameworks, identity and access management, telemetry and audit trails, and regulatory mapping. The guidance balances technical controls with operational practices so teams can apply agentic AI security and compliance across the development lifecycle and into continuous operation. Where possible, the recommendations are vendor-neutral and aimed at enabling safe, compliant scaling of autonomous agents in production.

Governance and Policy Foundations

Strong governance is the foundation of any program that must meet agentic AI security and compliance objectives. Governance defines the rules that shape how autonomous agents are designed, trained, tested, deployed, and retired. Without governance, technical controls are applied inconsistently and gaps emerge between what is allowed and what happens in production. A governance program should align with organizational risk appetite and the regulatory obligations that apply to the data and decisions agents will touch. Learn more in our post on Augmented Ops: How Agentic AI and Human Teams Should Share Decision Rights in 2025.

Start by defining an agent policy that covers roles, responsibilities, and decision boundaries. The policy should answer core questions such as which business units can request agents, which problem classes are acceptable for automation, how much human supervision is required, and under what conditions agents may act without human approval. An explicit policy reduces ambiguity and creates a repeatable approval path for new agents.

Establish a governance board or working group that includes representatives from security, compliance, legal, product, and engineering. This multidisciplinary group should review agent proposals, approve risk mitigations, and sign off on monitoring plans prior to deployment. The board should maintain a register of active agents that records each agent's owner, purpose, risk classification, and the controls in place. Treat the register as a living inventory to support audits and investigations.

Integrate governance with the development lifecycle. Require a standardized security and compliance checklist as part of the design and release documentation. Use automated policy gates in CI pipelines where feasible so compliance checks run on each code commit or model update. By embedding checks early you reduce costly rework and ensure that agentic AI security and compliance are considered at every phase.
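
As a rough illustration of an automated policy gate, the sketch below checks a hypothetical agent manifest and returns the violations a CI job could fail on. The field names (`owner`, `risk_level`, `human_review`, `credential_ttl_seconds`) and the one-hour limit are assumptions made for this example, not a standard schema.

```python
# Hypothetical policy values; a real program would load these from governed config.
REQUIRED_FIELDS = ("owner", "purpose", "risk_level", "monitoring_plan")
MAX_CREDENTIAL_TTL_SECONDS = 3600


def policy_gate(manifest: dict) -> list[str]:
    """Return policy violations for an agent manifest; an empty list means pass."""
    violations = [f"missing field: {name}" for name in REQUIRED_FIELDS
                  if not manifest.get(name)]
    if manifest.get("risk_level") == "high" and not manifest.get("human_review"):
        violations.append("high-risk agents require human review")
    if manifest.get("credential_ttl_seconds", 0) > MAX_CREDENTIAL_TTL_SECONDS:
        violations.append("credential TTL exceeds policy maximum")
    return violations


# Example manifest (all values are illustrative).
manifest = {"owner": "payments-team", "purpose": "refund triage",
            "risk_level": "high", "monitoring_plan": "runbook-12",
            "credential_ttl_seconds": 900}
print(policy_gate(manifest))  # a CI step could exit non-zero when this is non-empty
```

Returning a list of violations rather than a boolean lets the pipeline report every failed rule in one run.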

Risk Classification and Impact Assessment

Not all agents are equal. A risk classification framework helps prioritize controls and monitoring investment. Classify agents by impact categories such as low, medium, or high based on factors like access to sensitive data, authority to take financial actions, or potential to materially affect customers. For each category define minimum standards for testing, approvals, and oversight.
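
One way to make the classification repeatable is to encode it as a small function. The sketch below is only an example: the three factors come from the paragraph above, but the rules that combine them and the minimum standards per tier are assumptions that each organization would set for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    touches_sensitive_data: bool
    can_take_financial_actions: bool
    materially_affects_customers: bool


def classify(profile: AgentProfile) -> str:
    """Map an agent profile to an impact tier (illustrative rules only)."""
    if profile.can_take_financial_actions or (
        profile.touches_sensitive_data and profile.materially_affects_customers
    ):
        return "high"
    if profile.touches_sensitive_data or profile.materially_affects_customers:
        return "medium"
    return "low"


# Hypothetical minimum standards per tier.
MINIMUM_STANDARDS = {
    "low": ["automated tests", "owner sign-off"],
    "medium": ["automated tests", "security review", "owner sign-off"],
    "high": ["automated tests", "security review", "red team review", "board approval"],
}

tier = classify(AgentProfile(True, False, True))
print(tier, MINIMUM_STANDARDS[tier])
```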

Conduct a privacy and security impact assessment for each agent. The assessment should document data flows, external integrations, potential for data exfiltration, and failure scenarios. For high-impact agents, require a red team review or tabletop exercise to simulate misuse and identify control weaknesses. These assessments form evidence for compliance reviews and help demonstrate due diligence to regulators.

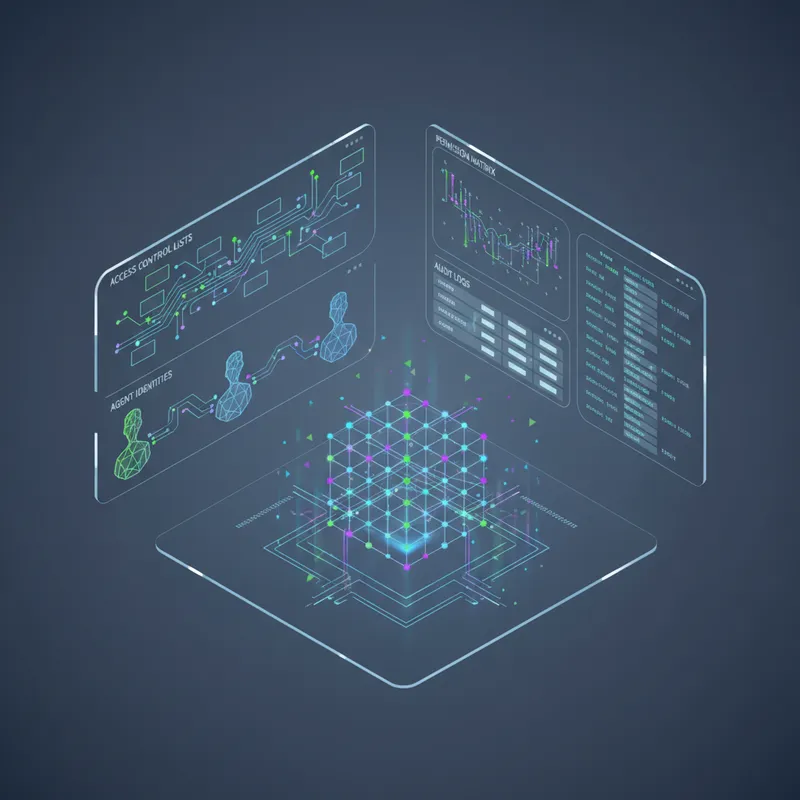

Access Controls and Identity Management

Access control is central to agentic AI security and compliance because agents often act as automated identities that interact with systems, APIs, and data stores. Treat agents as first-class identities with lifecycle-managed credentials, least-privilege access, and auditable actions. Avoid treating agents as simple service accounts with broad privileges. Learn more in our post on Sustainability and Efficiency: Environmental Benefits of Automated Workflows.

Use unique identities per agent instance and apply role-based access control or attribute-based access control to limit what each agent can do. Where possible, use short-lived credentials issued by a secure identity provider. Short-lived credentials reduce the window of risk if an agent or its host is compromised. Ensure credential issuance is tied to the agent lifecycle so credentials are revoked automatically when the agent is retired.
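
To make the idea of expiring, revocable credentials concrete, here is a minimal sketch using only the Python standard library. It is not a substitute for a real identity provider: the signing key, the token layout, and the `revoked` set are all assumptions for illustration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"demo-only-signing-key"  # assumption: a real key lives in the identity provider


def issue_token(agent_id: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a signed token that expires ttl_seconds after issuance."""
    now = time.time() if now is None else now
    payload = json.dumps({"sub": agent_id, "exp": now + ttl_seconds}).encode()
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + signature


def verify_token(token: str, revoked: set, now: Optional[float] = None) -> bool:
    """Accept a token only if it is authentic, unexpired, and not revoked."""
    now = time.time() if now is None else now
    try:
        encoded, signature = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(encoded.encode())
    except ValueError:
        return False
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    claims = json.loads(payload)
    return claims["exp"] > now and claims["sub"] not in revoked


token = issue_token("invoice-agent-7", ttl_seconds=60)
print(verify_token(token, revoked=set()))
```

Retiring an agent then amounts to adding its identifier to the revocation set, and any leaked token stops working on its own once the TTL passes.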

Implement least privilege by scoping permissions narrowly. Instead of granting broad database or cloud roles, give agents access only to the specific tables, queues, or API endpoints required to perform their tasks. Combine permission scoping with just-in-time elevation for rare operations that need a human to temporarily raise an agent's privileges. Just-in-time processes should require approval and produce audit logs to support compliance.
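
The sketch below shows one possible shape for narrow scopes combined with approved, time-boxed elevation. The scope strings, the 15-minute default, and the in-memory audit list are hypothetical choices for the example.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPermissions:
    agent_id: str
    scopes: frozenset                                   # standing, narrowly scoped permissions
    elevations: dict = field(default_factory=dict)      # scope -> expiry time
    audit_log: list = field(default_factory=list)

    def elevate(self, scope: str, approver: str, now: float, ttl: float = 900.0) -> None:
        """Grant a temporary scope; a named human approver is mandatory."""
        if not approver:
            raise PermissionError("just-in-time elevation requires an approver")
        self.elevations[scope] = now + ttl
        self.audit_log.append({"event": "elevate", "agent": self.agent_id,
                               "scope": scope, "approver": approver,
                               "expires": now + ttl})

    def is_allowed(self, scope: str, now: float) -> bool:
        """Allow standing scopes, plus elevated scopes that have not expired."""
        if scope in self.scopes:
            return True
        return self.elevations.get(scope, 0.0) > now


perms = AgentPermissions("refund-agent-1", frozenset({"read:orders_table"}))
perms.elevate("write:refunds_queue", approver="alice", now=0.0, ttl=60.0)
print(perms.is_allowed("write:refunds_queue", now=30.0))
```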

Authentication and Agent Provenance

Strong authentication for agents includes mutual TLS where appropriate or token based authentication with robust rotation and revocation. Maintain provenance metadata that records agent lineage such as model version, training dataset snapshot, and code commit hash. Provenance supports forensic analysis, liability assessment, and regulatory reporting about how decisions were made.

Store provenance and configuration data in an immutable store or append-only log so changes are preserved and traceable. When an agent performs an externally visible action, include provenance identifiers in the request metadata. That practice helps trace decisions back to a specific agent build during post-incident analysis and audits.
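
A hash chain is one simple way to approximate an append-only log in application code. The following sketch is tamper-evident rather than truly immutable (a real deployment would likely rely on a storage system with write-once guarantees), and the record fields shown are illustrative.

```python
import hashlib
import json


class ProvenanceLog:
    """Append-only log where each entry's hash covers the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries = []

    def append(self, record: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = json.dumps(record, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode()).hexdigest()
        self._entries.append({"prev": prev, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self._entries:
            body = json.dumps(entry["record"], sort_keys=True)
            digest = hashlib.sha256((prev + body).encode()).hexdigest()
            if entry["prev"] != prev or entry["hash"] != digest:
                return False
            prev = entry["hash"]
        return True


log = ProvenanceLog()
build_id = log.append({"model_version": "1.4.0", "dataset_snapshot": "snap-0012",
                       "code_commit": "abc1234"})
print(build_id[:12], log.verify())
```

The returned digest can double as the provenance identifier attached to outbound requests.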

Auditability, Monitoring, and Observability

Auditability is a recurring requirement across most regulatory frameworks and is critical for demonstrating agentic AI security and compliance. Design agents to emit structured, contextual logs that capture inputs, outputs, confidence scores, decision rationale where available, and provenance identifiers. Logs must be tamper-resistant and retained according to retention policies aligned with legal and regulatory obligations.
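
A structured decision log can be as simple as one JSON object per line. The sketch below shows one possible record layout; the field names and example values are assumptions rather than a prescribed schema.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def decision_record(agent_id: str, build_id: str, inputs: dict, output: str,
                    confidence: float, rationale: Optional[str] = None) -> str:
    """Serialize one agent decision as a single JSON line."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "build_id": build_id,        # provenance identifier for the agent build
        "inputs": inputs,
        "output": output,
        "confidence": confidence,
        "rationale": rationale,      # may be None when no rationale is available
    }, sort_keys=True)


line = decision_record("support-agent-3", "build-2f9c", {"ticket": "T-1001"},
                       "escalate_to_human", 0.62,
                       "low confidence on refund eligibility")
print(line)
```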

Implement layered monitoring to detect both technical failures and behavioral drift in agent performance. Technical monitoring includes health checks, latency, error rates, and resource usage. Behavioral monitoring focuses on distribution shifts in inputs, changes in output patterns, unusual authorization requests, and deviations from expected decision outcomes.

Use anomaly detection and rule-based alerts to surface suspicious agent behavior. Combine automated alerts with human review processes so that potential incidents are investigated quickly. Maintain a runbook that outlines steps to isolate, roll back, or quarantine an agent when anomalies are detected. A documented runbook demonstrates operational readiness during audits.
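
As a small example of behavioral monitoring, the sketch below flags drift when the mean of a recent window moves too far from a baseline, measured in standard errors. This is a deliberately simple z-score check chosen for illustration; the threshold of 3.0 and the approval-rate metric are assumptions, and production systems often use richer drift tests.

```python
from statistics import mean, stdev


def drift_alert(baseline: list, recent: list, threshold: float = 3.0) -> bool:
    """Return True when the recent mean deviates from the baseline mean
    by more than `threshold` standard errors."""
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return mean(recent) != mu
    standard_error = sigma / len(recent) ** 0.5
    return abs(mean(recent) - mu) / standard_error > threshold


# Hypothetical daily approval rates for an agent.
baseline_rates = [0.10, 0.12, 0.11, 0.09, 0.10, 0.11, 0.12, 0.10]
print(drift_alert(baseline_rates, [0.10, 0.11, 0.10, 0.11]))
print(drift_alert(baseline_rates, [0.30, 0.28, 0.31, 0.29]))
```

An alert from a check like this would feed the human review step rather than trigger automatic action on its own.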

Forensic Readiness and Evidence Collection

Prepare for investigations by collecting the right evidence. Ensure logs are centralized, encrypted at rest, and discoverable. Capture request and response payloads when allowed by privacy rules, and store derived artifacts such as decision traces and intermediate states. For high-impact agents, consider snapshotting the model state and environment variables at the time of each decision so investigators can rebuild the context of an event.

Define retention windows tied to legal hold processes so evidence can be preserved in response to regulatory requests. Test forensic procedures periodically by running simulated incidents to validate that investigators can reconstruct events from available telemetry. Demonstrating that you can rapidly produce reliable evidence strengthens your case for compliance.

Data Governance and Privacy Controls

Agents often handle or infer sensitive data. Data governance policies should specify what data agents are allowed to access, how data is masked or minimized, and how long data can be retained. A data minimization strategy reduces exposure by limiting the datasets that agents can use and by restricting training data to what is necessary to perform the task.

Apply data protection techniques such as tokenization, anonymization, and selective redaction when agents must process sensitive fields. When redaction is not possible use strict access controls and logging combined with encryption in transit and at rest. For personally identifiable information require explicit authorization and clear accountability for any processing performed by an agent.
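
The sketch below illustrates two of these techniques on plain strings: regex-based redaction of email addresses and keyed tokenization of an identifier. The email pattern is intentionally simple and will not catch every valid address, and the key shown is a placeholder assumption.

```python
import hashlib
import hmac
import re

# Simplified email pattern for illustration; not a full RFC-compliant matcher.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a marker."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


def tokenize(value: str, key: bytes) -> str:
    """Map a sensitive value to a stable token using a keyed hash.
    The same value and key always yield the same token, so joins still work."""
    return "tok_" + hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:16]


key = b"demo-only-tokenization-key"  # assumption: real keys come from a key manager
print(redact_emails("Contact jane.doe@example.com about the refund"))
print(tokenize("customer-48213", key))
```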

Consider synthetic data for development and testing environments to avoid exposing sensitive production data during training or evaluation. Where models are trained on live data, document consent and the lawful basis for processing. Records of processing and consent management are essential artifacts for regulators assessing agentic AI security and compliance.

Model Management and Safe Updates

Model updates are a frequent source of drift and unintended behavior. Implement a model governance process that includes versioning, validation, and controlled rollout. Use canary deployments for new models, monitor for degradation or unwanted behaviors, and have rollback mechanisms that are fast and reliable.
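
A canary rollout needs two pieces: a stable way to route a small share of traffic to the new model and a rule for when to roll back. The sketch below shows one simple version of each; the hash-based bucketing, the error-rate metric, and the 2-point tolerance are assumptions for illustration.

```python
import hashlib


def routes_to_canary(request_id: str, canary_percent: int) -> bool:
    """Deterministically send roughly canary_percent of requests to the canary.
    Hashing the request ID keeps routing stable across retries."""
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 100
    return bucket < canary_percent


def should_rollback(stable_error_rate: float, canary_error_rate: float,
                    tolerance: float = 0.02) -> bool:
    """Roll back when the canary is worse than stable by more than the tolerance."""
    return canary_error_rate > stable_error_rate + tolerance


share = sum(routes_to_canary(f"req-{i}", 10) for i in range(10_000)) / 10_000
print(f"observed canary share: {share:.3f}")
print(should_rollback(stable_error_rate=0.01, canary_error_rate=0.05))
```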

Before deploying model updates require automated tests, safety checks, and human review for high impact agents. Include regression tests that validate decision boundaries and scenarios that historically caused issues. Maintain audit trails that link model versions to the test results and approvals that authorized the release. These practices create the evidence you need to demonstrate agentic AI security and compliance.

Regulatory Mapping and Controls

Understanding which laws and regulations apply is a practical requirement for agentic AI security and compliance. Regulations may be sector-specific or cross-border, and they can include privacy rules, financial controls, consumer protection, and safety standards. Conduct a regulatory mapping exercise that lists applicable rules, required controls, and reporting obligations.

For each applicable regulation identify control objectives and metrics you need to demonstrate compliance. Translate legal requirements into technical controls and operational processes. For example if a regulation requires explainability for certain automated decisions define how you will capture decision rationale and how you will present that rationale to auditors or affected individuals.

Assign accountability for regulatory obligations to business units or owners who can respond to audits and enforcement inquiries. Maintain documented evidence packages that include architecture diagrams, data flow maps, policy documents, risk assessments, testing records, and incident logs. A consistent evidence package accelerates audit responses and shows proactive governance of agentic AI security and compliance.

Cross Border Data Flows and Third Party Risk

Agents that integrate external services or process data across jurisdictions create additional complexity. Map all third party integrations and the jurisdictions where data is processed. Ensure contractual protections and data transfer mechanisms are in place for cross border flows. Where vendors are involved require them to meet your baseline security and privacy requirements and to provide transparency into their controls.

Perform periodic third party risk assessments that examine vendor security posture, incident history, and compliance certifications. For high risk third parties include penetration testing and code reviews as part of due diligence. Document remediation plans and monitor vendor compliance over time as part of your agentic AI security and compliance program.

Operational Controls and Incident Response

Operationalize your controls so security and compliance are ongoing practices rather than one-time checkboxes. Define operational roles including agent owners, security stewards, and escalation contacts. Automation can help by enforcing policy gates, rotating credentials, and collecting telemetry. However, automation must itself be subject to oversight and review.

Develop incident response plans tailored to agent related incidents. Agent incidents can include runaway automation, data leaks, unauthorized privilege escalation, or harmful decisions impacting customers. Incident scenarios should include steps to contain, mitigate, and recover from agent incidents along with communication templates for internal stakeholders and external regulators when required.

Practice incident response with tabletop exercises that simulate agent failures and the regulatory reporting that might follow. Exercises help teams rehearse decision making, improve runbooks, and identify gaps in evidence collection. Well practiced teams can reduce the impact of an incident and demonstrate readiness to regulators evaluating your agentic AI security and compliance posture.

Change Management and Continuous Improvement

Change management is vital because agents continuously evolve. Require formal change requests for significant agent behavior changes, environment updates, or integration modifications. Link change approvals to testing artifacts and monitoring plans to ensure changes are safe to deploy.

Adopt a continuous improvement cycle that uses metrics and post incident reviews to refine controls. Track operational metrics such as false positive rates, incident frequency, time to detect, and time to remediate. Use these metrics to prioritize control investments and to demonstrate measurable progress for agentic AI security and compliance.
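
Metrics such as time to detect and time to remediate are straightforward to compute once incident timestamps are recorded consistently. A minimal sketch, assuming each incident stores start, detection, and remediation times in epoch seconds:

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Incident:
    started: float      # when the faulty behavior began
    detected: float     # when monitoring or a human flagged it
    remediated: float   # when the agent was fixed, rolled back, or quarantined


def mean_time_to_detect(incidents: list) -> float:
    return mean(i.detected - i.started for i in incidents)


def mean_time_to_remediate(incidents: list) -> float:
    return mean(i.remediated - i.detected for i in incidents)


# Illustrative data only.
incidents = [Incident(0.0, 60.0, 360.0), Incident(100.0, 220.0, 520.0)]
print(mean_time_to_detect(incidents), mean_time_to_remediate(incidents))
```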

Technical Hardening and Runtime Protections

Technical hardening reduces the attack surface for agents. Harden the host environments, container runtimes, and orchestration platforms that run agents. Use platform-level controls such as sandboxing, resource limits, and network segmentation to limit lateral movement if an agent is compromised. Runtime protections like syscall filtering and container immutability make it harder for malicious code to persist.

Consider using policy enforcement engines that can filter or constrain agent outputs before they reach external systems. For example, implement output validation layers that check decisions for policy violations and escalate or block actions that would violate rules. These control layers provide last-mile protection and can be tightly coupled with monitoring to detect attempted policy breaches.
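
An output validation layer can be sketched as a function that sits between the agent and the systems it acts on and returns allow, escalate, or block. The action kinds and the refund limit below are hypothetical policy values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical policy: which actions exist and when a human must approve.
ALLOWED_KINDS = {"send_reply", "refund", "close_ticket"}
REFUND_ESCALATION_LIMIT = 100.0


@dataclass(frozen=True)
class ProposedAction:
    kind: str
    amount: float = 0.0


def validate(action: ProposedAction) -> str:
    """Return 'allow', 'escalate', or 'block' for a proposed agent action."""
    if action.kind not in ALLOWED_KINDS:
        return "block"          # unknown actions never reach external systems
    if action.kind == "refund" and action.amount > REFUND_ESCALATION_LIMIT:
        return "escalate"       # large refunds need human approval
    return "allow"


for proposed in (ProposedAction("send_reply"),
                 ProposedAction("refund", 250.0),
                 ProposedAction("delete_account")):
    print(proposed.kind, "->", validate(proposed))
```

Defaulting to "block" for anything outside the allow-list keeps the layer safe when an agent proposes an action nobody anticipated.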

Encrypt sensitive data in use where possible using technologies like secure enclaves or trusted execution environments for high assurance scenarios. Combine encryption with strict key management that includes rotation, split knowledge, and separation of duties. These measures strengthen the confidentiality and integrity of sensitive data handled by agents and support compliance requirements.

Testing, Red Teaming, and Validation

Testing should include adversarial scenarios as part of agent validation. Carry out threat modeling and red team exercises targeted at agent decision logic, integrations, and data flows. Simulate abuse cases such as prompt injection, data poisoning, and unauthorized API calls to evaluate how well controls hold up under attack.

Validate that mitigation controls such as input sanitization, output filtering, and access governance effectively reduce risk without causing operational failures. Record test results and remediation actions as part of your agentic AI security and compliance evidence. Repeat testing on model updates and infrastructure changes so you maintain consistent assurance.

Deployment Checklist and Practical Steps

This checklist helps ensure that deployments meet agentic AI security and compliance requirements. Use it as a gate before any agent reaches production.

Policy alignment: Confirm the proposed agent aligns with organizational policy and has board approval where required.

Risk assessment: Complete a privacy and security impact assessment and classify the agent by impact level.

Identity and access: Provision unique identities, enforce least privilege, and enable short lived credentials.

Provenance: Record model version, data snapshots, and code hashes in an immutable store.

Testing: Execute functional, safety, and adversarial tests including red team scenarios.

Monitoring: Instrument structured logging, anomaly detection, and alerting for behavioral drift.

Data protections: Apply minimization, redaction, and encryption policies. Document consent where applicable.

Runbooks: Publish incident response plans and runbooks for containment and rollback.

Compliance evidence: Collect and store artifacts such as assessments, approvals, and test results for audits.

Post deployment: Schedule periodic reviews, rehearse incident responses, and track operational metrics.

Following a consistent checklist reduces surprises during deployments and ensures you have demonstrable controls in place for agentic AI security and compliance reviews.
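
The checklist above can also be enforced mechanically by requiring an evidence reference for every item before release. The sketch below assumes a simple mapping from checklist item to an artifact reference; the item keys mirror the checklist, while the reference strings are made up for the example.

```python
CHECKLIST = (
    "policy_alignment", "risk_assessment", "identity_and_access", "provenance",
    "testing", "monitoring", "data_protections", "runbooks",
    "compliance_evidence", "post_deployment",
)


def deployment_ready(evidence: dict) -> tuple:
    """Return (ready, missing_items) given item -> evidence reference."""
    missing = [item for item in CHECKLIST if not str(evidence.get(item, "")).strip()]
    return (not missing, missing)


# Illustrative evidence references; every item except 'runbooks' is covered.
evidence = {item: f"doc://evidence/{item}" for item in CHECKLIST if item != "runbooks"}
ready, missing = deployment_ready(evidence)
print(ready, missing)
```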

Training, Culture, and Organizational Change

Technical controls matter, but culture and training determine whether you can scale secure and compliant agent deployments. Train engineers and operators on the specific risks posed by autonomous agents, including prompt injection, model drift, and runaway automation scenarios. Security awareness for product managers and business stakeholders ensures that control requirements are understood and funded.

Encourage a blameless reporting culture so that issues discovered in development or production are reported early. Incentivize secure design through performance metrics and by recognizing teams that consistently meet agentic AI security and compliance standards. Cross functional exercises such as purple team reviews that pair security and engineering provide practical knowledge transfer and help operationalize controls.

Provide clear documentation and templates for common artifacts such as privacy impact assessments, model cards, and security checklists. Make resources available in developer portals and integrate guidance into tooling to lower friction. When teams have straightforward templates and automated checks they are more likely to comply with agentic AI security and compliance practices.

Conclusion

Agentic AI offers powerful automation capabilities that can transform business operations, but it also introduces nuanced risks that span governance, access control, auditability, and regulatory compliance. Achieving strong agentic AI security and compliance requires a programmatic approach that blends policy, engineering discipline, and operational rigor. Organizations must treat agents as first-class entities with managed identities, provable provenance, and structured telemetry. They must also implement governance processes that classify risk, authorize deployment, and maintain an up-to-date inventory of active agents.

Technical controls such as least privilege, short-lived credentials, sandboxing, and runtime output filtering reduce attack surface and limit the blast radius when issues occur. Equally important are monitoring and observability practices that capture contextual logs, support anomaly detection, and enable forensic reconstruction. These practices help teams detect compromise or misuse and provide evidence for audits and regulatory responses. Model governance processes, including versioning, testing, and canary rollouts, are essential to prevent drift and regressions that could undermine compliance efforts.

Operationalizing agentic AI security and compliance also demands investment in people and culture. Cross-functional governance bodies, role-based responsibilities, and rehearsed incident response plans accelerate detection and remediation. Continuous training and developer-friendly templates lower the barrier to compliance, while tabletop exercises validate readiness. For third-party components and cross-border data flows, organizations need contractual controls, vendor assessments, and documented data transfer mechanisms to manage external risk.

Finally, make compliance evidence a priority by maintaining immutable records of approvals, test results, decision rationale, and incident investigations. An auditable evidence trail reduces audit friction and shows regulators that you are actively managing risks. By combining governance, identity management, observability, data protection, and organizational readiness you can deploy agents that deliver automation benefits while meeting legal and ethical obligations. With consistent practice and continuous improvement you will build a resilient program that satisfies stakeholders and scales safely.