Organizations that want to move from experimentation to impact need a clear, time-boxed approach. This Rapid Deployment Kit outlines an agentic AI deployment plan that takes teams from pilot to scaled production in 30-, 60-, and 90-day timeboxes. The goal is to reduce time to value while limiting operational and compliance risk. This plan is practical, repeatable, and tailored to enterprise constraints so leaders can align stakeholders, secure the right data and infrastructure, and demonstrate measurable outcomes quickly.

The agentic AI deployment plan described here provides governance guardrails, technical patterns, and change management guidance. It distills common mistakes into avoidable steps and emphasizes rapid iteration, safe testing, and clear success metrics. This approach suits teams that need a packaged rollout that balances velocity and control so that investment in agents delivers predictable outcomes. Read on for a structured 30-, 60-, and 90-day blueprint, supporting operating model recommendations, practical checklists, and templates for measuring success.

Why a Time-Boxed Agentic AI Deployment Plan Works

Deploying agentic AI without a time-boxed method often leads to scope creep, unclear return on investment, and unmanaged risks. A 30–60–90 day agentic AI deployment plan forces decisions and aligns priorities. Time-boxing creates momentum by focusing work on defined outcomes, which reduces indecision and accelerates stakeholder buy-in. It also creates natural review points where teams can validate assumptions and either scale successes or pause and pivot with minimal sunk cost.

From a governance perspective, a structured plan allows risk teams to assess safety, privacy, and compliance early in the process. It establishes data use agreements and logging requirements before agents operate on production data. From a technical perspective, the plan clarifies integration boundaries, monitoring requirements, and rollback procedures so that experiments do not expose the broader environment. From a business perspective, it prioritizes use cases that generate measurable value and reduces time to value by focusing on outcomes rather than features.

Operationalizing agentic AI requires both engineering and organizational readiness. The agentic AI deployment plan makes those dependencies explicit. It prescribes roles, sprint goals, and review gates to ensure teams work in lockstep. The plan encourages minimal viable agent designs that are constrained to specific tasks, which reduces risk and simplifies validation. In short, time-boxed execution converts strategic ambition into operational reality.

Core Principles of an Effective Agentic AI Deployment Plan

The following principles guide an effective agentic AI deployment plan. They should be embedded into the Rapid Deployment Kit and used at every decision point.

Outcome focus: Define specific business outcomes and measurable metrics before building. Avoid vague goals and focus on what constitutes success during each timebox.

Guardrails first: Establish safety, privacy, and compliance rules up front. Agents must have constraints, logging, and oversight to operate safely.

Minimum viable agent: Build the smallest agent that can prove the hypothesis. Start with narrow capabilities to reduce integration complexity.

Iterative validation: Use rapid feedback loops and test in controlled environments. Validate assumptions frequently and make go/no-go decisions at each checkpoint.

Platform reuse: Use modular and reusable components such as connectors, observability pipelines, and governance patterns to accelerate subsequent deployments.

Clear ownership: Assign accountable owners for outcomes, model performance, data quality, and compliance. Clear responsibilities reduce coordination overhead.

30 Day Sprint: Fast Pilot and Proof of Concept

The first 30 days emphasize rapid discovery, hypothesis definition, and a focused pilot. The goal in this period is to validate the value proposition with a working prototype and measurable evidence. A concise agentic AI deployment plan for the first 30 days should include stakeholder alignment, a prioritized use case, data readiness checks, and a proof of concept that demonstrates baseline outcomes.

Key activities during the first 30 days include stakeholder workshops to align objectives and success metrics. Create a project charter that maps the desired outcome to measurable KPIs. Choose a use case that is high impact and low integration complexity. This could be a task automation scenario or a decision support agent that augments a specific team. Use the minimum viable agent concept to constrain scope and reduce risk.

Technical setup focuses on a safe sandbox environment, basic observability, and simple connectors to the required data sources. Implement initial logging and telemetry so that agent actions are auditable from day one. Establish a rollback plan and ensure the team can quickly disable the agent. During this sprint, conduct early testing with a controlled set of users to collect qualitative and quantitative feedback.
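The day-one audit trail and off switch described above can be sketched in a few lines. This is a minimal illustration, not a prescribed implementation: `AgentRuntime`, `act`, and `disable` are hypothetical names, and a real deployment would write to durable storage rather than an in-memory list.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class AgentRuntime:
    """Hypothetical wrapper that audits every action and honors a kill switch."""
    enabled: bool = True
    audit_log: list = field(default_factory=list)

    def disable(self) -> None:
        # The off switch: operators call this to block all further actions.
        self.enabled = False

    def act(self, action: str, payload: dict) -> bool:
        allowed = self.enabled
        # Record the attempt whether or not it was allowed, so the trail is complete.
        self.audit_log.append(json.dumps({
            "ts": time.time(),
            "action": action,
            "payload": payload,
            "allowed": allowed,
        }))
        return allowed


runtime = AgentRuntime()
runtime.act("draft_reply", {"ticket": "T-1"})  # allowed and logged
runtime.disable()
runtime.act("draft_reply", {"ticket": "T-2"})  # blocked, but still logged
```

Logging blocked attempts as well as allowed ones keeps the trail useful for reviewing what the agent tried to do after it was disabled.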

Deliverables at the 30-day checkpoint should include a working prototype, a results summary against the defined KPIs, an initial risk assessment, and a go-forward recommendation. If the pilot meets predefined success criteria, prepare to expand scope in the next timebox. If not, document learnings and iterate or pivot as needed.
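One way to keep the checkpoint decision objective is to encode the predefined success criteria and compare them with pilot results mechanically. The sketch below assumes a hypothetical `Kpi` record and made-up numbers; the KPI names and targets are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    name: str
    target: float
    actual: float
    higher_is_better: bool = True

    def met(self) -> bool:
        if self.higher_is_better:
            return self.actual >= self.target
        return self.actual <= self.target


def go_no_go(kpis: list) -> tuple:
    """Return ("go" or "no-go", names of the KPIs that missed their target)."""
    missed = [k.name for k in kpis if not k.met()]
    return ("go" if not missed else "no-go", missed)


# Illustrative pilot results, not real data.
pilot = [
    Kpi("minutes_saved_per_ticket", target=5.0, actual=6.2),
    Kpi("error_rate", target=0.02, actual=0.035, higher_is_better=False),
]
decision, missed = go_no_go(pilot)
print(decision, missed)
```

Returning the list of missed KPIs alongside the decision gives the team a starting point for the "iterate or pivot" discussion.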

30 Day Checklist

Define outcome and measurable success criteria

Select minimum viable agent use case

Provision sandbox and data connectors

Implement basic logging and telemetry

Run controlled user tests and gather feedback

Produce a pilot results report and risk assessment

60 Day Sprint: Expansion and Robustness

The second 30-day period builds on the pilot and focuses on robustness, broader user adoption, and deeper integration. The goal of the 60-day milestone is to refine the agent, scale its usage to more users or datasets, and harden governance and monitoring. This period also addresses performance optimization and operational readiness.

Begin by analyzing pilot telemetry to identify failure modes, friction points, and opportunities for improvement. Expand the test population progressively to ensure performance holds at scale. During the 60-day period, add necessary connectors and workflows so that the agent integrates with the broader operational environment. Strengthen data validation and implement transformation pipelines to improve data quality.
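Progressive expansion of the test population is often implemented as a percentage rollout. The sketch below is one possible approach, assuming string user IDs and a hypothetical `in_rollout` helper: hashing the ID into one of 100 buckets means a user admitted at 10 percent stays admitted at 50 percent.

```python
import hashlib


def in_rollout(user_id: str, percent: int, salt: str = "agent-pilot") -> bool:
    """Deterministically place a user in the first `percent` of 100 buckets."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


# Illustrative user IDs.
users = [f"user-{i}" for i in range(1000)]
wave_10 = {u for u in users if in_rollout(u, 10)}
wave_50 = {u for u in users if in_rollout(u, 50)}

# Every user in the 10 percent wave is also in the 50 percent wave.
print(wave_10 <= wave_50)
```

Changing the `salt` reshuffles the buckets, which can be useful when a later pilot should not always start with the same users.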

Governance activities intensify during this sprint. Finalize access controls and ensure audit logging is comprehensive. Implement alerting for anomalous agent behavior and set escalation paths for human intervention. Validate that privacy and compliance requirements are consistently met across new integrations. Update the agentic AI deployment plan artifacts to reflect lessons learned and incorporate improved fallback strategies.

Operational teams should work toward automation of routine tasks such as incident triage, retraining triggers, and model version control. Establish runbooks for common failure scenarios. Ensure the support organization is trained on how to interpret agent outputs and intervene when needed. At the 60-day checkpoint, present an updated performance report, an operational model, and a recommendation for scaling to production in the final 30-day period.

60 Day Checklist

Scale population and datasets gradually

Harden connectors and data validation

Finalize access controls and audit logging

Implement monitoring, alerting, and runbooks

Train operations and support teams

Update deployment plan with operational playbooks

90 Day Sprint: Production Rollout and Scale

The final 30-day period transitions the agent from controlled deployment to production scale. The objective is to operationalize the agent across targeted business units, embed it into live workflows, and ensure sustained governance. The 90-day milestone should deliver measurable business impact and a clear path to broader scaling.

Key activities include migrating configuration and models to production-hardened infrastructure, ensuring redundancy and failover, and implementing full lifecycle management for models and agent logic. Complete integration testing with production systems, perform load testing, and validate performance against agreed service levels. Make sure access controls and data protections are enforced in production environments as they were during testing.

At this stage, build organizational capability for continuous improvement. Establish a cadence for evaluating agent performance, retraining schedules, and periodic compliance reviews. Create a roadmap for additional use cases that reuse the platform components and governance practices established during the first 90 days. Provide transparent reporting to stakeholders showing actual impact versus the original target metrics.

Close the 90-day loop with a comprehensive post-implementation review. Document successes, shortcomings, and a prioritized list of enhancements for the next phase. If the agent meets or exceeds the success criteria defined in the agentic AI deployment plan, proceed to scale the solution across more teams and processes with the confidence that governance, monitoring, and operational playbooks exist to manage risk.

90 Day Checklist

Finalize production migration and redundancy

Perform integration and load testing

Implement lifecycle and version control for models

Set retraining and maintenance cadences

Provide stakeholder reporting and ROI analysis

Document runbooks and scaling roadmap

Security, Privacy, and Governance in the Agentic AI Deployment Plan

Security and privacy are not optional when deploying agentic AI at speed. The agentic AI deployment plan must define required controls and how they will be validated. This includes access management, data minimization, encryption in transit and at rest, and robust logging to support forensic analysis. The plan should include a clear process for approving agents to operate on sensitive data and for removing access if anomalous behavior is detected.

Governance is both a set of technical controls and a human process. Define roles for model owners, data stewards, and governance reviewers. Create a lightweight approval workflow that can gate changes without becoming a bottleneck. Establish criteria for when an agent must be paused for human review, and make the criteria objective and testable. Incorporate automated checks into the deployment pipeline so that governance is enforced programmatically where possible.

Privacy considerations must be embedded into design choices. Use data minimization and synthetic data where feasible for testing. Maintain traceability of data lineage so that any output can be traced back to inputs. Ensure that logging retains sufficient context for auditing while avoiding unnecessary exposure of personal data. Finally, plan for regular compliance audits and make remediation part of the continuous improvement process.
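As an illustration of logging that keeps audit context while limiting exposure of personal data, the sketch below redacts free text before it is stored and keeps a request ID for traceability. The patterns are deliberately simple and hypothetical; production systems typically need broader detection than two regular expressions.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Simple stand-in for phone or account numbers: any run of 7 or more digits.
LONG_DIGITS = re.compile(r"\b\d{7,}\b")


def redact(text: str) -> str:
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_DIGITS.sub("[NUMBER]", text)


def audit_entry(request_id: str, agent_input: str, agent_output: str) -> dict:
    # request_id preserves lineage; free text is redacted before storage.
    return {
        "request_id": request_id,
        "input": redact(agent_input),
        "output": redact(agent_output),
    }


entry = audit_entry(
    "req-001",
    "Refund jane.doe@example.com, account 123456789",
    "Refund approved",
)
print(entry["input"])
```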

Governance Playbook Essentials

Define data classification and agent access rules

Automate policy checks in CI/CD pipelines

Require explainable action trails for agent decisions

Set thresholds for human intervention and safe shutdown

Schedule periodic risk reviews and compliance audits
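Several of these playbook items can be enforced programmatically. The sketch below shows what an automated policy check might look like, assuming each agent ships with a manifest dictionary. The manifest fields, the `ALLOWED_DATA` table, and the rules themselves are all hypothetical.

```python
# Hypothetical policy: which data classifications each environment may touch.
ALLOWED_DATA = {
    "sandbox": {"public", "synthetic"},
    "production": {"public", "internal", "confidential"},
}


def policy_violations(manifest: dict) -> list:
    """Return human-readable violations; an empty list means the check passes."""
    problems = []
    env = manifest.get("environment")
    allowed = ALLOWED_DATA.get(env)
    if allowed is None:
        problems.append(f"unknown environment: {env!r}")
    else:
        extra = set(manifest.get("data_classes", [])) - allowed
        if extra:
            problems.append(f"data classes not allowed in {env}: {sorted(extra)}")
    if not manifest.get("audit_logging", False):
        problems.append("audit logging must be enabled")
    if "human_review_threshold" not in manifest:
        problems.append("human_review_threshold is required")
    return problems


manifest = {
    "name": "invoice-triage-agent",
    "environment": "sandbox",
    "data_classes": ["synthetic", "confidential"],
    "audit_logging": True,
}
violations = policy_violations(manifest)
for problem in violations:
    print(problem)
```

In a pipeline, a non-empty result would typically fail the build so that the change cannot ship until the manifest is corrected or an exception is approved.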

Technology, Architecture, and Observability Patterns

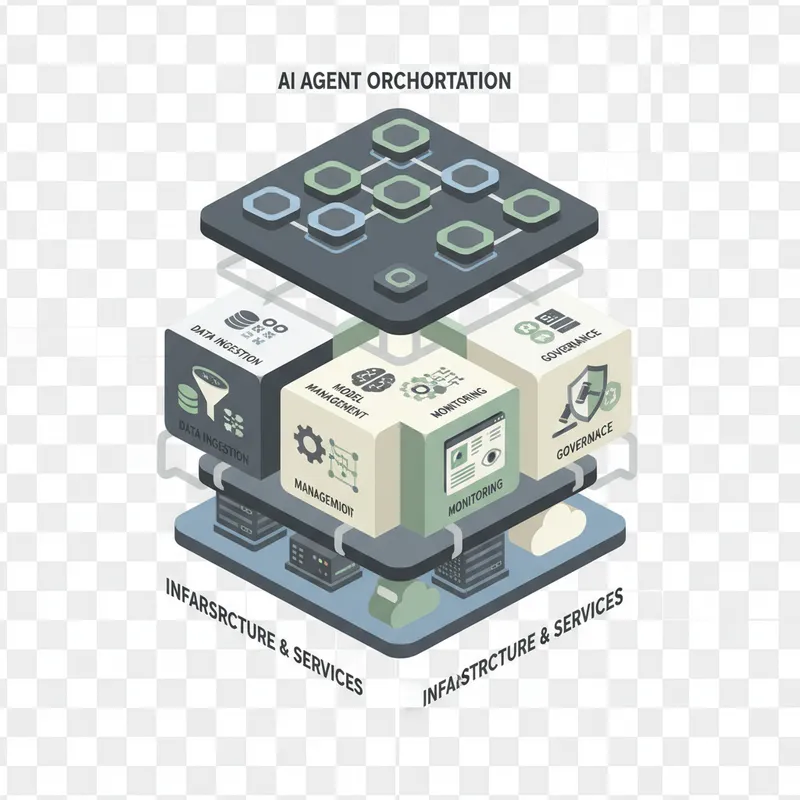

Technical architecture for an agentic AI deployment plan must prioritize modularity, observability, and resilience. Modular architecture separates the agent logic from integration adapters, data pipelines, and monitoring. This separation enables reuse across use cases and simplifies testing. Use containerization and orchestration for scalable deployments, and consider managed services where appropriate to reduce operational overhead.

Observability is essential. Instrument agents to capture inputs, decisions, confidence scores, and downstream effects. Aggregate telemetry into dashboards that correlate agent activity with business impact. Implement anomaly detection to surface unusual patterns in agent behavior and data drift. Integrate observability with incident management so that alerts trigger the right human workflows.
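Anomaly detection on agent telemetry can start very simply. The sketch below flags a metric value that sits far from its recent baseline using a z-score; the metric (actions per minute), the numbers, and the threshold of three standard deviations are assumptions for illustration, and more robust methods may be warranted for skewed or seasonal data.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, z_limit: float = 3.0) -> bool:
    """Flag `latest` if it is more than `z_limit` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_limit


# Illustrative baseline: agent actions per minute.
baseline = [12, 11, 13, 12, 14, 12, 11, 13]
print(is_anomalous(baseline, 13))  # within the normal range
print(is_anomalous(baseline, 40))  # far outside the baseline
```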

Design for graceful degradation. Agents should fail safe and provide human fallback options when confidence is low. Implement throttles and circuit breakers to protect upstream systems from unexpected load. Use versioned APIs and feature flags to enable incremental rollouts and quick rollbacks. The agentic AI deployment plan should include templates for these patterns so they are applied consistently across deployments.
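The fail-safe behavior described above can be sketched as a routing decision that combines a confidence threshold with a circuit breaker. `CircuitBreaker`, `route`, and the default thresholds are hypothetical; this version uses a manual reset, whereas many implementations also add a timed half-open state.

```python
class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; a manual reset closes it."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = 0

    @property
    def open(self) -> bool:
        return self.failures >= self.max_failures

    def record(self, success: bool) -> None:
        # A success clears the streak; a failure extends it.
        self.failures = 0 if success else self.failures + 1

    def reset(self) -> None:
        self.failures = 0


def route(confidence: float, breaker: CircuitBreaker, min_confidence: float = 0.8) -> str:
    """Decide who handles the task: the agent or a human."""
    if breaker.open or confidence < min_confidence:
        return "human"
    return "agent"


breaker = CircuitBreaker(max_failures=2)
print(route(0.95, breaker))  # agent handles it
print(route(0.50, breaker))  # low confidence goes to a human
breaker.record(False)
breaker.record(False)
print(route(0.95, breaker))  # breaker is open, so a human handles it
```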

Measuring Success and Demonstrating ROI

Measuring impact requires both leading indicators and outcome metrics. Define metrics that map directly to the business outcome identified at the start. For example, measure time saved, error reduction, throughput improvements, or revenue influenced. Use A/B tests or canary rollouts where possible to validate causality. Include qualitative measures such as user satisfaction and adoption rate alongside quantitative KPIs.
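When the outcome is a success rate, such as tasks completed without rework, a two-proportion z-test is one common way to check whether the difference between a control group and an agent-assisted group is likely to be more than noise. The sketch below uses only the standard library; the counts are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for the difference between two success rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Illustrative counts: group A is the control, group B uses the agent.
z, p = two_proportion_z_test(success_a=400, n_a=500, success_b=440, n_b=500)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value supports the claim that the agent changed the rate, but only if users were assigned to groups randomly; otherwise the comparison can reflect who opted in rather than what the agent did.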

Set reporting cadences that align with stakeholder expectations. Produce a concise dashboard that updates in near real time and an executive summary that highlights the bottom-line impact. For the agentic AI deployment plan, the most persuasive evidence often comes from a combination of a successful pilot demonstrating statistical improvement and a realistic scaling plan that projects full value realization. Include sensitivity analysis to show upside and downside scenarios.

Finally, quantify operational costs and compare them against the value delivered. Include development, infrastructure, monitoring, and governance costs in the analysis. Present a clear payback timeline and outline how the platform and reusable components will reduce costs for future deployments. This makes investment decisions easier and supports continued funding for scaling.
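A payback timeline reduces to simple arithmetic once costs and value are estimated. The sketch below assumes a one-time upfront cost and constant monthly value and run cost, which is a simplification; the function name and all figures are illustrative.

```python
from math import ceil
from typing import Optional


def payback_month(upfront_cost: float, monthly_value: float, monthly_run_cost: float) -> Optional[int]:
    """Number of months until cumulative net value covers the upfront cost, or None."""
    net = monthly_value - monthly_run_cost
    if net <= 0:
        return None  # the deployment never pays back under these assumptions
    return ceil(upfront_cost / net)


# Illustrative: 120,000 to build, 40,000 monthly value, 10,000 monthly run cost.
print(payback_month(120_000, 40_000, 10_000))
```

Running the same function with pessimistic and optimistic inputs is a lightweight way to produce the upside and downside scenarios for a sensitivity analysis.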

Organizational Change Management and Adoption

Technology is only part of the equation. Adoption depends on people and processes. The agentic AI deployment plan must include a change management strategy that prepares users for new ways of working. Identify early adopters and subject matter champions who can validate functionality and evangelize benefits. Provide role-based training that focuses on how agents change daily workflows and how to interpret agent outputs correctly.

Communication is critical. Share clear messaging that explains why the agent is being introduced, what success looks like, and how feedback will be used. Use town halls, demo sessions, and hands-on workshops to build trust and momentum. Capture user feedback systematically and incorporate it into the product backlog so users see their input reflected in subsequent releases.

Consider incentives for adoption that align with organizational goals. Incorporate agent usage and outcomes into performance review discussions where appropriate. Monitor adoption metrics such as active users, frequency of use, and task completion rates. Use these metrics as leading indicators for long-term value and adjust the rollout approach to address adoption barriers as they arise.

Scaling the Platform Beyond 90 Days

After a successful 90-day rollout, plan how to scale the agent platform responsibly. Reuse the components that worked well, such as connectors, governance templates, and observability pipelines. Build a catalog of approved agent blueprints that streamline future deployments. Create a central platform team that provides core services and enables decentralization of use case development by business units.

Govern the platform with a lightweight operating model that balances central standards and local flexibility. Define clear onboarding paths for new teams, provide templates and training, and enforce baseline policy checks automatically. Establish a quarterly review where platform performance, security posture, and compliance status are evaluated. Use this cadence to prioritize platform improvements and to retire outdated components.

Invest in developer experience. Better tooling reduces build time and improves consistency. Provide SDKs, deployment templates, and observability libraries that make it easy to stand up new agents following the agentic AI deployment plan. Over time, a well-governed and user-friendly platform reduces marginal cost and increases the speed at which new value can be realized.

Common Pitfalls and How to Avoid Them

Many organizations stumble when scaling agentic AI. Common pitfalls include unclear success criteria, over-engineering early prototypes, neglecting governance, and failing to plan for operational support. The agentic AI deployment plan addresses these pitfalls directly through time-boxed validation, minimum viable agent designs, upfront guardrails, and operational runbooks.

Avoid feature bloat in the pilot stage by focusing on the smallest change that can produce measurable impact. Resist building broad autonomy before you have strong monitoring and human oversight. Ensure that stakeholders understand trade-offs and are prepared to accept incremental progress rather than expecting perfect solutions at launch. Lastly, plan for change in team structure and processes as the agent moves to production so that long-term support is sustainable.

Sample 90 Day Roadmap Template

Below is a condensed sample roadmap that follows the agentic AI deployment plan structure. Use it as a starting point and adapt milestones to your organizational context.

Days 1 to 7: Stakeholder alignment, use case selection, success metrics definition.

Days 8 to 14: Sandbox provisioning, data access approvals, initial connectors.

Days 15 to 30: Prototype build, controlled testing, pilot evaluation, and go/no-go decision.

Days 31 to 45: Expand user set, improve data pipelines, enhance telemetry and logging.

Days 46 to 60: Harden governance, automate policy checks, finalize runbooks.

Days 61 to 75: Performance tuning, integration testing, load testing.

Days 76 to 90: Production migration, stakeholder reporting, scale planning.

Practical Tools and Templates to Include in Your Rapid Deployment Kit

To operationalize the agentic AI deployment plan, include templates that make execution repeatable. Suggested items are a project charter template, risk assessment checklist, data access request form, model performance dashboard template, runbook template, and a governance approval checklist. These artifacts streamline coordination and ensure the right questions are asked at each stage.

Also include sample queries and scripts for generating telemetry, a list of recommended metrics, and a decision rubric for human-in-the-loop interventions. Provide training materials that can be localized to different teams. Packaging these materials as part of the Rapid Deployment Kit reduces ramp-up time for subsequent pilots and helps maintain consistency across deployments.

Conclusion

Moving from experimentation to impact requires a packaged, time-boxed approach that balances speed and safety. The Rapid Deployment Kit described in this article is an actionable agentic AI deployment plan designed to reduce time to value while limiting operational and compliance risk. By structuring work into 30-, 60-, and 90-day sprints, teams can validate assumptions quickly, harden systems incrementally, and scale with confidence when outcomes meet defined success criteria. The plan emphasizes minimum viable agent development, strong governance, robust observability, and practical change management so that the technology delivers measurable business benefits.

Healthcare, financial services, manufacturing, and other industries face unique regulatory and operational constraints. A repeatable agentic AI deployment plan reduces uncertainty by codifying guardrails and patterns that work across contexts. Organizations that adopt a time-boxed rollout tend to see faster adoption because stakeholders can observe tangible benefits early and trust the controls that protect their operations. This trust is important because agentic AI introduces new paradigms in autonomy and decision-making that require careful oversight as adoption grows.

Execution discipline matters. Clear roles, a prioritized backlog, and a focus on measurable outcomes are the levers that convert pilots into production-scale deployments. Investments in modular architecture, telemetry, and developer experience pay dividends by lowering the marginal cost of future use cases. Equally important is the human element. Training, communication, and ongoing support ensure that users understand the agent as a collaborator rather than a black box. That mindset shift accelerates adoption and improves the quality of feedback that fuels subsequent iterations.

The agentic AI deployment plan also highlights the need for ongoing governance. Rather than treating governance as a one-time checklist, integrate it into CI/CD pipelines and decisioning flows. Automate policy checks where possible and maintain a regular cadence for audits and risk reviews. This approach ensures that agents remain aligned with organizational objectives and legal obligations as they evolve.

Finally, scale requires reusability. Capture what worked and create a catalog of blueprints, connectors, and monitoring patterns. Use the Rapid Deployment Kit to onboard new business units rapidly and apply lessons learned to accelerate future projects. With a disciplined agentic AI deployment plan, organizations can reduce time to value, lower risk, and unlock sustainable operational improvements that compound over time.

If you are preparing to deploy agents at speed, start with a clear, time-boxed pilot that maps directly to business outcomes. Use the 30-, 60-, and 90-day framework to manage expectations, prove value, and build the platform capabilities needed for scale. That approach transforms agentic AI from a strategic ambition into a pragmatic engine of sustained impact.