The rise of agentic systems is changing how organizations conceive of work. In Q3 2025, the agentic AI future of work has moved from theoretical debate to boardroom action. HR leaders and operations teams are no longer debating whether to explore autonomous software collaborators. They are redesigning roles, workflows, and governance to get measurable results this quarter. This perspective piece looks at what agentic AI does to operational layers, how it changes day-to-day responsibilities, and the practical steps HR and Ops teams should take in the next three months to secure impact. Learn more in our post on Cost Modeling: How Agentic AI Lowers Total Cost of Ownership vs. Traditional Automation.

The shift is not a simple upgrade to existing automation. The agentic AI future of work implies a new class of software that plans, negotiates, coordinates, and executes across systems with minimal human prompting. That capability reshapes how teams are organized, how tasks are assigned, and how performance is measured. The good news is that the transition is manageable when approached as an operational redesign rather than a point technology rollout. This article provides a strategic lens and an actionable checklist for Q3 2025 so that HR and Ops can move from pilots to production safely, ethically, and with measurable ROI.

What agentic AI is and why it matters now

Agentic AI refers to systems that can act autonomously to achieve goals, adapt to changing conditions, and coordinate across diverse tools and stakeholders. In practice, these systems can handle workflows end to end, escalate issues, and request human input selectively. The phrase "agentic AI future of work" captures both the technology and its organizational consequences. Instead of serving only as an assistant, agentic AI becomes a team member that owns tasks and outcomes. Learn more in our post on Custom Integrations: Connect Agentic AI to Legacy Systems Without Disruption.

Three changes make agentic AI particularly relevant in 2025. First, models and orchestration platforms have matured enough to support safe autonomy. Second, integration layers and APIs are now standard across enterprise systems, which reduces deployment friction. Third, executives demand scalable impact and cost efficiency at pace. Together, these forces turned the agentic AI future of work from an academic concept into a strategic priority. Once leaders accept that software can carry operational authority, everything from staffing plans to procurement cadence shifts.

For HR and Ops, the question is not just whether we can use agentic AI, but how it will change the definition of a role. Job descriptions must now account for supervising, training, and collaborating with agentic systems. Performance frameworks must include outcomes produced jointly by humans and agents. Framing the shift as the agentic AI future of work helps teams focus on practical design choices rather than abstract fears. Addressing those design choices this quarter yields early wins and reduces rework later.

How agentic AI is reorganizing operational roles and workflows in 2025

Agentic AI rewrites the rules of operational work at three levels: task allocation, decision authority, and escalation patterns. At the task level, routine, repeatable steps become prime candidates for delegation to agents. That moves human time toward higher-value activities such as relationship management, complex problem solving, and strategic judgment. The result is a shift in headcount emphasis and skill mix across operations teams. Organizations that prepared for the agentic AI future of work saw faster throughput and improved customer responsiveness. Learn more in our post on Continuous Optimization: Implement Closed‑Loop Feedback for Adaptive Workflows.

Decision authority is also recast. Agents can make deterministic decisions based on rules and data, and they can propose probabilistic recommendations for human approval. This hybrid model requires clear boundaries. Who can override an agent? What are the thresholds for agent autonomy? Answering those questions reduces latency and clarifies accountability. The agentic AI future of work reminds leaders that these boundaries are organizational decisions, not purely technical ones.
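
To make "thresholds for agent autonomy" concrete, here is a minimal sketch of how a team might encode decision boundaries as an explicit policy. Everything in it is a hypothetical illustration, not a product API: the names `AutonomyPolicy`, `Decision`, and `route`, the confidence cutoff of 0.9, and the value caps of 1,000 and 10,000 are assumptions chosen only to show the shape of the rule.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"      # agent acts on its own
    HUMAN_APPROVAL = "human_approval"  # agent proposes, a human approves
    ESCALATE = "escalate"              # outside the agent's authority entirely


@dataclass(frozen=True)
class AutonomyPolicy:
    # Hypothetical thresholds; each organization sets its own values.
    min_confidence: float = 0.9       # below this, a human must approve
    max_auto_value: float = 1_000.0   # cap on value the agent may commit alone
    max_value: float = 10_000.0       # above this, escalate to an accountable owner


@dataclass(frozen=True)
class Decision:
    rule_based: bool   # True if produced by deterministic business rules
    confidence: float  # model confidence for probabilistic recommendations
    value: float       # business value at stake (e.g. an order amount)


def route(decision: Decision, policy: AutonomyPolicy) -> Route:
    """Apply the documented autonomy boundaries to a single agent decision."""
    if decision.value > policy.max_value:
        return Route.ESCALATE
    if decision.value > policy.max_auto_value:
        return Route.HUMAN_APPROVAL
    if decision.rule_based:
        return Route.AUTO_EXECUTE
    if decision.confidence >= policy.min_confidence:
        return Route.AUTO_EXECUTE
    return Route.HUMAN_APPROVAL
```

Checking the value caps before the rule and confidence tests means even a deterministic rule cannot push a large transaction through on its own. Whether that ordering suits a given workflow is exactly the kind of organizational decision the paragraph above describes.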

Escalation patterns change because agents can surface issues earlier and route them to the right human at the right time. Instead of a single operations inbox, teams may rely on an agentic layer that triages, bundles related issues, and recommends owners. That reduces context switching and improves resolution times. Transitioning to that model requires redefining handoffs, updating internal SLAs, and retraining staff to trust and work with the agentic layer.

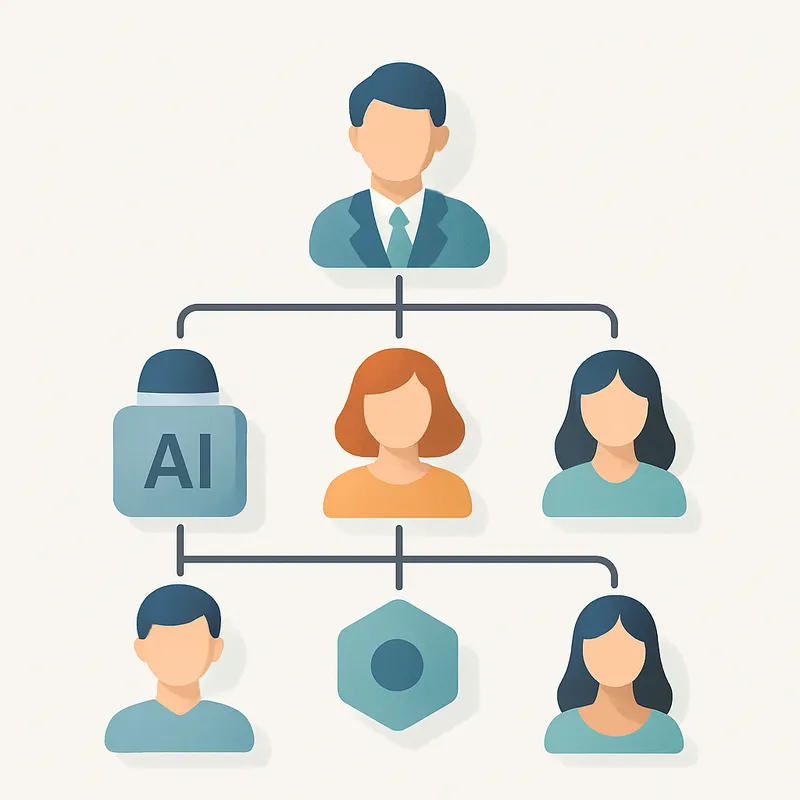

Organizational charts are being redrawn. New roles are appearing, such as agent experience designers, agent performance analysts, and agent governance officers, while traditional roles absorb oversight responsibilities. The recurring theme across organizations embracing this shift is that the agentic AI future of work is less about replacing people and more about augmenting capability and redistributing cognitive labor.

Representative changes by function

In operations teams, routine approvals, scheduling, and status reporting are most affected. In HR, candidate screening, onboarding coordination, and policy interpretation are increasingly supported by agents. In finance, basic reconciliation, exception handling, and vendor communications are delegated to agentic systems. Each of these changes affects how teams prioritize hiring and training. The agentic AI future of work framing helps HR and Ops translate those shifts into hiring plans, learning roadmaps, and revised KPIs.

Practical steps HR and Ops teams should take this quarter

Q3 2025 is an ideal moment to move from experiment to scale with agentic systems. Below are practical steps organized into assessment, design, implementation, and governance phases. Each step is actionable and can be completed or initiated within a quarter. Embracing the agentic AI future of work now prevents late-stage rework and positions teams for measurable results.

Assessment. Begin with a focused audit of workflows that are high volume, rule-based, and have clear outcomes. Map tasks by time spent, error rate, and dependency complexity. Identify low-risk pilots where agents can reduce cycle time without exposing critical business rules. Use the audit to prioritize 3 to 5 pilot workflows for the quarter. Framing the audit around the agentic AI future of work helps stakeholders see the transition as strategic rather than experimental.
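
One way to turn the audit into a ranked shortlist is a simple scoring pass over the mapped workflows. This is a sketch under stated assumptions: the `Workflow` fields mirror the audit dimensions named above, but the scoring formula (time spent weighted up by error rate and down by dependency count) and the names `pilot_score` and `pick_pilots` are hypothetical and illustrative, not a standard method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    name: str
    hours_per_week: float    # human time currently spent on the workflow
    error_rate: float        # fraction of runs with errors, 0..1
    dependencies: int        # number of systems or teams it touches
    business_critical: bool  # True if it exposes critical business rules


def pilot_score(wf: Workflow) -> float:
    """Hypothetical score: more time and more errors raise it,
    more dependencies lower it. Weights are illustrative only."""
    return wf.hours_per_week * (1 + wf.error_rate) / (1 + wf.dependencies)


def pick_pilots(workflows: list[Workflow], max_pilots: int = 5) -> list[str]:
    """Rank low-risk workflows and return up to max_pilots names."""
    # Keep pilots low risk: exclude anything touching critical business rules.
    candidates = [wf for wf in workflows if not wf.business_critical]
    ranked = sorted(candidates, key=pilot_score, reverse=True)
    return [wf.name for wf in ranked[:max_pilots]]
```

The hard exclusion of business-critical workflows, rather than a score penalty, reflects the "low-risk pilots" guidance: a high-volume critical workflow should not win a pilot slot just because it is busy.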

Design. For each prioritized workflow, define clear decision boundaries, success metrics, and human-in-the-loop touchpoints. Create role statements that specify who trains the agent, who reviews its outcomes, and who is accountable for exceptions. Draft a runbook that explains how to escalate issues. Building these artifacts up front prevents confusion during rollouts. When design sessions are framed around the agentic AI future of work, teams align on both technology and responsibility.

Implementation. Start small and instrument thoroughly. Deploy agents in shadow mode, where they operate in parallel with humans and offer recommendations without taking final action. Collect performance data and measure improvement in throughput, accuracy, and employee experience. For live deployments, use feature flags and phased rollouts to limit the blast radius. Communication to staff should be transparent about what agents will do and how progress will be measured. Building the agentic AI future of work into internal communications reduces anxiety and builds adoption.
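
The two instrumentation ideas above, shadow-mode comparison and phased rollout, can be sketched in a few lines. This is an illustration, not a reference implementation: `ShadowRecord`, `agreement_rate`, and `in_rollout` are hypothetical names, and using a SHA-256 hash of the case ID for percentage bucketing is one common technique assumed here, not something the article prescribes.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ShadowRecord:
    case_id: str
    agent_action: str  # what the agent recommended (logged, never executed)
    human_action: str  # what the human actually did


def agreement_rate(records: list[ShadowRecord]) -> float:
    """Share of shadow-mode cases where the agent matched the human decision."""
    if not records:
        return 0.0
    matches = sum(r.agent_action == r.human_action for r in records)
    return matches / len(records)


def in_rollout(case_id: str, percent: int) -> bool:
    """Stable percentage bucketing for a phased rollout.

    The same case ID always maps to the same bucket (0..99), so raising
    `percent` from 10 to 50 only ever adds cases and never flips earlier
    ones back to the human-only path.
    """
    digest = hashlib.sha256(case_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return bucket < percent
```

A team might, for example, require a sustained agreement rate above a target it sets before enabling live actions for the first small rollout percentage; the target itself is a governance choice rather than something the code decides.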

Governance. Establish a governance forum that meets weekly during rollouts and then transitions to monthly oversight. The forum should include HR, Ops, legal, security, and a technical owner. Define policies for data access, consent, explainability, and audit logs. Document the conditions under which an agent must pause its autonomy. Governance ensures that the agentic AI future of work becomes a durable capability rather than a series of orphaned pilots.
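
Documented pause conditions are most useful when they are machine-checkable. The sketch below assumes the governance forum expresses each condition as a metric with a limit; the metric names (`error_rate`, `escalation_rate`, `audit_log_coverage`) and their limits are hypothetical examples, and `PauseRule` and `pause_reasons` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PauseRule:
    metric: str
    limit: float
    direction: str  # "max": pause if value exceeds limit; "min": pause if below


# Hypothetical example rules; the governance forum would own the real values.
RULES = [
    PauseRule("error_rate", 0.05, "max"),
    PauseRule("escalation_rate", 0.30, "max"),
    PauseRule("audit_log_coverage", 1.0, "min"),
]


def pause_reasons(metrics: dict[str, float], rules: list[PauseRule] = RULES) -> list[str]:
    """Return every documented pause condition that is currently violated.

    A missing metric counts as a violation, because the agent cannot be
    shown to be operating within its bounds.
    """
    reasons = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            reasons.append(f"{rule.metric}: not reported")
        elif rule.direction == "max" and value > rule.limit:
            reasons.append(f"{rule.metric}={value} exceeds {rule.limit}")
        elif rule.direction == "min" and value < rule.limit:
            reasons.append(f"{rule.metric}={value} below {rule.limit}")
    return reasons
```

An empty list means the agent may keep its autonomy; any entry is a documented reason to pause it and route work back to humans, which also gives the forum a readable record of why the pause happened.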

Quick checklist for Q3

Complete a 2-week workflow audit focused on volume and risk.

Choose 3 to 5 pilot workflows with measurable KPIs.

Create role definitions that include agent supervision duties.

Deploy agents in shadow mode and instrument outcomes.

Stand up a cross-functional governance forum.

Plan a 6-month upskilling curriculum for affected teams.

These steps form a cohesive quarterly plan that turns early curiosity about the agentic AI future of work into concrete operational improvements. While technical teams handle integration, HR and Ops drive adoption and governance, which are essential for sustainable results.

Redesigning org charts, governance, skills and metrics

Adoption of agentic tools requires structural changes. Traditional org charts assume fixed human resources. The agentic AI future of work requires org charts that include nonhuman actors and collaborative nodes. That means documenting which teams own which agents, how agents interface with human roles, and how responsibility flows when agents are unavailable. Visual org artifacts that incorporate agents make roles and responsibilities explicit.

Governance frameworks must cover lifecycle management, ethical constraints, and incident response. Lifecycle management includes version control for agent behavior, retraining cadence, and decommission criteria. Ethical constraints govern boundaries like prohibited actions, nondiscrimination checks, and privacy safeguards. Incident response clarifies who takes control when an agent behaves unexpectedly. Embedding these governance items into standard operating procedures makes the agentic AI future of work operationally safe.

Skills and hiring practices evolve too. The most valuable skill sets include agent supervision, process-level prompt engineering, agent performance analysis, and change management. HR needs to inventory existing skills, identify gaps, and design accelerated training. Apprenticeships and rotational programs that pair human operators with agents accelerate learning. A shared competency framework for the agentic AI future of work helps recruiters identify candidates who can work fluidly with autonomous systems.

Metrics need to reflect joint human-agent performance. Classic metrics like time to resolution, error rate, and customer satisfaction remain important but must be attributed properly. For example, measure the percentage of tasks completed autonomously, the rate of successful handoffs, and the time humans spend on exceptions. Those measures tell you whether agents are improving throughput or simply shifting pain points. Review metrics regularly with stakeholders to align incentives and avoid local optimizations that harm system-level performance.
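
The three attribution metrics named above can be computed from a simple per-task log. This sketch assumes such a log exists with the fields shown; `TaskRecord`, `joint_metrics`, and in particular the definition of a "successful" handoff (the human received enough context to finish without rework) are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskRecord:
    autonomous: bool                # completed end to end by the agent
    handed_off: bool                # agent passed the task to a human
    handoff_succeeded: bool         # assumed meaning: human finished without rework
    human_exception_minutes: float  # human time spent handling exceptions


def joint_metrics(tasks: list[TaskRecord]) -> dict[str, float]:
    """Attribute outcomes across humans and agents for one reporting period."""
    total = len(tasks)
    handoffs = [t for t in tasks if t.handed_off]
    pct_autonomous = (100 * sum(t.autonomous for t in tasks) / total) if total else 0.0
    handoff_success = (
        sum(t.handoff_succeeded for t in handoffs) / len(handoffs) if handoffs else 0.0
    )
    return {
        "pct_autonomous": pct_autonomous,
        "handoff_success_rate": handoff_success,
        "human_exception_minutes": sum(t.human_exception_minutes for t in tasks),
    }
```

Reading the three numbers together is the point: a rising autonomous percentage alongside rising exception minutes suggests agents are shifting pain points rather than removing them.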

Compensation and career path implications

As agents absorb routine work, compensation may need recalibration. When job content changes, job families and pay bands should be reviewed. It is also important to map career ladders that include agent-related competencies. Create senior specialist tracks for agent performance managers and recognize expertise in orchestrating complex human-agent workflows. Doing so makes the agentic AI future of work a career catalyst rather than a threat.

Change management, adoption and ethics for operations teams

Successful adoption requires both technical change management and cultural work. Start by creating a coalition of early adopters who can pilot agentic workflows and share results with peers. Document early wins in both productivity and employee experience. Use those cases to create a library of standard operating models for common workflows. Framing the agentic AI future of work as a productivity and quality play increases buy-in across teams.

Training is not optional. Provide role-based learning modules that cover how to interact with agents, how to audit agent outputs, and how to drive continuous improvement. Simulation labs where staff practice with agents in realistic scenarios build comfort and competence faster. Pair learning with certification so teams can demonstrate readiness to assume higher levels of agent oversight.

Ethics and trust are central. Agents that intervene in hiring, employee evaluation, or customer credit decisions need high transparency and traceability. Provide explanations of agent decisions and ensure easy access to human review. Schedule regular bias and fairness audits. These practices build trust and help the organization avoid regulatory and reputational risk as the agentic AI future of work takes hold.

Communication tactics to reduce fear and resistance

Communicate early, often, and with clarity. Explain what agents will do, what they will not do, and how they will impact roles. Share timelines and realistic expectations. Invite employees to pilot and provide feedback. Celebrate wins that result from human-agent collaboration. Narrative framing that positions the agentic AI future of work as an enabler of better work rather than a replacement tool reduces resistance and preserves morale.

Case examples and a playbook for Q3 2025

Below are three short examples that illustrate practical implementations of agentic systems across common operational areas. Each example includes an objective, approach, metrics, and lessons learned. These simplified cases are representative and can be adapted by most mid-sized to large organizations pursuing the agentic AI future of work this quarter.

Case 1: Customer operations. Objective: Reduce average response time and improve first-contact resolution. Approach: Deploy an agentic layer that triages inbound inquiries, resolves low-complexity cases autonomously, and bundles complex cases for specialist teams. Metrics: average response time, first-contact resolution rate, escalation rate. Lessons: Start with a narrow scope, invest in accurate routing, and create clear fallback rules so agents route to human teams when confidence is low. The resulting outcome is faster service and more focus time for specialists.

Case 2: HR onboarding. Objective: Shorten time to productivity for new hires. Approach: Use agents to orchestrate onboarding tasks such as account provisioning, scheduling orientation, and collecting paperwork. The agent coordinates across systems and nudges relevant human approvers only when exceptions occur. Metrics: days to productivity, completion rate of onboarding tasks, new hire satisfaction. Lessons: Integrate with identity systems and ensure agents respect privacy boundaries. For HR teams, the improvement was a consistent onboarding experience and fewer missed tasks.

Case 3: Procurement operations. Objective: Reduce PO cycle time and improve vendor responsiveness. Approach: Deploy agents to validate requisitions, match invoices to contracts, and manage status updates. Agents negotiate predefined discount terms and escalate exceptions to category managers. Metrics: PO cycle time, invoice dispute rate, vendor satisfaction. Lessons: Codify negotiation parameters and audit agent negotiations regularly. Procurement teams saw cost and time savings while preserving human oversight for strategic exceptions, which is the agentic AI future of work in action.

For each case, the playbook steps are the same: identify target workflows, define autonomy boundaries, pilot in shadow mode, measure outcomes, and scale with governance. This repeatable process turns curiosity about the agentic AI future of work into predictable operational value.

Risks, pitfalls and how to mitigate them

Adoption comes with risks. Overtrusting agents without proper oversight, misconfiguring decision boundaries, and neglecting employee experience are common pitfalls. Mitigations include conservative autonomy thresholds at launch, mandatory human review for sensitive decisions, and comprehensive monitoring. Regular post-deployment audits identify drift in agent behavior and ensure alignment with policy. When teams frame these risks within the agentic AI future of work narrative, they are more likely to invest in controls that protect value.

Another risk is misaligned incentives. If teams are measured only on throughput, they may over-rely on agents without attention to quality. Align metrics across human and agent contributions and include quality and fairness checks. Governance should have the authority to pause or roll back agents that do not meet standards. Embedding remediation pathways reduces operational exposure while preserving the speed of innovation in the agentic AI future of work.

Data practices are a critical area. Agents require data for decision making and training. Implement strict data minimization, access controls, and logging. Anonymize or synthesize sensitive data for training when possible. Ensure that data retention policies are clear and enforced. Combining strong data hygiene with continuous monitoring prevents misuse and protects employee and customer trust as the agentic AI future of work scales.

Measuring success and iterating beyond Q3

Define a measurement plan before deployment. Include leading indicators that predict long-term value and lagging indicators that show realized ROI. Leading indicators include agent accuracy, escalation rates, and time to autonomous decision. Lagging indicators include cost per transaction, customer satisfaction, and employee engagement. Review these metrics weekly during the first 90 days and then monthly as the program stabilizes. Sustaining the agentic AI future of work requires this cadence to keep the program aligned.

Iterate based on feedback loops. Use a continuous improvement cycle in which human operators provide corrections that feed into retraining and rule updates. Maintain a backlog of improvements prioritized by impact and risk. Over time, this cycle raises agent capability and reduces reliance on human oversight. Successful organizations create internal centers of excellence that capture patterns and templates so new teams can adopt proven designs without repeating mistakes on their agentic AI future of work journey.

Plan for scaling beyond Q3. Once pilots demonstrate durable value, create a roadmap for expanding agentic responsibilities across additional workflows, regions, and business units. Anticipate increased demand for integration and governance resources, and budget accordingly. Mature programs adopt platform-level standards for agent development and lifecycle management, which enables predictable scale and reduces duplicated effort across an organization committed to the agentic AI future of work.

Conclusion

Agentic AI is no longer a theoretical element of enterprise strategy. In Q3 2025 the agentic AI future of work is manifest in operational redesigns that deliver measurable results. The distinction between automation and agency matters. Agentic systems act proactively, coordinate across systems, and require new modes of oversight. For HR and Ops teams the practical implication is a shift from executing tasks to orchestrating mixed human-agent workflows. That shift requires new role definitions, governance frameworks, skills programs, and measurement systems to ensure the technology amplifies human capability rather than introducing unmanaged risk.

This quarter, organizations that treat agentic adoption as an operations redesign rather than a tech experiment will take the lead. The path begins with focused workflow audits, narrow pilots in shadow mode, and rigorous instrumentation. Clear decision boundaries and a governance forum reduce operational risk. Investing in role-based training and defining career pathways that include agent supervision helps employees see agents as colleagues with predictable benefits. Measurement plans that blend leading indicators and business outcomes make it possible to show value quickly and reinvest in scaling the capability.

Operational leaders must also account for ethics, data governance, and incentive alignment. Agents that touch hiring, evaluation, or customer outcomes demand extra transparency and review. Data practices must be airtight to protect privacy and enable safe retraining. Aligning incentives across teams prevents short-term optimizations that damage system-level outcomes. By treating these topics as integral to deployment, HR and Ops teams create durable trust and avoid costly rollbacks when agentic systems are scaled.

Finally, the organizations that succeed will be those that view agentic systems as partners in work design. They will embed agents into org charts, design career ladders that reward agent orchestration skills, and build governance that balances speed with safety. The agentic AI future of work is here, and the next quarter is a decisive window. By following a clear playbook of assessment, design, implementation, and governance, teams can move from pilots to production with confidence and create lasting operational advantage.