Continuous workflow optimization is becoming essential for organizations that want to keep processes efficient, resilient, and aligned with evolving business goals. In this guide you will find a practical, actionable approach to designing closed-loop systems where agents learn from operational telemetry and KPI feedback. The goal is to enable automated and human-assisted adjustments that improve throughput, reduce waste, and maintain service quality through Q4 and beyond. This post covers the architecture, instrumentation, learning mechanisms, KPI design, rollout strategies, and governance needed to embed continuous feedback into your workflows.

You will learn how to collect the right data, how agents should interpret signals, how to run experiments safely, and how to turn insights into process changes that maintain compliance and explainability. The emphasis is on systems that iterate frequently without creating operational risk. The techniques apply to automation pipelines, RPA, data processing, customer support routing, and hybrid human-plus-machine workflows. Throughout the article you will find step-by-step recommendations and checklists you can apply immediately to start a continuous workflow optimization program.

Why closed-loop feedback is the foundation of continuous workflow optimization

Closed-loop feedback turns static automation into adaptive systems that learn from real operations. Instead of deploying a workflow and letting it degrade over time, closed-loop systems observe outcomes, compare them to targets, and trigger adjustments. This is central to continuous workflow optimization because it shortens the time between signal and action. When telemetry and KPI feedback flow back into decision logic, systems self-correct and teams can focus on higher-value improvements.

There are practical benefits to adopting closed-loop feedback. First, responsiveness increases: when a performance regression is detected, the system can either alert human operators or automatically apply a guarded mitigation. Second, resources are used more efficiently, because feedback about latency, error rates, and utilization enables dynamic scaling and routing. Third, quality and compliance improve because metrics tied to policy and safety are continuously monitored and enforced. These outcomes make continuous workflow optimization measurable and defensible.
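The observe, compare, and act cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a production controller: it assumes a single KPI where lower is better (such as p95 latency in milliseconds), and the names `Action` and `evaluate_kpi` as well as both thresholds are hypothetical. Small regressions are treated as noise, moderate ones trigger a guarded automatic mitigation, and large ones are escalated to a human operator.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    MITIGATE = "mitigate"  # guarded, automatic, reversible corrective action
    ALERT = "alert"        # escalate to a human operator


def evaluate_kpi(observed: float, target: float,
                 tolerance: float = 0.05, escalate_at: float = 0.25) -> Action:
    """Compare an observed KPI (lower is better, e.g. p95 latency) to its target.

    tolerance: relative regression ignored as noise (hypothetical default).
    escalate_at: relative regression considered too large to auto-correct.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    regression = (observed - target) / target  # relative overshoot vs. target
    if regression <= tolerance:
        return Action.NONE
    if regression < escalate_at:
        return Action.MITIGATE
    return Action.ALERT


# One pass of the loop per observation: observe -> compare -> act.
for observed_ms in (410.0, 460.0, 600.0):
    print(observed_ms, evaluate_kpi(observed_ms, target=400.0).value)
```

Routing the largest regressions to people rather than to automation reflects the idea that closed-loop feedback should automate routine corrections and leave judgment calls to operators.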

Closed-loop feedback is not about replacing human judgment. It is about giving operators better situational awareness and automating routine corrective actions. To succeed you need a clear feedback taxonomy, reliable telemetry, well-designed KPIs, and controlled learning loops that prioritize safety and traceability. Without these elements, feedback can produce oscillation or unsafe adaptations that undermine the benefits of continuous workflow optimization.

Core architecture for continuous workflow optimization

Designing a closed-loop system starts with a modular architecture that separates observation, decision, and execution. Observation collects telemetry and event data. Decision systems evaluate that data and select actions. Execution applies changes to workflows, feature flags, or resource allocations. This separation enables independent scaling and governance and supports repeatable testing strategies that are essential for continuous workflow optimization.

Key components of a closed-loop architecture include:

Telemetry layer: Collects logs, metrics, traces, and business events. It standardizes and enriches data so downstream systems can reason about it.

Data pipeline and storage: Aggregates telemetry into time series stores, event streams, and feature stores. This layer supports historical analysis, model training, and real-time evaluation.

Decision engine: Hosts policies, rules, and learned models. It evaluates signals against KPIs and recommends or enacts actions.

Action layer: Executes changes via APIs, orchestration platforms, and feature flags. It maintains audit trails and rollback capabilities.

Human-in-the-loop interfaces: Provide approvals, context, and overrides for actions that require human judgment.

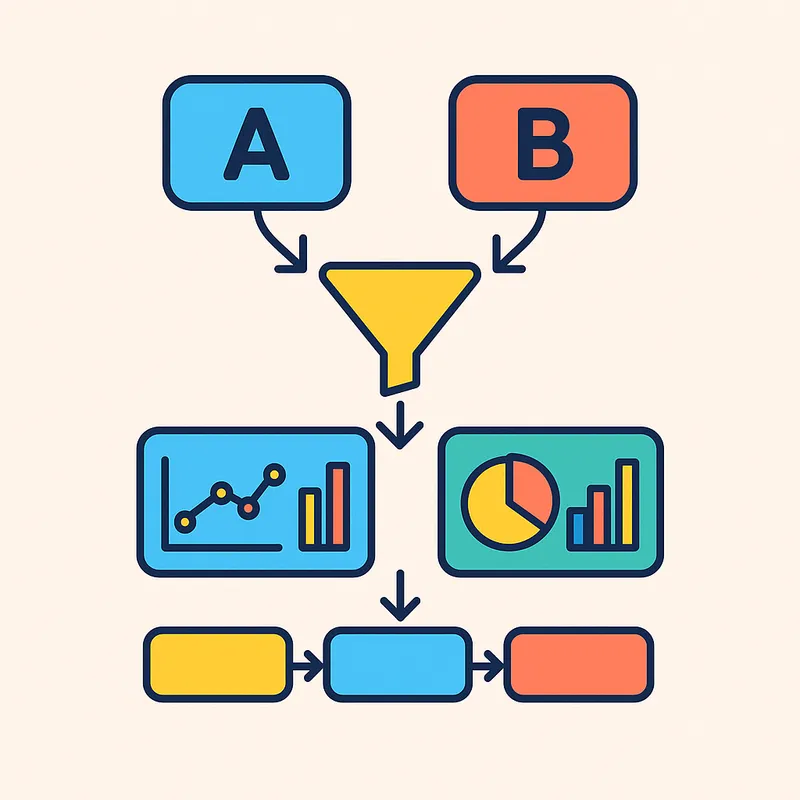

Experiment and evaluation platform: Runs A/B tests and controlled rollouts to validate improvements before wide release.

The architecture must be resilient and observable. For continuous workflow optimization, the decision engine should expose rationale and confidence scores to support debugging and post-action analysis. Traceability from telemetry to the executed action and the resulting KPI change is essential so teams can learn from each iteration and avoid repeating mistakes.
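One way to keep observation, decision, and action separate while preserving traceability is to pass every decision through a record that captures what was observed, what was done, why, and with what confidence. The sketch assumes Python dataclasses as the record format; `DecisionRecord`, `decide`, `run_once`, the `scale_out` action, and the confidence heuristic are all hypothetical placeholders for your own decision engine and action layer.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class DecisionRecord:
    """Links the telemetry that was observed to the action taken and its outcome."""
    kpi: str
    observed: float
    action: str
    rationale: str
    confidence: float
    decision_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    timestamp: float = field(default_factory=time.time)
    kpi_after: Optional[float] = None  # filled in once the evaluation window closes


def decide(kpi: str, observed: float, target: float) -> DecisionRecord:
    """Decision layer: a pure function of the observation, so it is easy to test."""
    if observed > target:
        overshoot = (observed - target) / target
        return DecisionRecord(kpi, observed, "scale_out",
                              rationale=f"{kpi} {overshoot:.0%} above target {target}",
                              confidence=min(1.0, 0.5 + overshoot))  # placeholder heuristic
    return DecisionRecord(kpi, observed, "noop",
                          rationale=f"{kpi} within target {target}", confidence=1.0)


def run_once(observe: Callable[[], float], act: Callable[[str], None],
             kpi: str, target: float, audit_log: list) -> DecisionRecord:
    observed = observe()                    # observation layer
    record = decide(kpi, observed, target)  # decision layer
    if record.action != "noop":
        act(record.action)                  # action layer
    audit_log.append(record)                # audit trail for post-action analysis
    return record
```

Because each layer is just a callable, the observation and action layers can be swapped for real telemetry queries and orchestration calls, and `kpi_after` can be filled in later to close the loop from telemetry to action to KPI change.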

Instrumentation strategies to capture the right signals

Instrumentation is the foundation of effective closed-loop feedback. Good telemetry provides the timely, accurate, and contextual signals that decision systems need. Without targeted instrumentation, your continuous workflow optimization program lacks the raw material for learning. Start by mapping high-level KPIs to lower-level signals and events. For example, if your KPI is end-to-end latency, instrument each hop, queue, and processing step that contributes to it.
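As an illustration of decomposing a KPI into signals, the sketch below groups trace spans by correlation ID and splits end-to-end latency into per-hop durations plus an unaccounted remainder (typically queue wait or untraced work). The span format, a dict with `correlation_id`, `hop`, `start`, and `end` in milliseconds, is an assumption for this example rather than any particular tracing standard. It also assumes hops run sequentially and each hop name appears once per request.

```python
from collections import defaultdict


def hop_breakdown(spans):
    """Return end-to-end latency and per-hop durations for each correlation id.

    Assumes sequential hops, so end-to-end latency is earliest start to latest
    end; time not covered by any hop is reported as "unaccounted".
    """
    by_request = defaultdict(list)
    for span in spans:
        by_request[span["correlation_id"]].append(span)

    report = {}
    for cid, items in by_request.items():
        e2e = max(s["end"] for s in items) - min(s["start"] for s in items)
        hops = {s["hop"]: s["end"] - s["start"] for s in items}
        hops["unaccounted"] = e2e - sum(hops.values())  # queue waits, untraced work
        report[cid] = {"e2e": e2e, "hops": hops}
    return report


spans = [
    {"correlation_id": "r1", "hop": "ingest", "start": 0, "end": 40},
    {"correlation_id": "r1", "hop": "enrich", "start": 55, "end": 120},
    {"correlation_id": "r1", "hop": "route", "start": 120, "end": 150},
]
print(hop_breakdown(spans)["r1"])
```

A large "unaccounted" share is itself a useful signal: it usually points to a queue or processing step that still needs instrumentation.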

Practical instrumentation tips:

Define KPI-to-signal mappings so every KPI can be decomposed into observable metrics and events.

Implement structured logging with consistent fields for correlation IDs, user IDs, version IDs, and timestamps.

Capture sampled traces for distributed processes so root cause analysis is possible without excessive overhead.

Emit business events that represent outcomes, not just system states. Outcomes are what drive learning for continuous workflow optimization.

Tag telemetry with feature and model version metadata to link behavioral changes to code and model updates.
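The tips above can be combined into a single outcome-oriented event. This is a hedged sketch that assumes JSON lines as the log format. The field names, the event type, and the version strings are hypothetical and would normally be injected by your build and deployment tooling.

```python
import json
import time
import uuid

# Hypothetical version identifiers; in practice inject these at build/deploy time.
SERVICE_VERSION = "routing-svc@1.4.2"
MODEL_VERSION = "triage-model@2024-09-01"


def make_event(event_type: str, correlation_id: str, outcome: dict) -> str:
    """Build one structured, outcome-oriented business event as a JSON line."""
    event = {
        "event_type": event_type,
        "correlation_id": correlation_id,    # joins this event to traces and logs
        "timestamp": time.time(),
        "service_version": SERVICE_VERSION,  # links behavior to code changes
        "model_version": MODEL_VERSION,      # links behavior to model changes
        "outcome": outcome,                  # what happened, not just system state
    }
    return json.dumps(event, sort_keys=True)


line = make_event("ticket_resolved", uuid.uuid4().hex,
                  {"resolved": True, "time_to_resolution_s": 842})
print(line)
```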

Telemetry must be accessible in near real time for fast-moving loops and retained historically to support offline training and root cause analysis. For many teams this means combining streaming platforms with long-term stores and a feature store that serves production models. Prioritize consistent schemas and retention policies to prevent data debt that will slow down optimization efforts.

Designing learning loops and agent behaviors

Learning loops are the mechanism by which systems improve over time. A learning loop observes performance, computes adjustments, evaluates the impact, and updates the decision model or policy. For continuous workflow optimization, the loop must be safe, measurable, and incremental. Design loops with explicit objectives, constraints, and evaluation windows so they converge rather than oscillate.
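A simple way to make a loop converge rather than oscillate is to combine a deadband (ignore small errors), a partial correction per evaluation window, a cap on step size, and hard bounds on the parameter. The `BoundedTuner` below is an illustrative sketch of that idea, essentially a clamped proportional controller. The gain, deadband, bounds, and the assumed linear `sensitivity` of the metric to the parameter are hypothetical values you would calibrate for your own workflow.

```python
from dataclasses import dataclass


@dataclass
class BoundedTuner:
    """Incremental parameter adjuster designed to converge rather than oscillate.

    deadband: relative error ignored entirely (no chasing noise)
    gain: fraction of the estimated correction applied per evaluation window
    max_step: hard cap on how far one adjustment may move the parameter
    lo/hi: safe range the parameter may never leave
    """
    value: float
    target: float
    gain: float = 0.5
    deadband: float = 0.05
    max_step: float = 2.0
    lo: float = 1.0
    hi: float = 64.0

    def update(self, metric: float, sensitivity: float) -> float:
        """Run one loop iteration after an evaluation window closes.

        sensitivity: assumed change in the metric per unit of the parameter
        (negative if raising the parameter lowers the metric).
        """
        if sensitivity == 0:
            raise ValueError("sensitivity must be non-zero")
        error = metric - self.target
        if abs(error) <= self.deadband * self.target:
            return self.value  # inside the deadband: hold steady
        step = -self.gain * error / sensitivity
        step = max(-self.max_step, min(self.max_step, step))  # cap step size
        self.value = max(self.lo, min(self.hi, self.value + step))  # stay in bounds
        return self.value
```

For example, with a queue-wait target of 200 ms, eight workers, and an assumed 20 ms reduction per added worker, an observed 300 ms wait moves the parameter by the capped step of 2.0 rather than the uncapped 2.5, and a later reading of 205 ms falls inside the deadband and leaves it unchanged (a real worker count would be rounded when applied).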

Start with simple policies and gradually introduce learning agents. A recommended progression is:

Rule-based monitoring with alerting and manual remediation.

Automated mitigations with human approval gates.

Policy-driven adjustments using weighted heuristics and feature flags.

Bandit algorithms for low-risk parameter tuning.

Reinforcement learning or adaptive control with strict safety layers, reserved for environments where the risk is acceptable and metrics are dense.
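For the bandit stage, an epsilon-greedy policy is one of the simplest options (Thompson sampling and UCB are common alternatives). The sketch assumes a small, pre-approved set of candidate values, here hypothetical batch sizes, and a binary reward such as whether a request met its SLA. The success rates in the simulation are made up and exist only to exercise the code.

```python
import random


class EpsilonGreedy:
    """Epsilon-greedy bandit over a few pre-approved parameter values (arms)."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.counts = {a: 0 for a in self.arms}
        self.means = {a: 0.0 for a in self.arms}
        self.rng = random.Random(seed)

    def select(self):
        untried = [a for a in self.arms if self.counts[a] == 0]
        if untried:
            return untried[0]                                # try every arm once
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)                # explore
        return max(self.arms, key=lambda a: self.means[a])   # exploit best estimate

    def update(self, arm, reward):
        self.counts[arm] += 1
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]  # running mean


# Simulated environment: SLA success probability per batch size (hypothetical).
true_success = {16: 0.60, 32: 0.90, 64: 0.50}
env = random.Random(7)
bandit = EpsilonGreedy(true_success, epsilon=0.1, seed=42)
for _ in range(5000):
    arm = bandit.select()
    reward = 1.0 if env.random() < true_success[arm] else 0.0
    bandit.update(arm, reward)
print(bandit.counts)
```

Restricting arms to pre-approved values keeps the action space safe: the agent can only choose among settings that have already been reviewed.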

Agents should expose confidence scores and be constrained to safe action spaces. Implement circuit breakers and rate limits to prevent runaway behavior. Every action taken by an agent must be observable and reversible. For continuous workflow optimization, favor small, frequent adjustments with rapid measurement over large, infrequent changes whose effects are hard to attribute.
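These safeguards can be combined in a small gate that sits in front of the action layer. This sketch assumes three hypothetical policies: a confidence floor below which actions go to a human, a sliding-window rate limit, and a circuit breaker that halts automation after consecutive failed or rolled-back actions and stays open until a person resets it. The clock is injectable so the behavior can be tested deterministically.

```python
import time
from collections import deque


class ActionGuard:
    """Gate automated actions with a confidence floor, a rate limit, and a breaker."""

    def __init__(self, min_confidence=0.8, max_actions=5, window_s=3600.0,
                 max_failures=3, clock=time.monotonic):
        self.min_confidence = min_confidence
        self.max_actions = max_actions    # allowed actions per sliding window
        self.window_s = window_s
        self.max_failures = max_failures  # consecutive failures that trip the breaker
        self.clock = clock
        self.recent = deque()             # timestamps of executed actions
        self.consecutive_failures = 0
        self.open = False                 # open breaker = automation halted

    def allow(self, confidence):
        """Return 'execute', 'needs_human', or 'blocked'."""
        if self.open:
            return "blocked"
        if confidence < self.min_confidence:
            return "needs_human"
        now = self.clock()
        while self.recent and now - self.recent[0] > self.window_s:
            self.recent.popleft()         # drop actions outside the window
        if len(self.recent) >= self.max_actions:
            return "blocked"
        self.recent.append(now)
        return "execute"

    def report(self, success):
        """Record the measured outcome of an executed action."""
        if success:
            self.consecutive_failures = 0
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.max_failures:
            self.open = True              # stays open until a human calls reset()

    def reset(self):
        self.open = False
        self.consecutive_failures = 0
```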

Logging the decision path and model inputs for every automated action creates a rich dataset for improving agents. Use that dataset to retrain models and to refine features. Incorporate domain knowledge through features and reward shaping so agents align with human intentions. Continuous improvements rely on hybrid systems where models propose actions and humans refine goals and constraints.

KPIs, experiments, and measurement for robust optimization

Good KPIs are actionable, decomposable, and tied to real business value. For continuous workflow optimization, select primary metrics that reflect end-user outcomes and a set of guardrail metrics that protect system health and compliance. Avoid metric sprawl by prioritizing a small set of leading indicators and lagging outcomes.

Design experiments to validate changes. A rigorous experiment framework supports causal inference and guards against false positives. Use randomized trials where possible. When randomization is not feasible, use difference-in-differences or matched cohorts. For every experiment, define the hypothesis, the primary metric, guardrails, statistical significance thresholds, and rollout criteria. Track experiment health in real time and abort if guardrails are violated.
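For a randomized trial on a conversion-style metric, a two-proportion z-test is a common starting point. The difference-in-differences estimate is simple arithmetic once you have before and after averages for the treated and comparison groups, and it relies on the assumption that both groups would have followed parallel trends without the change. The sketch uses only the standard library and the normal approximation, so it assumes reasonably large samples. `decide_rollout`, its significance threshold, and the boolean guardrail input are hypothetical simplifications of a real experiment platform.

```python
import math
from dataclasses import dataclass


@dataclass
class Arm:
    successes: int
    trials: int

    @property
    def rate(self) -> float:
        return self.successes / self.trials


def two_proportion_z_test(control: Arm, treatment: Arm):
    """Two-sided z-test for a difference in rates (pooled standard error)."""
    pooled = (control.successes + treatment.successes) / (control.trials + treatment.trials)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control.trials + 1 / treatment.trials))
    z = (treatment.rate - control.rate) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


def decide_rollout(control: Arm, treatment: Arm, guardrails_ok: bool, alpha=0.05) -> str:
    if not guardrails_ok:
        return "abort"                 # guardrail violation overrides everything
    z, p = two_proportion_z_test(control, treatment)
    if p < alpha and z > 0:
        return "roll_out"              # significant improvement
    return "hold"


def diff_in_diff(treat_before, treat_after, ctrl_before, ctrl_after):
    """Difference-in-differences estimate of the treatment effect."""
    return (treat_after - treat_before) - (ctrl_after - ctrl_before)
```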

Common KPI categories for continuous workflow optimization include:

Performance metrics: latency, throughput, error rates.

Cost metrics: compute cost per transaction, storage cost per event.

Quality metrics: accuracy, precision, false positive and false negative rates.

Business outcomes: conversion rate, time to resolution, revenue per user.

Operational health: mean time to detect, mean time to recover, incident frequency.
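Operational health metrics are straightforward to compute once incidents carry start, detection, and recovery timestamps. In this sketch the incident data is hypothetical, and MTTR is measured from incident start to recovery. Some teams measure it from detection instead, so pick one definition and apply it consistently.

```python
from datetime import datetime
from statistics import mean

# (started, detected, recovered) for each incident; hypothetical data.
incidents = [
    ("2024-10-01T10:00", "2024-10-01T10:06", "2024-10-01T10:40"),
    ("2024-10-03T14:00", "2024-10-03T14:02", "2024-10-03T14:20"),
    ("2024-10-07T09:30", "2024-10-07T09:40", "2024-10-07T10:30"),
]


def minutes(a: str, b: str) -> float:
    """Minutes elapsed between two ISO 8601 timestamps."""
    return (datetime.fromisoformat(b) - datetime.fromisoformat(a)).total_seconds() / 60


def operational_health(incidents, period_days: float) -> dict:
    mttd = mean(minutes(start, detected) for start, detected, _ in incidents)
    mttr = mean(minutes(start, recovered) for start, _, recovered in incidents)
    per_week = len(incidents) * 7 / period_days  # incident frequency
    return {"mttd_min": mttd, "mttr_min": mttr, "incidents_per_week": per_week}


print(operational_health(incidents, period_days=14))
```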

When calibrating agents, use evaluation windows appropriate to the metric cadence. For slow-moving business outcomes you may need longer windows and stronger causal inference methods. For system-level metrics, shorter windows allow faster iteration. Maintain a lessons log where experiment outcomes and anomalies are recorded to accelerate organizational learning for continuous workflow optimization.

Governance, safety, and explainability

Persistent optimization without governance can create drift and unintended consequences. Set up governance that balances velocity with safety. Governance should define approval processes for agent capabilities, required monitoring thresholds, audit requirements, and criteria for human overrides. In continuous workflow optimization, governance is not a gate that blocks change; it is scaffolding that ensures continuous change delivers net positive outcomes.
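Approval processes for agent capabilities can be encoded as data so they are reviewable and enforced at runtime. The registry below is a hypothetical example: each capability has a default approval path and a maximum pre-approved change, unknown actions are denied by default, and anything beyond the approved magnitude escalates to a human.

```python
from enum import Enum


class Approval(Enum):
    AUTO = "auto"            # agent may act; audit log only
    HUMAN = "human"          # agent may propose; a human must approve
    FORBIDDEN = "forbidden"  # outside the agent's approved capabilities


# Hypothetical capability registry agreed through the governance process.
CAPABILITIES = {
    "adjust_batch_size": {"approval": Approval.AUTO, "max_change_pct": 20},
    "reroute_queue": {"approval": Approval.HUMAN, "max_change_pct": 100},
}


def check_action(name: str, change_pct: float) -> Approval:
    """Map a proposed action to the approval path required by policy."""
    policy = CAPABILITIES.get(name)
    if policy is None:
        return Approval.FORBIDDEN  # unknown actions are denied by default
    if abs(change_pct) > policy["max_change_pct"]:
        return Approval.HUMAN      # larger than pre-approved: escalate
    return policy["approval"]
```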

Explainability matters for trust and compliance. Every automated decision should be annotated with a reason or a confidence estimate that operators can review. Where regulatory constraints apply, store decision artifacts and provenance. Design playbooks for incident response and rollback so operators can act quickly when a learning loop produces unintended outcomes.

Security and privacy must be embedded into telemetry and learning pipelines. Avoid logging sensitive data in plain text. Use anonymization, hashing, and tokenization where appropriate. Ensure that access controls, encryption at rest and in transit, and data retention policies align with corporate requirements. For continuous workflow optimization, privacy-preserving feature engineering and synthetic data generation can enable learning without exposing personal data.
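As one example of keeping sensitive data out of plain-text logs, the sketch below drops some fields and replaces identifiers with a keyed hash (HMAC-SHA256) before events are logged. A keyed hash is used instead of a plain hash because low-entropy identifiers can be recovered from an unkeyed hash by brute force. The field lists are hypothetical, and in practice the key would come from a secrets manager. Pseudonymization reduces exposure but is not full anonymization.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = {"email", "phone"}  # hypothetical: dropped entirely
PSEUDONYMIZED_FIELDS = {"user_id"}     # hypothetical: replaced with a keyed hash


def scrub(event: dict, key: bytes) -> dict:
    """Return a copy of an event that is safer to log."""
    clean = {}
    for name, value in event.items():
        if name in SENSITIVE_FIELDS:
            continue
        if name in PSEUDONYMIZED_FIELDS:
            digest = hmac.new(key, str(value).encode(), hashlib.sha256).hexdigest()
            clean[name] = digest[:16]  # stable pseudonym, still joinable across events
        else:
            clean[name] = value
    return clean
```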

Operational roadmap to Q4 for continuous improvements

To make steady progress, schedule a pragmatic roadmap with quarterly and monthly milestones. Through Q4, focus on establishing telemetry hygiene, implementing a basic closed-loop cycle, and running a series of controlled experiments that deliver measurable improvements. Prioritize impact and risk reduction so each iteration proves value without destabilizing core operations.

Example roadmap for a three-month Q4 cycle:

Month 1: Instrumentation sprint. Map KPIs, deploy structured logging, and centralize observability. Validate telemetry completeness with targeted audits.

Month 2: Decision and action prototypes. Implement a decision engine that can trigger guarded actions via feature flags. Run the first set of experiments on low-risk parameters.

Month 3: Scale rollout and governance. Expand closed-loop coverage to higher impact workflows, formalize governance, and publish runbooks and SLAs for the optimization program.

Weekly cadences should include metric reviews, experiment planning, and incident retrospectives. Use a lightweight playbook that defines who owns each metric, who approves experiments, and how escalations occur. This structure reduces friction and keeps momentum for continuous workflow optimization.

For long-term success, schedule quarterly architecture reviews to assess model drift, data quality, and technical debt. Allocate time for cleanup and refactoring so hidden costs do not accumulate and erode the return on optimization investments.


Tooling patterns and selection criteria

Choose tools that align with your architecture and your organization's skills. For continuous workflow optimization, look for platforms that offer streaming ingestion, feature stores, model evaluation, policy engines, and experiment management. Prioritize interoperability and open standards so components can be replaced as needs evolve. Avoid vendor lock-in for critical data paths that will be used for learning and governance.

Selection criteria checklist:

Integration capabilities with existing telemetry and orchestration systems.

Support for real-time and batch evaluation of decision logic.

Experimentation capabilities with traffic splitting and rollbacks.

Auditability and provenance for decisions and data.

Security controls and compliance features.

Scalability and cost transparency.

Adopt a hybrid approach where managed services handle the heavy lifting and lightweight open-source or in-house components manage business logic and governance. This pattern accelerates implementation while preserving control over sensitive decisions and data.

Operational playbook and checklists

Operationalizing continuous workflow optimization requires repeatable playbooks. Create checklists for each stage of the loop so teams can rapidly validate assumptions and respond to anomalies. A few essential playbooks are:

Telemetry validation checklist: schema adherence, missing fields, sampling rates, latency of ingestion.

Experiment readiness checklist: hypothesis, sample size calculations, guardrails, monitoring hooks, rollback criteria.

Automated action checklist: precondition checks, safety constraints, audit trail, operator notification.

Incident response checklist: detection thresholds, communication plan, rollback steps, postmortem owner.
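The telemetry validation checklist lends itself to automation. This sketch checks a batch of events against a hypothetical schema for missing fields, wrong types, and late arrival. It approximates ingestion lag as the gap between an event's timestamp and validation time, which is an assumption; sampling-rate checks would compare received counts with expected volumes and are omitted for brevity.

```python
import time

# Hypothetical schema for one event type: field name -> expected Python type.
SCHEMA = {"event_type": str, "correlation_id": str, "timestamp": float}


def validate_batch(events, max_ingest_lag_s=60.0, now=None):
    """Count schema violations and late events in a batch of telemetry."""
    now = time.time() if now is None else now
    report = {"total": len(events), "missing_fields": 0, "type_errors": 0, "late": 0}
    for event in events:
        if any(f not in event for f in SCHEMA):
            report["missing_fields"] += 1
            continue
        if any(not isinstance(event[f], t) for f, t in SCHEMA.items()):
            report["type_errors"] += 1
            continue
        if now - event["timestamp"] > max_ingest_lag_s:  # approximate ingestion lag
            report["late"] += 1
    return report
```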

Embed these checklists in your runbooks and automation so that playbooks are easily executed under pressure. Use templates for experiment definitions and postmortem reports to capture learning. Continuous learning is central to continuous workflow optimization, and checklists ensure knowledge is retained and reused.
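An experiment definition template can also enforce readiness. The sketch below is a hypothetical template that flags missing fields and computes an approximate per-arm sample size for a two-proportion test using the standard normal-approximation formula n = (z_(1-alpha/2) + z_(1-beta))^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2. The field names and defaults are illustrative.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


def sample_size_per_arm(p_control, p_treatment, alpha=0.05, power=0.8):
    """Approximate per-arm sample size for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_treatment) ** 2
    return math.ceil(n)


@dataclass
class ExperimentDefinition:
    name: str
    hypothesis: str
    primary_metric: str
    guardrails: dict        # metric name -> worst acceptable value
    baseline_rate: float
    minimum_effect: float   # smallest absolute lift worth detecting
    rollback_criteria: str
    alpha: float = 0.05
    power: float = 0.8

    def readiness_problems(self):
        """List checklist items that are still missing."""
        required = ("hypothesis", "primary_metric", "rollback_criteria")
        problems = [f"missing {f}" for f in required if not getattr(self, f).strip()]
        if not self.guardrails:
            problems.append("no guardrail metrics")
        return problems

    @property
    def required_sample_per_arm(self):
        return sample_size_per_arm(self.baseline_rate,
                                   self.baseline_rate + self.minimum_effect,
                                   self.alpha, self.power)
```

With a 10% baseline and a 2-point minimum effect at alpha 0.05 and 80% power, this works out to roughly 3,840 units per arm, which is a useful reality check before committing traffic.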

Common pitfalls and how to avoid them

Teams often encounter similar obstacles when building closed-loop feedback systems. Common pitfalls include poor data quality, unclear KPIs, overfitting to transient patterns, and insufficient governance. Each can be mitigated with deliberate practices.

Data quality issues: Enforce schemas, run data quality checks, and implement end-to-end tests that validate telemetry.

Unclear KPIs: Map KPIs to stakeholders and outcomes. Maintain a small set of prioritized metrics and align incentives.

Overfitting and drift: Use proper validation, shadow testing, and drift detection. Retrain models on representative historical windows and monitor production performance.

Governance gaps: Establish approval processes, audit trails, and safety constraints before enabling automated actions at scale.
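For the drift-detection mitigation, the population stability index (PSI) is one common statistic for comparing a feature's production distribution with its reference distribution: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and production proportions in bin i. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but these thresholds are conventions, not standards. The bin edges and samples here are illustrative.

```python
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a reference sample and a production sample of one feature.

    edges: sorted bin boundaries shared by both samples (e.g. reference quantiles).
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v > e for e in edges)] += 1  # index of the bin v falls in
        total = len(values)
        return [max(c / total, eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = list(range(100))
shifted = [v + 50 for v in reference]
print(population_stability_index(reference, reference, [25, 50, 75]))
print(population_stability_index(reference, shifted, [25, 50, 75]))
```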

Address cultural barriers by creating cross-functional teams that include operators, data scientists, and product owners. Continuous workflow optimization requires collaboration across disciplines so the system reflects both technical realities and business priorities.

Getting started checklist

Begin with a focused pilot that targets a high-impact, low-risk workflow. Use the following checklist to launch a pilot program:

Choose a single workflow and define success metrics tied to business outcomes.

Instrument the workflow end to end and validate data quality.

Implement a minimal decision engine that can recommend or apply guarded actions.

Run controlled experiments to validate adjustments and collect learning data.

Establish governance, audit logging, and rollback procedures.

Document lessons and plan a phased expansion to additional workflows.

This incremental path enables teams to demonstrate value quickly and expand the scope of continuous workflow optimization with confidence.

Conclusion

Continuous workflow optimization is a strategic capability that turns static automation into an adaptive advantage. By implementing closed-loop feedback you enable systems to learn from telemetry and KPI outcomes and to improve over time in a controlled, measurable way. Start with clean instrumentation and a modular architecture that separates observation, decision, and action. Build learning loops that are incremental and safe. Design KPIs that reflect real business outcomes and pair them with guardrail metrics that protect operations and compliance.

Operational success depends on governance, explainability, and reproducible playbooks. Teams should prioritize auditability and human oversight while automating low-risk adjustments. Experimentation and rigorous evaluation are the engines of improvement. Use randomized trials where possible and practical alternative designs where randomization is not feasible. Maintain a cadence of experiments, weekly metric reviews, and quarterly architecture checks as part of a disciplined roadmap that carries into Q4 and beyond.

Tooling should focus on interoperability and transparency. Implement a hybrid stack that uses managed services for scale and in-house components for business-critical logic. Always tag signals with version metadata and maintain a lessons log for institutional learning. Organizationally, align cross-functional teams around the most important KPIs and give them the authority to run experiments and apply changes. Start small with a pilot, validate value, then scale the approach across workflows.

Remember that continuous improvement is iterative. Expect early experiments to reveal data gaps and governance needs, and treat those discoveries as progress. Over time you will accumulate a body of learnings that accelerates insight and reduces risk. With disciplined instrumentation, well-designed learning loops, and clear governance, you will create resilient, adaptive workflows that deliver measurable improvements and keep pace with changing customer needs and business priorities.

Continuous workflow optimization is not a one-time project. It is a capability that, when institutionalized, yields compounding returns through faster learning cycles and safer automation. Begin with a focused pilot, instrument thoroughly, iterate with care, and embed continuous feedback into your operational fabric to realize the full benefits of adaptive workflows going into Q4 and beyond.