Introduction

Customer service leaders are under constant pressure to deliver faster, more consistent support while keeping costs under control. A promising path forward is to design autonomous AI agents that manage routine interactions, escalate complex issues, and learn from feedback to raise customer satisfaction. This playbook focuses on the practical steps organizations can take to capture the benefits of autonomous AI agents in customer service operations, from early design decisions to metrics that matter for long term improvement.

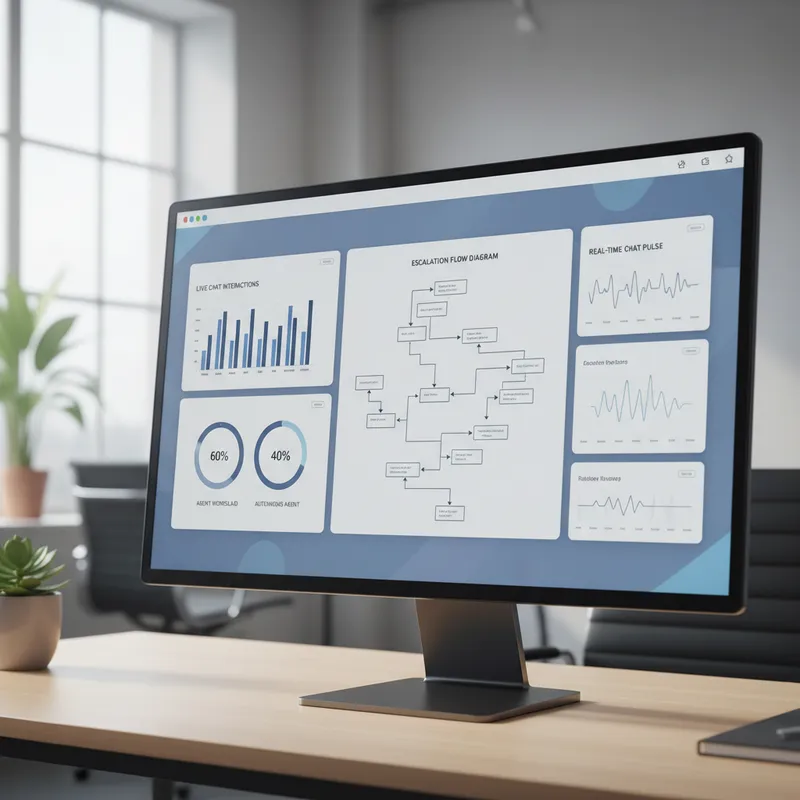

In this guide you will find a strategic framework and concrete implementation patterns that combine policy, architecture, and human oversight. The emphasis is on predictable, measurable outcomes: reducing average handle time, improving first contact resolution, and boosting CSAT. You will also see how to set safe escalation boundaries so agents remain assertive within scope without risking poor customer experiences. Whether you are exploring pilot projects or scaling to enterprise wide automation, these recommendations aim to help you realize the benefits of autonomous AI agents while keeping risk and operational friction low.

Why Autonomous Agents Matter for Customer Service

Autonomous AI agents are changing the service playbook by handling high volume, low complexity tasks that historically consumed agent time. This shift allows human agents to focus on high value interactions such as complex troubleshooting, relationship building, and retention conversations. One of the most compelling benefits of autonomous AI agents is improved staffing elasticity. During peak times an autonomous agent can manage routine queues, reducing reliance on temporary hires or overtime.

Beyond cost and capacity, autonomous agents offer standardization in responses. Consistent, policy aligned answers reduce variance that harms customer trust. Another clear advantage is speed. For straightforward inquiries like order status, password resets, or billing confirmations, autonomous agents can deliver instant responses and close inquiries without human involvement. These performance improvements typically translate into higher CSAT and net retention metrics.

Finally, the benefits of autonomous AI agents extend to knowledge retention and onboarding. Well designed agents encode institutional knowledge and make it accessible 24/7, which shortens ramp time for new human agents and reduces the incidence of incorrect or outdated guidance circulating in the system. This contributes directly to operational resilience.

Core Design Principles for Reliable Autonomous Agents

Designing autonomous agents for customer service requires a balance of autonomy and control. The first principle is explicit scope definition. Map the tasks an agent can and cannot perform. Start with a small set of high volume, low risk interactions. Clear scope prevents overreach, which in turn reduces customer frustration and regulatory exposure.

The second principle is intent clarity. Build robust intent classification and slot filling that prioritizes accuracy over broad coverage early on. A misrouted interaction is worse than a quick escalation. Use confidence thresholds and fallback strategies to ensure the agent gracefully hands off ambiguous cases to human agents. These thresholds should be dynamic and updated as models improve.
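A minimal sketch of threshold-based routing, assuming the NLU layer returns an intent label with a confidence between 0 and 1. The intent names, threshold values, and the `route` helper are illustrative assumptions, not values from this playbook; in practice the thresholds would be tuned from validation data and revisited as models improve.

```python
from dataclasses import dataclass


@dataclass
class IntentPrediction:
    intent: str
    confidence: float  # assumed to be in the range 0.0 to 1.0


# Hypothetical per-intent thresholds; tune these from validation data.
THRESHOLDS = {"order_status": 0.80, "password_reset": 0.85}
# Intents without an explicit threshold must clear a stricter default.
DEFAULT_THRESHOLD = 0.90


def route(prediction: IntentPrediction) -> str:
    """Return 'automate' when confidence clears the threshold, else 'escalate'."""
    threshold = THRESHOLDS.get(prediction.intent, DEFAULT_THRESHOLD)
    return "automate" if prediction.confidence >= threshold else "escalate"


print(route(IntentPrediction("order_status", 0.91)))
print(route(IntentPrediction("billing_dispute", 0.88)))
```

Keeping the thresholds in a plain mapping makes them easy to update without touching the routing logic.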

The third principle is policy as code. Encode compliance, tone, and escalation rules in machine readable policy modules so agents apply the same constraints as human teams. Policies should include data handling rules, consent checks, and sensitive topic detection. Treat policies as living artifacts that can be audited and versioned to meet governance requirements.
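One way to picture policy as code is a list of small, versioned checks that every request must pass. The sketch below is an assumption about how such a module might look; the policy names, version strings, and request fields (`consent_given`, `topic`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    name: str
    version: str
    check: Callable[[dict], bool]  # returns True when the request passes


# Hypothetical policies; real rules would come from legal and compliance teams.
POLICIES = [
    Policy("consent_required", "1.0.0",
           lambda req: bool(req.get("consent_given", False))),
    Policy("no_sensitive_topic", "1.2.0",
           lambda req: req.get("topic") not in {"legal_threat", "medical"}),
]


def evaluate(request: dict) -> list[str]:
    """Return 'name@version' for every policy the request violates."""
    return [f"{p.name}@{p.version}" for p in POLICIES if not p.check(request)]


print(evaluate({"consent_given": True, "topic": "billing"}))
print(evaluate({"topic": "medical"}))
```

Recording the version alongside each violation gives auditors a direct link between a decision and the policy text in force at the time.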

The fourth principle is explainability and traceability. Each autonomous decision should include a lightweight rationale that the system stores with the interaction record. This trace makes it faster for humans to review escalations, train models, and resolve customer disputes. Explainability also helps internal stakeholders trust agent behavior and accelerates adoption.

Architecture and Technical Components

Building autonomous agents requires a modular architecture. At a minimum, you will need a natural language understanding layer, dialogue manager, action execution layer, and feedback loop. Separate these components so you can iterate on language models independently from business rules and backend connectors.

The NLU layer should support both intent detection and entity extraction with confidence scores. Use hybrid approaches that combine rule based recognizers with statistical models to capture edge cases. The dialogue manager orchestrates the conversation flow, applies policy checks, and decides whether to produce an automated response or escalate. It should be stateful and maintain context across messages and channels.

The action execution layer interfaces with downstream systems such as CRM, billing, and order management. Design idempotent actions and explicit confirmation steps for any irreversible operations. Use sandboxed connectors for early testing and a feature flag system to limit the agent to a subset of customers or channels until you are confident in its behavior.
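To illustrate idempotent actions, the sketch below uses an idempotency key so that a retried refund request does not execute twice. `RefundService` is an in-memory stand-in invented for this example, not a real billing API; a production connector would persist keys in durable storage.

```python
class RefundService:
    """In-memory stand-in for a billing connector (hypothetical)."""

    def __init__(self):
        self._results = {}  # idempotency key -> stored result
        self.calls = 0      # number of refunds actually executed

    def refund(self, idempotency_key: str, order_id: str, amount_cents: int) -> dict:
        # A repeated key returns the stored result instead of refunding again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.calls += 1
        result = {"order_id": order_id, "refunded_cents": amount_cents, "status": "ok"}
        self._results[idempotency_key] = result
        return result


service = RefundService()
first = service.refund("conv-42-refund-1", "A1001", 2500)
retry = service.refund("conv-42-refund-1", "A1001", 2500)  # e.g. after a timeout
print(first == retry, service.calls)
```

Deriving the key from the conversation and the intended action, rather than generating it per attempt, is what makes retries safe.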

The feedback loop is essential for continuous improvement. Capture structured feedback from customers and human reviewers after interactions. Feed this data into both supervised retraining pipelines and offline analysis. Prioritize feedback that correlates with CSAT delta and first contact resolution rates so you iterate on the most impactful areas first.

Security, Privacy, and Compliance

Security and privacy cannot be an afterthought. Autonomous agents will handle personal data, and you must ensure they do so in compliance with regulations and your internal policies. Implement data minimization, encryption in transit and at rest, and role based access controls. Mask or redact sensitive fields in both logs and model training data unless explicit consent and legal basis exist.
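As a rough illustration of masking before logging, the sketch below redacts email addresses and card-like digit runs with regular expressions. The patterns are deliberately simple assumptions and will miss some formats and flag some harmless numbers; they are not a substitute for a vetted PII detection service.

```python
import re

# Simplified patterns for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    """Replace emails and card-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)


print(redact("My card is 4111 1111 1111 1111 and email a.b@example.com"))
```

Applying the same function to both log lines and training exports keeps the two paths consistent.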

Auditability is equally important. Maintain immutable logs of agent decisions, the inputs that shaped those decisions, and any model versions used. These logs support incident investigation and regulatory reporting. Finally, consider privacy preserving techniques such as differential privacy or federated learning when training models on sensitive customer data.
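One common way to make a decision log tamper-evident is to chain entries by hash, so that altering an earlier record invalidates everything after it. The sketch below assumes decisions are JSON-serializable dictionaries; the field names are hypothetical, and true immutability would still depend on write-once storage underneath.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first entry


def _digest(prev_hash: str, decision: dict) -> str:
    payload = json.dumps(decision, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, decision: dict) -> dict:
    """Append a decision, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"decision": decision, "prev_hash": prev_hash,
             "hash": _digest(prev_hash, decision)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry fails the check."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["decision"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"action": "refund", "model_version": "v3", "confidence": 0.93})
append_entry(log, {"action": "escalate", "model_version": "v3", "confidence": 0.41})
print(verify(log))
```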

Interaction Strategies and Escalation Patterns

Successful autonomous agents follow well defined interaction patterns that reduce friction and preserve trust. One effective pattern is the triage funnel. The agent first validates identity and intent, then attempts to resolve the request using a compact set of scripted actions and knowledge base lookups. If the confidence score falls below a threshold, the agent escalates to a human with a summarized context packet.

Context packets should include the user profile, the relevant conversation history, extracted entities, and the agent rationale for the decision. This reduces the time human agents spend understanding the issue and increases the likelihood of first contact resolution after escalation. Keep the context packet concise and highlight any actions already attempted to avoid duplication of effort.
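A context packet could be modeled as a small data class with a method that renders a short summary for the human agent. The field names and the summary layout below are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ContextPacket:
    customer_id: str
    customer_tier: str
    intent: str
    confidence: float
    entities: dict
    rationale: str  # why the agent decided to escalate
    actions_attempted: list = field(default_factory=list)
    recent_messages: list = field(default_factory=list)

    def summary(self, max_messages: int = 3) -> str:
        """Render a concise handoff note with only the most recent messages."""
        lines = [
            f"Customer {self.customer_id} ({self.customer_tier})",
            f"Intent: {self.intent} (confidence {self.confidence:.2f})",
            f"Entities: {self.entities}",
            f"Why escalated: {self.rationale}",
            "Already attempted: " + (", ".join(self.actions_attempted) or "nothing"),
        ]
        lines += [f"> {message}" for message in self.recent_messages[-max_messages:]]
        return "\n".join(lines)


packet = ContextPacket(
    customer_id="C-1001", customer_tier="gold", intent="refund_request",
    confidence=0.62, entities={"order_id": "A1001"},
    rationale="confidence below threshold",
    actions_attempted=["order_lookup"],
    recent_messages=["Where is my refund?", "I asked last week."],
)
print(packet.summary())
```

Listing attempted actions explicitly is what lets the human agent skip steps the autonomous agent already tried.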

Another pattern is progressive disclosure. For complex transactions, the agent breaks tasks into small, confirmable steps and verifies each step with the customer. This reduces error rates and improves perceived control. For example, when updating sensitive account settings, an agent should confirm identity, present the requested change, ask for explicit consent, and produce a confirmation message with next steps.

Design escalation boundaries carefully. Use rule based checks for legal or financial thresholds and model driven checks for sentiment and frustration signals. Agents should escalate proactively when they detect customer dissatisfaction or when a required system action returns an error. A smooth handoff includes a human friendly summary and, if the customer prefers, an option to resume the conversation with the agent once the human interaction ends.
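The combination of rule based and model driven checks can be sketched as a single function that returns the first reason to escalate. The refund limit, the sentiment floor, and the assumption that sentiment is scored from -1 to 1 are all hypothetical values chosen for illustration.

```python
from typing import Optional

REFUND_LIMIT_CENTS = 10_000  # hypothetical financial threshold (rule based)
SENTIMENT_FLOOR = -0.5       # hypothetical frustration cutoff (model driven)


def escalation_reason(refund_cents: int, sentiment: float,
                      backend_error: bool) -> Optional[str]:
    """Return why the interaction should escalate, or None to stay automated."""
    if refund_cents > REFUND_LIMIT_CENTS:
        return "financial_threshold"
    if backend_error:
        return "backend_error"
    if sentiment < SENTIMENT_FLOOR:
        return "customer_frustration"
    return None


print(escalation_reason(refund_cents=2500, sentiment=0.1, backend_error=False))
print(escalation_reason(refund_cents=2500, sentiment=-0.8, backend_error=False))
```

Checking the hard rules before the model signals keeps the legal and financial boundaries independent of model quality.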

Training, Continuous Learning, and Feedback Loops

Autonomous agents must learn from new data in a controlled manner. Establish a continuous learning pipeline that separates data collection, labeling, validation, and staged model deployment. Start with a human in the loop where uncertain predictions are flagged for review. Over time move the most reliable patterns to fully autonomous operation.

Collect feedback at two levels. First collect explicit customer feedback such as post interaction CSAT ratings and survey comments. Second collect implicit signals such as reopens, follow up messages, and escalation rates. Combine these signals to compute an impact score for each interaction type. Prioritize retraining on flows that show a high correlation between agent performance and CSAT improvement.

Use active learning to focus annotation effort. When the agent encounters low confidence or contradictory signals, route those cases to human reviewers who label them and produce corrected responses. This targeted labeling reduces annotation cost and accelerates improvement in the most critical areas. Periodically evaluate models on holdout sets and monitor for regressions that could harm customer experience.
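A simple form of active learning is uncertainty sampling: send the least confident predictions to reviewers first, up to a labeling budget. The sketch below assumes each prediction is a dictionary with `id` and `confidence` keys; the 0.7 cutoff is an illustrative assumption.

```python
def select_for_review(predictions: list, budget: int, threshold: float = 0.7) -> list:
    """Pick up to `budget` predictions below `threshold`, least confident first."""
    uncertain = [p for p in predictions if p["confidence"] < threshold]
    uncertain.sort(key=lambda p: p["confidence"])
    return uncertain[:budget]


predictions = [
    {"id": 1, "confidence": 0.95},
    {"id": 2, "confidence": 0.40},
    {"id": 3, "confidence": 0.65},
    {"id": 4, "confidence": 0.55},
]
queue = select_for_review(predictions, budget=2)
print([p["id"] for p in queue])
```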

Finally, apply feature engineering that captures conversation dynamics. Include features such as time to first response, number of clarifying questions, sentiment trend, and number of backend calls. These features help models differentiate between fast simple fixes and interactions that will require escalation, improving decision quality over time.

Operational Playbook and Governance

Operationalizing autonomous agents requires a governance framework that aligns cross functional teams on objectives, roles, and success criteria. Start by forming a core operating committee with representatives from customer service, legal, security, data science, and product management. This group signs off on scope, escalation rules, and rollout plans.

Define service level objectives that reflect both speed and quality. Typical SLOs include target resolution rates for autonomous interactions, maximum acceptable escalation latency, and minimum CSAT for interactions handled autonomously. Tie part of the human and AI team incentives to these SLOs to ensure shared responsibility. Measure both absolute performance and delta from baseline to capture incremental value.

Run pilots with clear guardrails. Use feature flags and canary releases to limit the agent to a subset of customers or channels. Monitor key health metrics such as false positive escalation, failed backend calls, and customer complaint trends. Maintain a rapid rollback plan that includes communication templates for customers and internal stakeholders in case of a broad customer impact.
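A canary rollout can be approximated by hashing each customer into a stable bucket from 0 to 99 and enabling the agent for buckets below the rollout percentage. The `in_canary` helper and the salt value are assumptions for this sketch; most teams would rely on their existing feature flag system instead.

```python
import hashlib


def in_canary(customer_id: str, rollout_percent: int,
              salt: str = "agent-pilot-v1") -> bool:
    """Deterministically assign a customer to the canary group."""
    digest = hashlib.sha256(f"{salt}:{customer_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in the range 0..99
    return bucket < rollout_percent


# The same customer always gets the same answer for a given percentage,
# and raising the percentage only adds customers, never removes them.
print(in_canary("cust-123", 10), in_canary("cust-123", 100))
```

Rolling back is then a matter of setting the percentage to zero, which removes every customer from the pilot at once.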

Regularly audit performance and policy compliance. Schedule periodic reviews where the committee examines representative transcripts, model decisions, and incident reports. Rotate members of the review team to maintain objectivity and surface systemic issues. Use these audits to refine policy modules and to prioritize technical debt for the engineering team.

Key Metrics and Measuring Impact

To demonstrate the benefits of autonomous AI agents you must measure outcomes that matter to the business. Start with primary metrics such as CSAT, first contact resolution, average handle time, and cost per contact. Track these metrics both for autonomous handled interactions and for interactions that required human escalation.

Secondary metrics help diagnose issues. Monitor confidence distribution, escalation reasons, repeat contacts, and completion rate for agent initiated actions. Segment metrics by channel, customer tier, and interaction type to spot areas where the agent performs well and where additional training or policy work is needed.

A recommended approach is to use an impact matrix. On the x axis list interaction complexity, and on the y axis list volume. Prioritize high volume, low complexity cells for full automation. For medium complexity, low volume cells consider assisted automation. This prioritization ensures you capture the most immediate benefits of autonomous AI agents while managing risk.
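The two cells described above can be written as a small lookup. The function below encodes only those two recommendations; the fallback for every other cell is an assumption, since the matrix description does not prescribe a treatment for them.

```python
def recommend(volume: str, complexity: str) -> str:
    """Map an impact-matrix cell to an automation approach."""
    if volume == "high" and complexity == "low":
        return "full automation"
    if volume == "low" and complexity == "medium":
        return "assisted automation"
    # Assumption: cells not covered by the guidance get a manual review.
    return "review case by case"


for cell in [("high", "low"), ("low", "medium"), ("high", "high")]:
    print(cell, "->", recommend(*cell))
```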

Finally, compute business value using a simple cost model. Quantify savings from deflected contacts, reduced training needs, and increased agent productivity. Combine quantitative savings with qualitative benefits such as faster response times and improved consistency to build the case for scaling. Present results in a way that links agent performance to revenue retention and lifetime value improvements.
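A simple cost model of this kind might add the three savings sources and subtract what the agent costs to run. Every input name and every figure in the example call below is an illustrative assumption, not data from a real deployment.

```python
def monthly_net_savings(deflected_contacts: int,
                        human_cost_per_contact: float,
                        agent_cost_per_contact: float,
                        training_savings: float,
                        productivity_hours_saved: float,
                        loaded_hourly_cost: float,
                        platform_cost: float) -> float:
    """Net monthly savings: deflection + training + productivity - platform cost."""
    deflection = deflected_contacts * (human_cost_per_contact - agent_cost_per_contact)
    productivity = productivity_hours_saved * loaded_hourly_cost
    return deflection + training_savings + productivity - platform_cost


# Illustrative inputs only.
print(monthly_net_savings(
    deflected_contacts=10_000,
    human_cost_per_contact=6.0,
    agent_cost_per_contact=0.5,
    training_savings=2_000.0,
    productivity_hours_saved=300.0,
    loaded_hourly_cost=30.0,
    platform_cost=15_000.0,
))
```

Keeping each term separate makes it easy to show stakeholders which source of value dominates and how sensitive the result is to each input.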

Implementation Roadmap and Practical Steps

Begin with discovery and process mapping. Identify the top interaction categories by volume and business impact. For each category, document the current workflow, data dependencies, policy constraints, and known failure modes. This mapping will inform the technical scope and the connectors you need to build.

Next run a feasibility assessment. For each mapped category, evaluate whether the interaction can be resolved with scripted logic, needs generative language capabilities, or requires backend orchestration. Estimate development effort and potential risk. Prioritize categories with clear success criteria and low regulatory complexity.

Build a minimum viable agent for one channel and one use case. Implement clear acceptance criteria, including target CSAT, maximum escalation rate, and response accuracy thresholds. Run the pilot with a small set of customers and collect both quantitative metrics and qualitative feedback. Use the pilot to refine intent models, escalation rules, and conversation templates.

After validating the pilot, scale horizontally to additional use cases and channels. Maintain a strong governance cadence during scale up to ensure policy alignment and to manage capacity for human reviews. Invest in automation for testing and monitoring so that each new capability can be deployed with confidence.

Change Management and Agent Adoption

Technical success is not enough if people do not adopt the system. Communicate transparently with frontline teams about the role of autonomous agents and how they will complement human work. Provide training that helps employees understand agent behavior and teaches them how to handle escalations efficiently.

Create feedback channels where human agents can flag problematic interactions and suggest improvements. Reward teams for collaborating with AI systems to improve customer outcomes. Highlight success stories where agents improved service speed or freed humans to resolve complicated issues that led to customer renewals.

Measure employee sentiment alongside customer metrics. A positive experience for human agents accelerates adoption and reduces resistance. Interpret agent adoption as part of a broader workforce transformation that includes training, role redesign, and performance management adjustments.

Risks, Mitigations, and Ethical Considerations

Deploying autonomous agents introduces risks such as incorrect advice, data exposure, and customer frustration. Mitigate these risks with layered defenses. Use conservative confidence thresholds, mandatory human review for high risk operations, and real time monitoring for anomalous behavior. Create incident response playbooks that include immediate rollback and customer communication steps.

Ethical considerations include transparency and user consent. Inform customers when they are interacting with an autonomous agent and provide an easy option to reach a human. Respect customer preferences for communication channels and data use. When models improve over time, communicate relevant changes to stakeholders so expectations remain aligned.

Finally, address bias and fairness. Test agents across demographic and linguistic segments to ensure equitable performance. Maintain representative training datasets and evaluate performance gaps regularly. When disparities are identified, prioritize corrective labeling and model retraining rather than relying on after the fact adjustments.
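Testing across segments can start with something as simple as comparing resolution rates per segment and tracking the largest gap. The sketch below assumes each interaction record has a `segment` label and a boolean `resolved` flag; both field names and the sample data are hypothetical.

```python
from collections import defaultdict


def resolution_rate_by_segment(interactions: list) -> dict:
    """Compute the share of resolved interactions for each segment."""
    totals = defaultdict(int)
    resolved = defaultdict(int)
    for item in interactions:
        totals[item["segment"]] += 1
        if item["resolved"]:
            resolved[item["segment"]] += 1
    return {segment: resolved[segment] / totals[segment] for segment in totals}


def max_gap(rates: dict) -> float:
    """Largest difference in resolution rate between any two segments."""
    return max(rates.values()) - min(rates.values())


sample = [
    {"segment": "en", "resolved": True},
    {"segment": "en", "resolved": True},
    {"segment": "en", "resolved": True},
    {"segment": "en", "resolved": False},
    {"segment": "es", "resolved": True},
    {"segment": "es", "resolved": False},
]
rates = resolution_rate_by_segment(sample)
print(rates, max_gap(rates))
```

A gap that persists across review periods is a signal to prioritize labeling and retraining for the weaker segment.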

Conclusion

Autonomous AI agents offer a meaningful pathway to improve customer service outcomes by handling routine tasks at scale while enabling human agents to focus on higher value work. The benefits of autonomous AI agents are wide ranging and include reduced operational costs, faster response times, improved consistency, and the ability to scale support coverage without linear increases in headcount. Realizing these benefits requires discipline in design, a modular architecture, robust governance, and continuous learning frameworks. By starting with narrow, well defined use cases and expanding through data informed iterations, organizations can manage risk while delivering measurable value.

Successful deployments emphasize controlled autonomy. Define clear scope and escalation rules, instrument the system for explainability and auditing, and integrate human reviewers early in the learning loop. Operational playbooks that align service level objectives across teams ensure that autonomous agents remain focused on outcomes that matter, such as first contact resolution and CSAT improvement. These playbooks should also codify policies as code and maintain immutable logs that support both compliance and continuous improvement efforts.

Measurement is central to scaling. Track both primary indicators like CSAT, handle time, and escalation latency and secondary diagnostics such as model confidence, repeat contact rates, and sentiment dynamics. Use an impact matrix to prioritize work that maximizes return on effort. Complement quantitative analysis with qualitative review sessions and audits so you capture edge cases and emerging risk patterns early.

Finally, do not underestimate the human and organizational elements. Communicate changes clearly to frontline teams, incorporate their feedback into model refinement, and align incentives so that humans and agents cooperate effectively. When organizations treat autonomous agents as partners that augment human capabilities rather than replacements, they unlock the full potential of automation to elevate customer experience.

If you follow the principles and practical steps in this playbook, you can harness the benefits of autonomous AI agents to deliver faster, more reliable customer service that improves satisfaction while controlling cost. The journey requires cross functional collaboration, well structured experiment cycles, and a commitment to responsible deployment, but the operational and strategic gains make it a compelling investment for service organizations ready to scale.