In today's fast-paced business landscape, teams need dynamic workflow optimization to stay agile and efficient as requirements shift. This blueprint outlines a concrete, production-ready path for implementing continuous, model-driven workflow optimization that adapts to changing business signals without interrupting live operations. The A.I. PRIME approach focuses on measurable objectives, pragmatic tooling, and clear operational roles so teams can deploy dynamic workflow optimization with minimal risk and maximum business impact. Learn more in our post on Template Library: Ready‑to‑Deploy Agent Prompts and Workflow Blueprints for Q3 Initiatives.

The goals are simple but demanding. The system must observe performance in real time, learn from business events, update models, and apply safe policy changes to live workflows. The method in this blueprint covers architecture decisions, data pipelines, model lifecycle controls, testing, and deployment strategies that reduce regressions and accelerate value delivery. By combining rule-based safety layers with model-informed recommendations, you can deploy dynamic workflow optimization that is both autonomous and auditable.

This introduction sets expectations for CTOs, platform engineers, and automation leads. The following sections provide step-by-step guidance, templates for the required components, and a playbook for rollout, monitoring, and governance. Read on to discover how to turn model-driven insights into safely applied workflow changes and how to measure the business outcomes of every optimization you deploy.

Why deploy dynamic workflow optimization now

Deploy dynamic workflow optimization to move from static automation to adaptive orchestration that reacts to actual business signals. Static workflow rules break down as volume patterns shift, seasonal events occur, or new integrations change latency and error characteristics. Dynamic optimization lets workflows evolve continually in production based on fresh telemetry and outcomes. This reduces manual tuning and keeps automation aligned with business goals. Learn more in our post on Continuous Optimization: Implement Closed‑Loop Feedback for Adaptive Workflows.

There are three core motivations for teams that decide to deploy dynamic workflow optimization. First, it improves throughput and cost efficiency by reducing wasted steps and rework. Second, it enhances customer experience because decision points can adapt to live context. Third, it lets leaders measure the impact of changes through data-driven experiments rather than intuition. Each motivation maps to observable metrics, which makes it easier to justify investment in model-driven optimization.

Adopting this capability also changes organizational rhythm. Teams must shift from one-off rule updates to a continuous improvement loop that blends SRE practices, AIOps, and product analytics. That shift requires new roles and clearly defined service level objectives. When you deploy dynamic workflow optimization successfully, you should see faster cycle times, fewer exceptions, and improved alignment between automation and business outcomes.

Core components of the A.I. PRIME blueprint

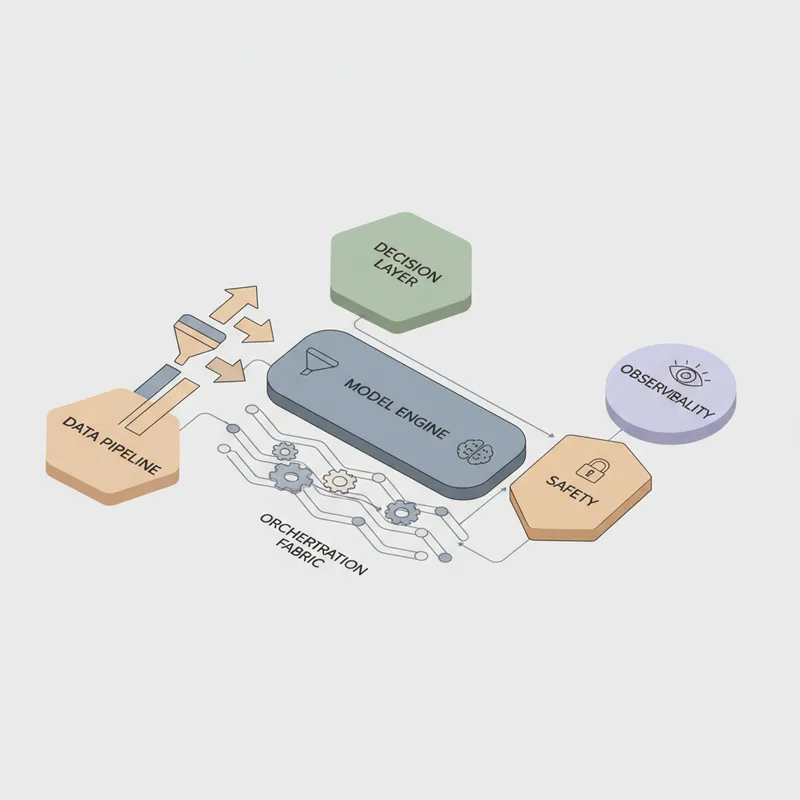

The A.I. PRIME blueprint decomposes dynamic workflow optimization into modular components so you can iterate safely. The six core components are the data pipeline, the model engine, the decision layer, the orchestration fabric, safety and auditing, and the observability stack. Each component has a clear responsibility and standard interfaces, so teams can adopt parts progressively and avoid big-bang migrations. Learn more in our post on Market Map: Top Agentic AI Platforms and Where A.I. PRIME Fits (August 2025 Update).

The data pipeline is the foundation. It ingests events from the orchestration fabric, enriches them with contextual data, persists them to a feature store, and emits labeled examples for model training. To deploy dynamic workflow optimization, you must ensure low-latency, reliable delivery of data while maintaining schema versioning. Strong data contracts reduce surprise regressions when models begin influencing production behavior.

The model engine hosts predictive and prescriptive models that convert features into optimization signals. Some signals are continuous scores; others are discrete action recommendations. The model engine must support online and offline training, validation, and a fast inference API. Include shadow-mode inference so model outputs can be measured against existing behavior before any control-plane change is applied.
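Shadow-mode inference can be sketched in a few lines of Python. This is a minimal illustration, not the blueprint's implementation: `ShadowRecorder`, `handle_with_shadow`, and the `amount` feature are hypothetical names, and the live rule and shadow model are assumed to be plain callables.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ShadowRecorder:
    """Collects paired decisions so the model can be compared with current behavior."""
    records: List[Dict[str, Any]] = field(default_factory=list)

    def agreement_rate(self) -> float:
        """Share of requests where the shadow model matched the live decision."""
        if not self.records:
            return 0.0
        agree = sum(1 for r in self.records if r["live"] == r["shadow"])
        return agree / len(self.records)


def handle_with_shadow(
    features: Dict[str, Any],
    live_rule: Callable[[Dict[str, Any]], str],
    shadow_model: Callable[[Dict[str, Any]], str],
    recorder: ShadowRecorder,
) -> str:
    live_action = live_rule(features)
    try:
        shadow_action = shadow_model(features)
    except Exception:
        shadow_action = None  # a shadow failure must never affect live traffic
    recorder.records.append(
        {"features": features, "live": live_action, "shadow": shadow_action}
    )
    return live_action  # only the existing behavior is ever applied
```

The function always returns the live decision, so the model's output is observed and logged but never acted on. The agreement rate is only one possible comparison; in practice you would also join these records with outcome labels.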

The decision layer translates model outputs into actionable rules. It contains policy enforcement, rate limiters, and fallbacks so that automated changes remain within acceptable bounds. To deploy dynamic workflow optimization safely, implement a human-in-the-loop escalation path and conservative default policies that preserve business continuity even when models drift or fail.
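As a rough sketch of how a decision layer might bound a model recommendation, the Python below clamps a numeric recommendation to policy limits and falls back to a conservative default when the model is unavailable or not confident. The `Policy` fields and the `decide` function are assumptions made for illustration; the blueprint does not prescribe a specific interface.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Policy:
    min_value: float       # lowest value the workflow parameter may take
    max_value: float       # highest value the workflow parameter may take
    max_step: float        # largest change allowed in a single decision
    default: float         # conservative value used when the model is unusable
    min_confidence: float  # below this, the recommendation is ignored


def decide(
    current: float,
    recommended: Optional[float],
    confidence: float,
    policy: Policy,
) -> Tuple[float, str]:
    """Return the value to apply and a short reason code for the audit trail."""
    if recommended is None or confidence < policy.min_confidence:
        return policy.default, "fallback_default"
    # Limit how far a single decision can move the parameter.
    step = max(-policy.max_step, min(policy.max_step, recommended - current))
    # Keep the result inside the absolute bounds.
    bounded = max(policy.min_value, min(policy.max_value, current + step))
    reason = "applied" if bounded == recommended else "clamped"
    return bounded, reason
```

Returning a reason code alongside the value makes it straightforward to log why a recommendation was applied, clamped, or replaced by the default.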

The orchestration fabric executes the workflows and must expose hooks for metrics collection and for applying optimization signals at runtime. This layer should support feature toggles, canary releases, and traffic splitting so optimization strategies can be evaluated incrementally. An observable, extensible orchestration fabric makes it much easier to deploy dynamic workflow optimization without invasive changes to business logic.

Safety and auditing are non-negotiable. Every decision must be traceable back to model inputs and policy rules. Audit logs should record the version of the models used, the decision path, and the exact changes applied to the workflow. Embed audit hooks from the start and provide tooling for investigators to reproduce decisions in a test environment.
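One possible shape for such an audit record is sketched below in Python. The field names and the `build_audit_record` and `verify_audit_record` helpers are hypothetical; the point is that each record carries the model version, inputs, decision path, and applied change, plus a digest that lets a reviewer detect accidental modification.

```python
import hashlib
import json
from datetime import datetime, timezone

# Fields covered by the digest (assumed names, chosen for this example).
_SIGNED_FIELDS = ("workflow_id", "model_version", "inputs", "decision_path", "change")


def _digest(payload: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) gives a stable hash input.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_audit_record(workflow_id, model_version, inputs, decision_path, change) -> dict:
    payload = {
        "workflow_id": workflow_id,
        "model_version": model_version,
        "inputs": inputs,
        "decision_path": decision_path,
        "change": change,
    }
    return {
        **payload,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "digest": _digest(payload),
    }


def verify_audit_record(record: dict) -> bool:
    """Recompute the digest and compare it with the stored one."""
    payload = {name: record[name] for name in _SIGNED_FIELDS}
    return _digest(payload) == record["digest"]
```

A plain hash like this catches corruption and careless edits, not a determined attacker who can recompute it. Tamper resistance would need a keyed signature or an append-only store, which is outside this sketch.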

The observability stack ties everything together by collecting metrics, traces, and logs, and by offering dashboards and alerting that map system behavior to business outcomes. Observability enables continuous validation of your optimizations so teams can detect unintended regressions quickly. When you design this stack, define key performance indicators that reflect user experience, operational cost, and risk.

Step-by-step deployment plan

To deploy dynamic workflow optimization, follow a staged plan that reduces risk and accelerates feedback. The plan is divided into five phases: discovery and objectives, data readiness, model development and validation, safe rollout and automation, and continuous improvement. Each phase contains tangible deliverables and exit criteria so you can measure readiness before progressing.

Phase 1: Discovery and objectives. Start by aligning stakeholders on the target metrics and the value hypothesis for optimization. Identify the workflows with the highest opportunity by looking for manual tuning overhead, high exception rates, or high cost per transaction. Define success criteria and select a small set of measurable objectives to optimize initially. This focused scope helps you deliver faster and demonstrate value early.

Phase 2: Data readiness. Build reliable event capture and storage. Define feature schemas and implement a feature store or a version-controlled dataset repository. Ensure you have labeled outcomes for supervised learning, and plan instrumentation that will capture the new labels created by automation changes. Validate data quality and completeness. Without robust data, you cannot reliably train models that are safe to deploy.

Phase 3: Model development and validation. Train baseline models and evaluate them using offline experiments. Use shadow inference against live traffic to collect comparative metrics without changing behavior. Implement model explainability tooling to surface feature importance and decision rationales. Before moving to rollout, ensure models meet predefined performance thresholds and that a drift detection strategy is in place.

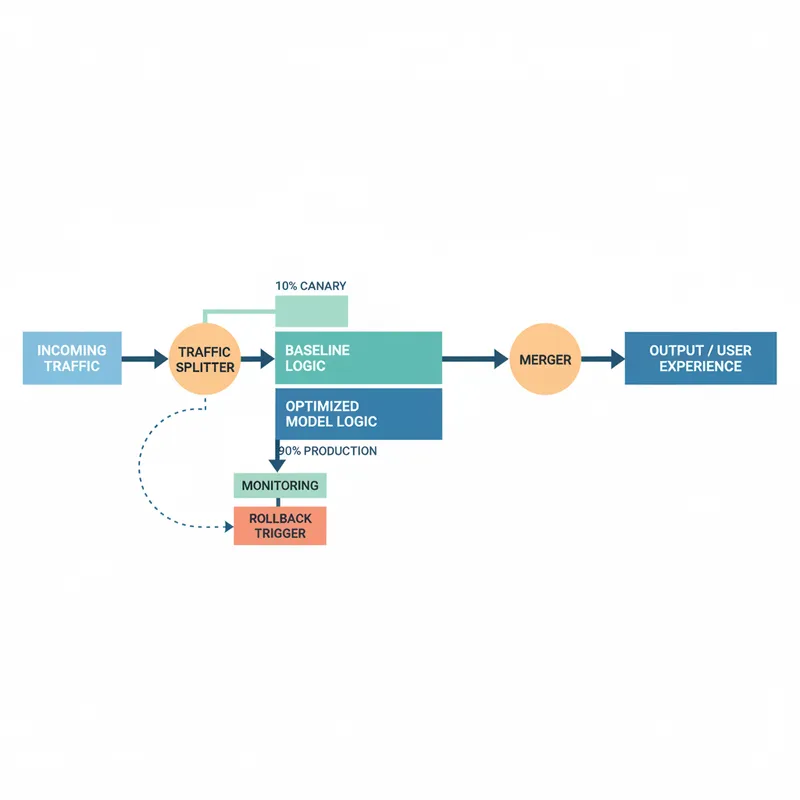

Phase 4: Safe rollout and automation. Start with a canary release or a passive recommendation mode in which operators receive model suggestions but decisions are still manual. Gradually move to partial automation using traffic splitting or rule gating. Enforce hard safety limits in the decision layer, such as a maximum number of changes per hour and predefined rollback triggers. This incremental approach helps teams gain confidence and reduces the blast radius.
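A hard limit such as "maximum changes per hour" can be enforced with a small sliding-window budget. The Python sketch below is one way to do it; the `ChangeBudget` class is a hypothetical helper, and timestamps are passed in explicitly (in seconds) so the behavior is easy to test.

```python
from collections import deque


class ChangeBudget:
    """Allows at most `max_changes` automated changes per sliding time window."""

    def __init__(self, max_changes: int, window_seconds: float = 3600.0):
        self.max_changes = max_changes
        self.window_seconds = window_seconds
        self._applied = deque()  # timestamps of changes inside the current window

    def try_apply(self, now: float) -> bool:
        """Return True and record the change if the budget allows it at time `now`."""
        # Drop changes that have aged out of the window.
        while self._applied and now - self._applied[0] >= self.window_seconds:
            self._applied.popleft()
        if len(self._applied) >= self.max_changes:
            return False  # budget exhausted: caller should skip or escalate
        self._applied.append(now)
        return True
```

In a live system the caller would pass `time.monotonic()` as `now`, and a rejected change would typically be queued for human review rather than silently dropped.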

Phase 5: Continuous improvement. Once automation is operating reliably, turn on continuous learning pipelines that retrain models using fresh labeled data. Apply controlled experiments to test new model versions or policy changes. Maintain a cadence of postmortems and root cause analysis for any regression. Continuous improvement is the core promise of dynamic workflow optimization, so invest in the processes that sustain it.

Design patterns for resilient implementation

Adopt proven design patterns to increase the resilience and maintainability of your optimization system. Patterns such as shadow-mode inference, circuit breakers, progressive rollouts, and multi-model ensembles are effective. Combine these patterns with strong testing and staging practices.

Shadow-mode inference allows you to measure model impact without changing behavior. Use it extensively to build confidence in your models and to collect real-world performance metrics. Circuit breakers provide an emergency stop that can isolate the model decision path if anomalies occur. They should be triggered by well-defined health metrics and tied to rollback policies.
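A circuit breaker around the model decision path might look like the Python sketch below. It is a simplified illustration under stated assumptions: the `ModelCircuitBreaker` name, the consecutive-failure trigger, and the cooldown-based trial request are choices made for this example, and "healthy" stands in for whatever health metric your system defines.

```python
from typing import Optional


class ModelCircuitBreaker:
    """Stops routing decisions to the model after repeated unhealthy results."""

    def __init__(self, failure_threshold: int = 3, cooldown_seconds: float = 60.0):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.consecutive_failures = 0
        self.opened_at: Optional[float] = None  # None means the breaker is closed

    def allow_model(self, now: float) -> bool:
        """True if the model path may be used at time `now` (seconds)."""
        if self.opened_at is None:
            return True
        # After the cooldown, let a trial request through (half-open state).
        return now - self.opened_at >= self.cooldown_seconds

    def record(self, healthy: bool, now: float) -> None:
        """Report the outcome of a model call or health check."""
        if healthy:
            self.consecutive_failures = 0
            self.opened_at = None
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.opened_at = now  # open (or re-open) the breaker
```

While the breaker is open, callers would use the rule-based path instead. A failed trial request re-opens the breaker and restarts the cooldown.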

Progressive rollouts, such as canary deployments and traffic splitting, let you test changes on a subset of traffic. This reduces risk and provides an empirical basis for scaling automation. Multi-model ensembles and fallback models increase robustness. If the primary model is uncertain or produces low-confidence predictions, a simpler rule-based fallback can maintain continuity while the model system recovers.
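Traffic splitting for a progressive rollout is often done by hashing a stable identifier into a bucket. The Python sketch below shows that idea; `in_canary`, the `salt` parameter, and the use of a workflow ID as the key are assumptions for illustration rather than a prescribed mechanism.

```python
import hashlib


def in_canary(workflow_id: str, percent: float, salt: str = "rollout-v1") -> bool:
    """Deterministically assign a workflow to the canary group.

    `percent` is the share of traffic (0 to 100) that should receive the new
    behavior. The same ID always lands in the same bucket for a given salt,
    so raising `percent` only adds workflows and never reshuffles them.
    """
    digest = hashlib.sha256(f"{salt}:{workflow_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0.01% granularity
    return bucket < percent * 100
```

Because assignment is deterministic, a workflow that was in the 5% canary stays in the group when the rollout widens to 20%, which keeps before-and-after comparisons clean. Changing the salt produces an independent split for a different experiment.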

Finally, implement strong integration tests that simulate production-scale inputs and expected outputs. Include synthetic load and long-running scenario tests. A robust testing culture is essential if you plan to deploy dynamic workflow optimization at scale, because automated decision changes can amplify issues quickly if they are not properly validated.

Data strategy and model management

A practical data strategy is central to your ability to optimize workflows continuously. Plan for scalable storage, schema versioning, and a feature catalog that captures lineage and provenance. Ensure that your dataset labeling approach covers edge cases and rare events so models do not learn biased behavior. Strong feature governance prevents silent shifts that degrade model performance.

Model management includes versioning, staging, and retraining pipelines. Build CI/CD for models that automatically runs unit tests, performance regression tests, and fairness checks. Maintain a model registry that records metadata such as the training data snapshot, hyperparameters, validation metrics, and approved deployment environments. Rely on this registry as the source of truth for production models.

Implement automated drift detection that monitors input feature distributions, prediction distributions, and outcome distributions. Alert when divergence crosses defined thresholds, and automatically trigger retraining workflows or human review. Drift detection is a proactive control that reduces surprise failures in production.
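One common way to quantify drift in a single numeric feature is the Population Stability Index (PSI). The article does not name a specific metric, so treat the Python sketch below as one option among several. The `psi` function, the equal-width binning, and the `eps` floor for empty bins are choices made for this example.

```python
import math
from typing import List, Sequence


def psi(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # constant baseline: everything falls in the first bin

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but those cutoffs are conventions rather than guarantees. Alert thresholds should be tuned per feature against historical data.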

Another important aspect is reproducibility. Capture random seeds, training artifacts, and environment dependencies so you can reproduce any model result. Reproducibility supports audits and investigations after an incident, and it is required when you need to explain why a production decision was made by a particular model version.

Monitoring, feedback, and continuous adaptation

Monitoring is about more than uptime. Dynamic workflow optimization needs holistic observability that connects system signals to business outcomes. Build dashboards that map model confidence thresholds to conversion metrics, error rates, and latency. Create composite SLOs that include both technical and business signals so operational teams have a single source of truth.

Feedback loops are what make optimization continuous. Capture outcome labels automatically where possible, for example success or failure markers associated with each workflow instance. Use these labels to retrain models and to run counterfactual analyses. The faster you close feedback loops, the sooner the system can converge toward better decisions.

Design automated experiments to validate that changes improve the defined metrics before full rollout. A/B testing, traffic splitting, and uplift modeling are effective techniques. Where experiments are not possible, implement conservative automatic rollbacks based on statistical control charts so you can maintain safety even when operating under uncertainty.
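A control-chart rollback trigger can be as simple as checking recent values of a metric against an upper control limit derived from a baseline period. The Python sketch below assumes a metric where higher is worse (such as an error rate). The `should_roll_back` name, the three-sigma limit, and the "two consecutive points" rule are illustrative choices, not values taken from the blueprint.

```python
from statistics import mean, stdev
from typing import Sequence


def should_roll_back(
    baseline: Sequence[float],
    recent: Sequence[float],
    sigmas: float = 3.0,
    consecutive: int = 2,
) -> bool:
    """Signal a rollback when `consecutive` recent points exceed the upper limit.

    The upper control limit is the baseline mean plus `sigmas` sample standard
    deviations. Requiring a run of points avoids reacting to a single spike.
    """
    upper_limit = mean(baseline) + sigmas * stdev(baseline)
    run = 0
    for value in recent:
        run = run + 1 if value > upper_limit else 0
        if run >= consecutive:
            return True
    return False
```

The baseline should come from a period before the change was applied, and it needs at least two observations for the standard deviation to be defined.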

Operationalize incident response with runbooks that include model-specific recovery steps. Include a path for quick model isolation or reversion to a known good version. Train on these procedures and run periodic drills so the organization can respond swiftly if optimization behavior goes off track.

Governance, compliance, and ethical considerations

Governance shapes responsible adoption. Establish policies that define acceptable automation boundaries, performance requirements, and review cadences. Role-based approvals and change windows help manage risk in regulated environments. Ensure policies explicitly cover model retraining frequency and the criteria for automated changes.

Compliance requires maintaining explainability and audit trails. Keep model explanations linked to production logs and ensure that reviewers can reconstruct decision paths for any automated change. Honor privacy requirements in data pipelines by minimizing sensitive data retention and by applying access controls and encryption. These controls are essential at enterprise scale.

Ethical considerations include bias monitoring and fairness checks. Evaluate models against diverse population slices and monitor for disparate impact. Implement thresholds that block automated decisions when fairness metrics degrade. Combining technical controls with human review helps your optimization program advance business goals without introducing harm.

Operational roles playbook

Successful deployment depends on people and processes as much as technology. Define clear roles and responsibilities, including automation owner, model steward, data engineer, and incident commander. Each role has specific duties across the lifecycle of a model-driven optimization program, from data collection to post-deployment monitoring.

The automation owner coordinates business objectives and prioritizes workflows for optimization. The model steward manages model validation, lineage, and releases. Data engineers ensure the event pipeline and feature store operate reliably. Platform engineers maintain the orchestration fabric and deployment pipelines. These roles collaborate on runbooks and incident handling so everyone knows how to respond when anomalies occur.

Establish a governance board that meets regularly to review performance metrics, model changes, and open risks. This board should include representatives from product, operations, security, and compliance. By assigning concrete responsibilities and decision authorities, you can deploy dynamic workflow optimization with confidence, knowing that trade-offs and risks are managed transparently.

Cost modeling and ROI measurement

Quantifying the value of optimization is essential to sustain investment. Build a cost model that accounts for compute and inference costs, data storage, pipeline maintenance, and engineering effort. Compare these costs against expected savings from reduced manual work, improved throughput, and lower error remediation. Use simple unit economics to show the marginal benefit per optimized transaction.

Measure ROI using incremental lift experiments. Track conversion rate improvements, service level improvements, and cost-per-transaction trends. Include attribution models that separate model-driven impact from other changes in the environment. Clear attribution avoids overclaiming and supports continued funding for the program.

Also track operational risk as a cost. Include the expected loss from potential regressions and the cost to remediate incidents. Incorporating risk makes trade-offs explicit and helps leadership decide between higher automation levels and more conservative manual review.
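The unit economics described in this section reduce to a few lines of arithmetic. The Python sketch below is illustrative only: the `MonthlyCosts` fields mirror the cost categories named above, the helper names are hypothetical, and any figures you pass in are your own estimates rather than benchmarks.

```python
import math
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    inference: float
    storage: float
    pipeline_maintenance: float
    engineering: float
    expected_incident_loss: float = 0.0  # operational risk expressed as a cost

    def total(self) -> float:
        return (
            self.inference
            + self.storage
            + self.pipeline_maintenance
            + self.engineering
            + self.expected_incident_loss
        )


def net_benefit_per_transaction(
    costs: MonthlyCosts, savings_per_transaction: float, transactions: int
) -> float:
    """Savings per optimized transaction minus its share of monthly cost."""
    return savings_per_transaction - costs.total() / transactions


def break_even_transactions(costs: MonthlyCosts, savings_per_transaction: float) -> int:
    """Monthly optimized transactions needed for savings to cover total cost."""
    if savings_per_transaction <= 0:
        raise ValueError("savings_per_transaction must be positive")
    return math.ceil(costs.total() / savings_per_transaction)
```

Adding an expected incident loss raises the break-even volume, which makes the trade-off between automation level and risk visible in the same units as the savings.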

Migration and scaling strategies

Begin small and expand. Migrate one high-priority workflow at a time and treat each migration as a learning opportunity. Start with non-critical workflows to build expertise and refine processes. Use migration checklists that include data validation, model thresholds, and rollback criteria.

As you scale, introduce shared services such as a centralized model registry, a feature store, and a decision API to avoid duplicated effort. Centralization reduces integration friction and helps maintain consistency across teams. Provide SDKs and clear API contracts so development teams can adopt optimization capabilities without deep platform knowledge.

Performance tuning at scale includes horizontal scaling of inference services and batching strategies to reduce cost. Use autoscaling and priority queues to meet SLAs for real-time workflows while offloading non-urgent inference to batch processes. Scaling efficiently allows you to extend dynamic workflow optimization across many processes without linear cost increases.

Common pitfalls and how to avoid them

Several pitfalls recur in optimization projects. Overengineering the model before data quality is addressed is common. To avoid it, focus first on strong instrumentation and simple baseline models that establish measurable improvements. Another pitfall is insufficient safety controls. Always put conservative policy limits and fallback options in place before enabling automated changes.

A third pitfall is a lack of cross-functional ownership. If teams view optimization as a siloed task, it will stagnate. Build cross-functional teams that include product, analytics, engineering, and operations staff. This shared ownership fosters quicker iteration and better alignment with business goals. Finally, do not ignore ethical implications. Regularly review fairness and privacy impacts and bake remediation into your release process.

Checklist for the first 90 days

To help teams move from planning to action, here is a practical 90-day checklist for organizations ready to deploy dynamic workflow optimization. In days 1 to 30, focus on discovery, data contracts, and instrumentation. In days 31 to 60, build initial models, run shadow inference, and set up observability. In days 61 to 90, perform canary rollouts, enable partial automation, and establish retraining pipelines. Each milestone should have clear acceptance criteria and a rollback plan.

Example deliverables for days 1 to 30 include target metric definitions, event schema prototypes, and a feature store proof of concept. For days 31 to 60, deliverables include a validated baseline model, a shadow-mode report, and runbooks for incident response. For days 61 to 90, deliverables include a canary rollout report, production dashboards, and automated retraining pipelines. Completing these milestones positions teams to scale and to continue refinement after the initial deployment.

Conclusion

Deploy dynamic workflow optimization to transform static automation into resilient, adaptive orchestration that continuously improves as business signals change. The A.I. PRIME blueprint provides a practical path through planning, data readiness, model management, safe rollout, and governance so teams can realize measurable gains while maintaining safety and compliance. This approach balances autonomy with control by combining model-driven insights with policy-enforced decision making and human oversight.

Organizations that embrace this blueprint can expect faster reaction to market signals, reduced operational costs, and improved customer outcomes. The most successful programs start with high-impact use cases, invest in robust data pipelines, and adopt incremental rollouts that prioritize safety. Continuous monitoring, feedback loops, and reproducible model management are non-negotiable elements that ensure durable results. By defining clear roles and by operationalizing runbooks and governance, you can reduce friction and create a sustainable optimization practice.

As you deploy dynamic workflow optimization, remember that technology is only part of the equation. Culture, process, and accountability determine how effectively optimization knowledge is applied. Equip teams with the right tools and responsibilities, and focus on measurable improvements. Start small, validate often, and scale what works. Over time, your organization will evolve from reactive manual tuning to a mature program in which optimization is part of the daily operational rhythm.

Finally, prioritize transparency and traceability. Maintain audit trails, link model explanations to production logs, and provide stakeholders with clear reports on impact and risk. These practices build trust and enable the program to expand across more workflows. Done correctly, dynamic workflow optimization becomes a strategic capability that delivers continuous efficiency and measurable business value while preserving safety and compliance.