Intro

Dynamic workflow optimization is transforming how organizations route work, allocate resources, and make decisions in real time. Rather than settling for static rules or periodic manual adjustments, teams can adopt systems that continuously tune themselves based on live performance data, changing demand, and evolving business priorities. The result is higher throughput, lower operational cost, and faster adaptation to new requirements. This article explains the core principles, technical building blocks, and practical steps to deploy dynamic workflow optimization across back office and customer facing operations. It offers actionable guidance for leaders who need to move from pilot projects to production scale and maintain continuous improvement over time. You will learn how to measure success, mitigate common risks, and create feedback loops that turn operational data into smarter routing, smarter allocation of personnel and machines, and smarter decision logic that aligns with business goals.

What is dynamic workflow optimization and why it matters

Dynamic workflow optimization is an approach that shifts workflow management from fixed process definitions to adaptive systems that react to real world signals. Traditional workflow systems use predefined routing paths and static resource assignments that must be updated manually. Dynamic workflow optimization, by contrast, uses data, analytics, and automation to modify routes, priorities, and resource use continuously so workflows remain efficient and resilient. Learn more in our post on Deploying Dynamic Workflow Optimization: A.I. PRIME's Implementation Blueprint.

The business value is clear. When routing, resource allocation, and decision logic update continuously, organizations can process more work with the same or fewer resources. That increases throughput, reduces cost per transaction, and improves customer experience. The technique fits many operational domains including document processing, claims handling, order fulfillment, IT service management, and customer support. It is especially powerful where volumes fluctuate, task complexity varies, and multiple constraints compete for the same resources.

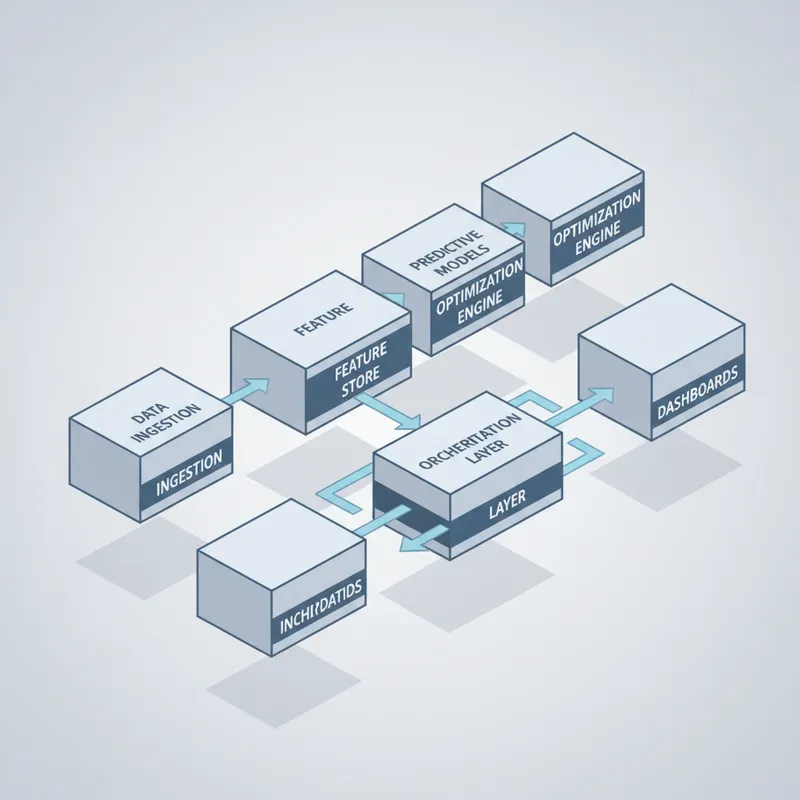

At the technical level, dynamic workflow optimization combines real time telemetry, predictive models, optimization engines, and orchestration layers. Together these components evaluate current conditions, predict near term demand, and select routing or resource assignments that meet business objectives such as minimizing latency, balancing workload, reducing cost, or maximizing quality. Continuous learning and feedback loops close the gap between planned behavior and actual outcomes, enabling gradual improvement without frequent manual intervention.

Core components: routing, resource allocation, and decision logic

Dynamic workflow optimization centers on three interdependent capabilities: routing, resource allocation, and decision logic. Each area needs dedicated models, metrics, and controls. They also need to operate in concert so decisions in one area do not create bottlenecks in another. Learn more in our post on Augmented Ops: How Agentic AI and Human Teams Should Share Decision Rights in 2025.

Routing involves deciding where work should go at each step. With dynamic workflow optimization, routing is context aware. It considers factors like case complexity, required skills, current queue lengths, service level agreements, and historical performance of teams. Instead of routing based solely on fixed rules, the system may route an order to a specialized team during peak hours or split a complex case among multiple agents for parallel processing. Routing decisions can be deterministic, probabilistic, or based on optimization outputs that trade off multiple objectives.

Resource allocation is about matching available capacity to current and forecast demand. In dynamic workflow optimization, allocation goes beyond simple scheduling. It forecasts near term volume and assigns personnel, machines, or cloud resources to optimize throughput and cost. Allocation strategies may include flexible shift assignments, dynamic staffing adjustments, temporary reallocation of specialized resources, and intelligent use of automation to offload repetitive tasks. Resource allocation must also reflect constraints such as skill certifications, labor agreements, and equipment availability.

Decision logic defines the rules and models that guide automated choices inside a workflow. It includes business rules, predictive models, and policy constraints. Decision logic in a dynamic system adapts over time by ingesting outcome data and retraining predictive models. For example, a decision logic component might automatically adjust escalation thresholds based on recent error rates or change fraud screening intensity depending on observed risk signals. The ability to evolve decision logic without lengthy development cycles is a hallmark of mature dynamic workflow optimization deployments.

Key cross functional elements that link routing, allocation, and decision logic include event streaming to capture state changes, a feature store for consistent model inputs, and an optimization service that balances competing objectives. Governance and audit trails are also essential so human teams can validate automated decisions and meet compliance requirements.

Essential metrics to drive continuous tuning

Successful dynamic workflow optimization relies on carefully chosen metrics. These metrics guide the optimization engine and provide signals for continuous tuning. Typical metrics include throughput, cycle time, first time resolution rate, cost per transaction, and service level attainment. Additional metrics such as model drift, prediction accuracy, and routing success rate help detect when retraining or policy changes are needed.

- Throughput: Number of cases processed per unit time.

- Cycle time: Time from case creation to completion.

- Cost per transaction: Total operational cost divided by processed cases.

- Quality measures: Error rate, rework rate, or customer satisfaction.

- Model performance: Accuracy, precision, recall, and drift metrics.

Regularly monitoring these metrics and feeding them back into the optimization loop allows the system to self adjust. When the system identifies a sustained change in demand patterns or a drop in model accuracy, it can trigger retraining, change allocation logic, or reroute work to maintain service levels and cost targets.

Design patterns and architecture for deployment

Designing for dynamic workflow optimization requires an architecture that supports real time decisioning, analytics, and safety controls. Modular, API driven architectures are well suited. At a high level, common architecture layers include data ingestion, analytics and modeling, optimization engine, orchestration layer, and user interfaces for monitoring and manual overrides. Learn more in our post on Template Library: Ready‑to‑Deploy Agent Prompts and Workflow Blueprints for Q3 Initiatives.

Data ingestion should capture events from all relevant systems including task management platforms, telephony systems, sensors, and transactional databases. Event streams or change data capture provide the low latency signals that enable timely adjustments. A feature store or centralized data service standardizes inputs so predictive models see consistent data regardless of where the events originate.

The analytics and modeling layer hosts prediction services and simulation tools. Predictive models forecast short term demand, estimate task handling times, and score cases for risk or priority. Simulation helps evaluate potential routing and allocation policies before applying them to production traffic. Models should be versioned and instrumented so performance can be compared across releases.

The optimization engine solves multi objective problems such as maximizing throughput while minimizing cost and maintaining quality thresholds. It can use techniques ranging from linear programming to heuristic search or reinforcement learning depending on scale and complexity. Importantly, the engine should expose explainable recommendations so operators can understand trade offs and approve changes if required.

The orchestration layer executes routing and allocation decisions. It translates recommendations into actions across multiple systems. This layer needs robust error handling and transactional integrity to avoid inconsistent states. User interfaces and dashboards provide human operators visibility into system behavior and controls for manual intervention, A feature that supports what we call human in the loop operations is essential for gradual trust building and governance.

Finally, governance and audit layers record decisions, inputs, and outcomes. These logs are necessary for compliance, root cause analysis, and continuous improvement. Clear ownership of models and decision policies makes governance practical at scale.

Implementing continuous tuning and feedback loops

Continuous tuning is the mechanism that turns analytics and automation into sustained performance gains. A well designed feedback loop captures outcomes, analyzes variance from expected behavior, and applies corrective action. Implementing such a loop requires instrumentation at every step of the workflow and a discipline of small, frequent adjustments rather than infrequent large changes.

Start by instrumenting outcomes and intermediate states. If a case moves from intake to review to resolution, capture timestamps for each state, the resources involved, and any decisions applied. Combine these signals with quality indicators such as error flags or customer feedback. This comprehensive telemetry is the raw material for both supervised model training and unsupervised anomaly detection.

Next, define experiments and validation criteria. Use controlled rollouts when changing routing rules or retraining models. A typical pattern is to run a new policy on a small portion of traffic and compare key metrics against a control group. Statistical tests or Bayesian methods can determine whether observed differences are meaningful. This approach reduces the risk of unexpected regressions while providing rapid feedback.

Automate routine adjustments where possible. For example, when predicted queue depth crosses a threshold, the system can automatically reassign flexible staff or shift lower priority work to automation. More significant changes, such as new allocation policies that affect costs, can require operator approval. The balance between automation and human oversight will vary by industry and risk tolerance.

Finally, cultivate a learning culture. Teams should review performance reports regularly, share lessons learned, and update models and policies accordingly. Continuous tuning becomes sustainable when the organization treats it as an operational discipline rather than a one off project.

Practical techniques for tuning

- Adaptive thresholds: Replace fixed thresholds with dynamic thresholds that adapt to seasonality and trend.

- Context aware priority: Dynamically reweight priorities based on downstream impacts such as SLA risk or customer value.

- Model ensemble: Combine multiple predictive models to reduce variance and improve robustness.

- Fast retraining pipelines: Automate retraining with frequent data windows and evaluation gates.

- Shadow testing: Run new optimization logic in parallel with production to compare decisions without affecting live traffic.

These techniques help the system remain responsive and aligned with evolving objectives. They also make it easier to quantify improvements and attribute gains to specific changes.

Technology choices and integration considerations

Choosing the right technologies for dynamic workflow optimization depends on scale, latency requirements, and existing infrastructure. Core capabilities include data streaming, model hosting, optimization algorithms, orchestration, and observability. Cloud native services offer managed components for many of these needs, but on premise or hybrid deployments remain common in regulated environments.

Model hosting needs to support low latency inference and version management. A model that predicts task duration in milliseconds enables finer grained routing decisions. Feature consistency across training and runtime is critical to prevent performance surprises. Use a feature store or robust data pipeline to ensure training and inference data align.

Optimization engines vary. Linear programming works well for assignment problems with clear constraints and linear costs. For highly dynamic or combinatorial problems, heuristic methods or reinforcement learning may yield better long term trade offs. Start with simpler methods and add complexity as you validate benefits. Explainability is easier with simpler models and is often necessary for auditability.

Integrations with existing systems are essential. The orchestration layer needs to call out to task management systems, scheduling tools, and automation platforms. Designing these integrations with idempotent APIs and clear compensation actions reduces risk. Event driven architectures help decouple components and allow parts of the system to fail gracefully while retaining consistency.

Observability and monitoring deserve special attention. Operational dashboards should show not only business KPIs but also model metrics, optimization decision rates, and incident indicators. Alerting should trigger when models drift, when throughput dips unexpectedly, or when constraint violations occur. Built in canaries and automated rollback mechanisms protect production performance.

Security and compliance considerations

Dynamic decision systems may process sensitive data and must comply with privacy and industry regulations. Secure data handling, access controls, and audit trails are non negotiable. Anonymize or pseudonymize personal data where possible for model training. Where decisions impact customer outcomes, expose human review and appeal processes. Maintain comprehensive logs so decisions can be reconstructed for audits.

Measuring impact: throughput, cost, and quality

Measuring impact is both technical and managerial. To claim success, organizations should quantify improvements in throughput, cost savings, and quality gains attributable to dynamic workflow optimization. Establishing causal links requires controlled experiments, solid baseline measurements, and careful attribution. Typical approaches include A B testing, pre post analysis with seasonality adjustments, and synthetic controls.

Throughput increases when optimized routing and allocation reduce idle time and accelerate processing. Express throughput improvements in relative terms and absolute units such as additional cases processed per hour. Cost reductions may come from lower overtime, better utilization of automation, or fewer escalations. Capture both direct cost savings and indirect benefits such as improved customer retention.

Quality metrics protect against optimization that sacrifices outcomes for efficiency. Monitor error rates, rework frequency, dispute counts, and customer satisfaction scores. When implementing dynamic optimization, add guardrails that prevent cost saving actions from degrading quality beyond acceptable bounds. The optimization engine can include quality constraints that it must respect during decision making.

Another useful lens is time to detect and time to correct. These metrics measure how quickly the system identifies performance regressions and how rapidly it applies corrective changes. Shorter detection and correction cycles indicate a more mature continuous tuning capability and typically lead to sustained improvements.

Case studies and hypothetical scenarios

Concrete examples help illustrate how dynamic workflow optimization produces business impact. Consider a document processing center that used to assign batches in a round robin manner. By implementing an adaptive routing layer that estimates processing time per batch and assigns batches to teams based on current queue depth and specialist skills, the center increased throughput by 35 percent and reduced average cycle time by 28 percent. The system included continuous retraining so models remained accurate as document types changed.

Another scenario involves an IT service desk that experiences large variability in ticket inflow. A dynamic workflow optimization system predicted near term peaks and automatically shifted staff capacity, enabled temporary task reassignment, and prioritized tickets based on business impact. The result was fewer SLA breaches and a noticeable drop in average handling cost as overtime and escalation rates fell.

In a more automation centric example, a claims handling process used dynamic decision logic to escalate ambiguous claims to human adjudicators while routing routine claims to automated processors. The system continuously refined its ambiguity classifier and routing thresholds based on downstream error rates. This approach reduced manual workload by 50 percent while maintaining adjudication quality.

These scenarios share common success factors: clear metrics, incremental rollout, strong telemetry, and a culture of continuous improvement. Each also demonstrates how improvements in routing, allocation, and decision logic interact to drive throughput and cost benefits.

Challenges, trade offs, and risk mitigation

Deploying dynamic workflow optimization is not without challenges. Data quality issues can undermine model performance. Organizational resistance to automated decisions can slow adoption. Misconfigured optimization objectives can lead to unintended behaviors such as gaming the system or focusing on easy wins that harm long term value. Recognizing and managing these risks is critical.

Mitigate data quality issues by investing in data validation, lineage tracking, and a feature store that enforces consistent preprocessing. Prevent harmful optimization by including multi dimensional objectives and constraints in the optimization problem. For example, require minimum quality levels and balanced workload distribution as hard constraints rather than soft incentives.

Change management is equally important. Give teams visibility and control through dashboards, explainable recommendations, and staged rollouts. Provide training so operators understand how the system makes decisions and how to intervene when necessary. Establish clear governance that defines ownership of models, policies, and rollback procedures.

Finally, prepare for model degradation by building retraining and monitoring frameworks. Monitor not only business KPIs but also input distributions and model confidence. Set automated alerts when input features drift significantly or when models produce unusually low confidence levels. These signals should trigger investigation and potential retraining before performance deteriorates materially.

Roadmap for deployment and scaling

A pragmatic deployment roadmap moves from discovery to pilot to scale with continuous improvement as the ongoing operational mode. A recommended sequence of steps includes discovery, prototyping, pilot, controlled rollout, and enterprise scale. Each step has specific goals and artifacts that make the next step lower risk and higher value.

During discovery, map the target workflows, identify constraints, and collect baseline metrics. Use this phase to prioritize use cases by impact and feasibility. In prototyping, implement a minimally viable optimization capability focused on a narrow segment. Ensure the prototype emphasizes telemetry, basic predictive models, and a simple optimization rule set.

Pilots should validate value using live traffic and controlled experimentation. Keep pilots time boxed and supported by a cross functional team that includes operations, data science, and engineering. Use pilot learnings to harden data pipelines, add governance controls, and expand models.

Controlled rollout extends the solution to additional business units or geographies while maintaining monitoring and manual override capabilities. At scale, focus on operationalizing continuous tuning, automating retraining pipelines, and institutionalizing governance practices. Maintain a feedback loop between practitioners and model owners to keep optimization aligned with changing business priorities.

Invest in platform capabilities that reduce friction as you scale. A reusable feature store, model registry, and optimization templates accelerate new use cases. Standardized APIs and observability tools make integration and monitoring easier across diverse systems.

Organizational capabilities for sustained improvement

Technology alone does not guarantee success. Organizational capabilities such as a data driven culture, cross functional collaboration, and clear decision rights are essential. Build small cross functional teams that combine domain expertise, analytics, and engineering. Empower these teams to run experiments and own local metrics while aligning to enterprise level goals.

Leadership support enables funding and prioritization while governance ensures safe and compliant operation. Establish a center of excellence to capture best practices, templates, and reusable components. This center can also curate a library of validated models and policies so new deployments start from proven foundations.

Finally, reward continuous improvement. Recognize teams that use data to reduce cycle times, increase throughput, or lower costs while preserving quality. Celebrating incremental wins reinforces the discipline of ongoing tuning and experimentation that is central to dynamic workflow optimization.

Conclusion

Dynamic workflow optimization offers a practical path to continuous improvement by bringing together data, predictive models, optimization engines, and orchestration to tune routing, resource allocation, and decision logic in real time. The payoff is measurable. Organizations that implement continuous tuning can increase throughput, reduce cost per transaction, and maintain or improve quality. Success requires thoughtful architecture, strong telemetry, governance, and a culture that treats optimization as an ongoing operational capability rather than a one time project. Start with clear objectives and metrics so every change can be measured against business goals. Pilot in controlled conditions, validate improvements through experiments, and scale gradually while ensuring transparency and human oversight. Invest in automation for routine adjustments, but keep pathways for human review when decisions have high impact or regulatory implications.

Operational readiness depends on several building blocks. Reliable data ingestion and a feature store ensure models receive consistent inputs. Low latency model serving and an optimization engine enable timely decisions. Orchestration connects decisions to execution systems and provides rollback and compensation actions to protect consistency. Observability and monitoring detect model drift and performance regressions rapidly. Governance and audit trails provide accountability and support compliance. Together these pillars allow organizations to move from reactive manual changes to proactive, continuous optimization.

Finally, the organizational shift is as important as the technical design. Foster cross functional teams, document decision policies, and create feedback loops that turn daily operations into opportunities for learning and improvement. Reward experimentation, measure impact rigorously, and prioritize actions that preserve quality while improving efficiency. With the right combination of technology and organizational alignment, dynamic workflow optimization becomes a durable competitive advantage that adapts as business needs evolve. It empowers teams to handle growing volumes and complexity without a corresponding rise in cost. The long term value comes from systems that keep getting better, not from one time efficiency gains. Deploy with discipline and iterate with measurement so the system becomes a continuous engine of improvement that supports strategic goals while delivering tangible operational benefits.