Adopting autonomous systems at scale requires more than technical performance. Leadership teams need confidence that agentic systems behave as intended, can be audited, and can be explained in business terms. This post provides actionable guidelines to build explainable agentic AI that executives will approve for scaled deployment this quarter. It combines governance, design patterns, instrumentation, and communication tactics so you can present a clear plan, measurable controls, and repeatable audits. Learn more in our post on Custom Integrations: Connect Agentic AI to Legacy Systems Without Disruption.

Explainability is not a checkbox. It is an engineering and organizational discipline that touches model design, data lineage, decision logging, human-in-the-loop controls, testing, and executive reporting. The guidance below shows how to design explainable agentic AI that yields transparent decision trails, concise rationales, verifiable audits, and business metrics that match executive needs. Follow the step-by-step approach to reduce approval friction, accelerate pilots into production, and ensure leadership can sign off with clear acceptance criteria.

Why explainability matters to executives

Executives view agentic systems through a risk and value lens. They ask three core questions: Does it increase revenue or cut costs reliably? Does it create legal or reputational risk? Can we understand and control decisions made by the system? Building explainable agentic AI addresses all three questions by creating visibility into decision logic, documenting inputs and outputs, and providing audit trails that are understandable to nontechnical stakeholders. Learn more in our post on Future of Work Q3 2025: Agentic AI as the New Operations Layer.

Leaders also need to align AI behavior with corporate policy, industry regulations, and risk tolerance. Explainability simplifies that alignment because it surfaces the conditions under which a system acted and why it chose one action over another. With the right explanations, decision makers can validate that the system's behavior matches policy, and compliance teams can map outcomes to regulatory controls.

Finally, explainability is essential for operational monitoring. When an agent behaves unexpectedly, teams need rapid root cause analysis. Explainable agentic AI reduces mean time to resolution by giving engineers and business owners a clear sequence of reasoning steps, decision probabilities, and the data dependencies that led to the action. For executives, that translates into predictable operations and faster remediation when something goes wrong.

Core design principles for explainable agentic AI

Designing explainable agentic AI starts with principles that guide architecture, feature selection, and model choices. The first principle is to design for interpretability at every layer. Where models or modules that provide native explanations are available, use them. When black-box components are necessary, wrap them with explanation layers that summarize inputs, confidence, and contributing features. Learn more in our post on Cost Modeling: How Agentic AI Lowers Total Cost of Ownership vs. Traditional Automation.

The second principle is to separate decision orchestration from decision justification. Use an agentic control plane that receives tasks, decides which skills to invoke, and logs the orchestration decisions. Independently, produce a justification artifact that explains why the orchestration chose a path, including the alternatives considered and the scoring used. This separation lets auditors read concise justifications without parsing low-level code or raw model traces.

The third principle is to capture provenance and data lineage. Every input, transformation, model version, external call, and supporting dataset should be recorded with timestamps and identifiers. Provenance enables reproducibility and supports counterfactual analysis. When an auditor asks whether a specific input produced a result, provenance lets you replay the exact chain that produced that decision.
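One way to make provenance concrete is to store a content hash of each input next to the model version and dataset identifiers. The sketch below is illustrative only: `ProvenanceRecord`, `record_provenance`, `matches_input`, and every field name are hypothetical and not taken from any particular framework.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


def content_hash(payload: dict) -> str:
    """Return a stable SHA-256 hex digest of a JSON-serializable payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical minimal provenance entry for one decision."""
    decision_id: str
    model_version: str
    input_hash: str
    dataset_ids: tuple
    external_calls: tuple
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def record_provenance(decision_id, model_version, inputs, dataset_ids, external_calls):
    """Build a provenance record; the raw inputs are hashed, not stored here."""
    return ProvenanceRecord(
        decision_id=decision_id,
        model_version=model_version,
        input_hash=content_hash(inputs),
        dataset_ids=tuple(dataset_ids),
        external_calls=tuple(external_calls),
    )


def matches_input(record: ProvenanceRecord, candidate_inputs: dict) -> bool:
    """Answer the auditor's question: did this exact input feed the decision?"""
    return record.input_hash == content_hash(candidate_inputs)
```

A hash only confirms that a candidate input matches. Replaying the full chain would still require retaining the raw inputs and snapshots in a separate, access-controlled store.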

The fourth principle is to enforce granularity in explanations. Offer multiple levels of explanation tailored to audiences. Executives need short, actionable rationales and business impact estimates. Engineers need detailed traces, tokens, and model confidence distributions. Compliance officers need mapping to policy controls and data retention logs. Build explanation interfaces that can present different depths without losing fidelity.

Practical patterns

Modular skill design: Build agentic skills as isolated capabilities with clear input and output contracts. Each skill emits a standardized explanation payload.

Decision scoring and alternatives: Always log top N alternatives with scores. That makes it possible to show what was considered and why an option was chosen.

Versioned models and prompts: Tag models, prompt templates, and system configurations with version metadata to support audits and rollbacks.

Confidence thresholds: Design fallback strategies for low-confidence decisions and log the threshold logic used at runtime.
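The decision scoring and confidence threshold patterns above can be combined in a few lines. This is a minimal sketch under stated assumptions: the `decide` function, the `escalate_to_human` fallback action, and the 0.7 default threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alternative:
    action: str
    score: float


@dataclass(frozen=True)
class Decision:
    chosen: str
    confidence: float
    alternatives: tuple   # top-N alternatives, best first
    threshold: float      # threshold in force at runtime, kept for audits
    escalated: bool


def decide(scored_actions: dict, top_n: int = 3, threshold: float = 0.7) -> Decision:
    """Pick the best-scoring action, or fall back to a human below the threshold."""
    if not scored_actions:
        raise ValueError("scored_actions must not be empty")
    ranked = sorted(scored_actions.items(), key=lambda kv: kv[1], reverse=True)
    best_action, best_score = ranked[0]
    escalated = best_score < threshold
    return Decision(
        chosen="escalate_to_human" if escalated else best_action,
        confidence=best_score,
        alternatives=tuple(Alternative(a, s) for a, s in ranked[:top_n]),
        threshold=threshold,
        escalated=escalated,
    )
```

Because the returned `Decision` carries both the alternatives and the threshold, a later reviewer can see what was considered and which rule triggered the fallback.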

Instrumentation: Logging, metrics, and auditable trails

Explainable agentic AI requires disciplined instrumentation to create reliable audit trails. Instrumentation should capture the context of each decision, the chain of agentic operations, and the explanatory artifacts. Logs are the raw material for audits, so make them structured, searchable, and immutable for the retention period required by your policies.

Structured logs should include task identifiers, user or system initiator, input payload, normalized features, model version, timestamps for each action, top alternatives with scores, and the final action. Supplement logs with snapshots of relevant external data fetched during the decision. The goal is to be able to reconstruct the decision at any later point with confidence.
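As a rough illustration of the fields listed above, the following sketch serializes one decision as a single JSON line. The function name `build_decision_log` and the exact key names are assumptions, not an established schema.

```python
import json
from datetime import datetime, timezone


def build_decision_log(task_id, initiator, input_payload, features,
                       model_version, alternatives, final_action, now=None):
    """Serialize one decision as a single JSON line with sorted keys."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = {
        "task_id": task_id,
        "initiator": initiator,
        "input_payload": input_payload,
        "normalized_features": features,
        "model_version": model_version,
        "timestamp": timestamp,
        # Alternatives arrive as (action, score) pairs, best first.
        "alternatives": [
            {"action": action, "score": score} for action, score in alternatives
        ],
        "final_action": final_action,
    }
    return json.dumps(record, sort_keys=True)
```

Emitting one JSON object per line keeps the log easy to ship to most search and archive tools, and the optional `now` argument makes the output deterministic in tests.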

Beyond logs, build metrics that are meaningful to leadership. Focus on business-level key performance indicators as well as safety and reliability metrics. Examples include success rate versus target, time to resolve exceptions, proportion of human interventions, and distribution of confidence scores. Pair these with error taxonomy metrics that show categories of failures and their impact on business outcomes.
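A small aggregation over logged decisions is enough to produce several of these numbers. The sketch below assumes each decision is a dict with hypothetical `succeeded`, `human_intervened`, and `confidence` keys; real log schemas will differ.

```python
from collections import Counter


def summarize(decisions: list) -> dict:
    """Compute success rate, human intervention rate, and a confidence histogram."""
    total = len(decisions)
    if total == 0:
        return {"success_rate": 0.0, "human_intervention_rate": 0.0,
                "confidence_histogram": {}}
    successes = sum(1 for d in decisions if d["succeeded"])
    interventions = sum(1 for d in decisions if d["human_intervened"])
    # Ten equal-width buckets; a confidence of exactly 1.0 lands in the top bucket.
    buckets = Counter(min(int(d["confidence"] * 10), 9) for d in decisions)
    histogram = {
        f"{idx / 10:.1f}-{(idx + 1) / 10:.1f}": count
        for idx, count in sorted(buckets.items())
    }
    return {
        "success_rate": successes / total,
        "human_intervention_rate": interventions / total,
        "confidence_histogram": histogram,
    }
```

The histogram is deliberately coarse so that it can be shown on a dashboard without further processing.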

Auditable trails should be queryable and exportable in formats that compliance teams can archive. Include cryptographic integrity checks where appropriate to demonstrate that records have not been tampered with. For high-risk domains, consider an append-only ledger or verifiable logging mechanism. Make access controls explicit so that audit logs remain available to authorized parties without exposing sensitive data to users who do not need it.
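One common way to make tampering detectable is a hash chain, where each entry's hash covers the previous entry's hash. The class below is a minimal in-memory sketch of that idea; `HashChainLog` is a hypothetical name, and a production system would also need durable storage and protection of the chain head.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class HashChainLog:
    """In-memory log where every entry commits to all entries before it."""

    def __init__(self):
        self._entries = []

    def append(self, payload: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        digest = _entry_hash(prev, payload)
        self._entries.append({"payload": payload, "prev_hash": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev:
                return False
            if entry["hash"] != _entry_hash(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True
```

Publishing or escrowing the latest hash at intervals lets an auditor confirm that the log they receive is the one that existed at that time.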

Logging best practices

Standardize explanation payload schema for every agentic action.

Mask or tokenize sensitive data and store raw data separately under stricter controls.

Include human review annotations and outcomes to connect human decisions with agentic actions.

Automate periodic integrity checks and retention enforcement to comply with policies.
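The masking practice above can be sketched with a keyed hash, so that the same value always maps to the same token without being readable in the log. The field list, the `tok_` prefix, and the function names are assumptions for illustration; key management is out of scope here.

```python
import hashlib
import hmac

# Hypothetical set of field names treated as sensitive.
SENSITIVE_FIELDS = {"email", "account_number"}


def tokenize(value: str, secret: bytes) -> str:
    """Return a deterministic, non-reversible token for a sensitive value."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def mask_record(record: dict, secret: bytes) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        key: tokenize(str(value), secret) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }
```

Deterministic tokens let analysts join log entries that refer to the same customer, while the raw values stay in the separately controlled store.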

[image: Executive dashboard for agent decisions]

Governance, policies, and approval criteria

Executives approve deployments when governance and clear policy mappings are in place. Start by defining ownership, roles, and escalation paths. Create a governance board with representation from business, security, compliance, and engineering. That board should set the acceptance criteria for explainable agentic AI and sign off on risk tolerances and data handling rules.

Acceptance criteria should be measurable. Define thresholds for operational metrics, human override rates, and acceptable error classes. Include mandatory checks such as bias testing, data quality gates, and privacy impact assessments. Ensure that any model updates pass a controlled release process that includes regression testing on a representative explainability benchmark.

Policy design should make explainability requirements explicit. For example, mandate that any autonomous decision that affects customers must emit a short rationale suitable for customer support teams. For internal decisions, require traceability to policy rules and a mapping from decision outcomes to compliance controls. These policies then feed into your deployment checklist so that approvals are straightforward and repeatable.

Create a concise audit playbook that lists what artifacts auditors can request, the format of those artifacts, and the expected response time. Include example queries and prebuilt reports so auditors do not need to start from scratch. By reducing friction in audits, you build executive trust that governance is operational and not theoretical.

Pilots, human-in-the-loop, and staged rollouts

To get executive buy-in quickly, structure pilots with clear milestones, short timeboxes, and observable criteria for success. Use human-in-the-loop patterns initially to constrain risk and provide oversight. Human reviewers should be presented with compact explanations that enable quick validation and intervention. Record every human override and use those records to refine agentic policies and thresholds.

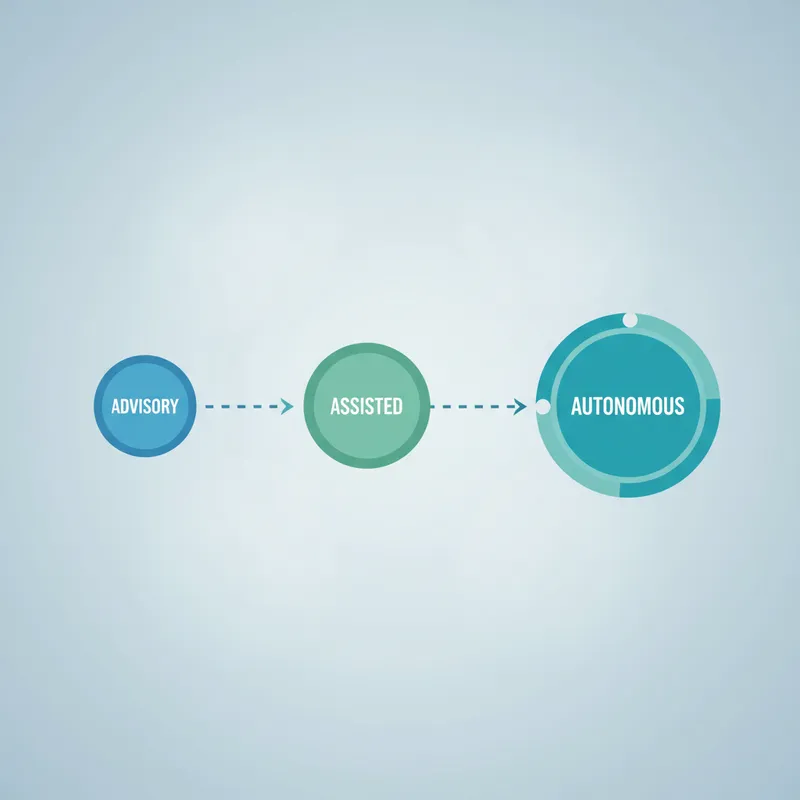

Design staged rollouts that increase autonomy as confidence grows. Stage one: advisory mode, where the agent suggests actions and human operators accept or reject them. Stage two: assisted mode, where the agent executes low-risk tasks autonomously under monitoring. Stage three: full autonomy in scoped domains with periodic audits. Each stage must have quantitative exit criteria mapped to both business KPIs and explainability metrics.
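Quantitative exit criteria are easiest to enforce when they are written as data. The sketch below models the three stages and a promotion gate; the metric names and bounds in `EXIT_CRITERIA` are invented placeholders that a governance board would replace with its own.

```python
from enum import Enum


class Stage(Enum):
    ADVISORY = 1
    ASSISTED = 2
    AUTONOMOUS = 3


# Hypothetical exit criteria: (metric name, comparison, bound).
EXIT_CRITERIA = {
    Stage.ADVISORY: [("acceptance_rate", ">=", 0.90),
                     ("explanation_completeness", ">=", 0.99)],
    Stage.ASSISTED: [("override_rate", "<=", 0.05),
                     ("explanation_completeness", ">=", 0.99)],
}


def unmet_criteria(stage: Stage, metrics: dict) -> list:
    """Return the names of exit criteria that are missing or not satisfied."""
    unmet = []
    for metric, op, bound in EXIT_CRITERIA.get(stage, []):
        value = metrics.get(metric)
        if value is None:
            unmet.append(metric)
            continue
        passed = value >= bound if op == ">=" else value <= bound
        if not passed:
            unmet.append(metric)
    return unmet


def next_stage(stage: Stage, metrics: dict) -> Stage:
    """Promote by one stage only when every exit criterion is met."""
    if stage is Stage.AUTONOMOUS or unmet_criteria(stage, metrics):
        return stage
    return Stage(stage.value + 1)
```

The list returned by `unmet_criteria` doubles as the explanation for why a promotion was refused, which fits naturally into a governance review packet.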

Include A/B testing and canary deployments to detect regressions in behavior and explanations. Track the correlation between agent explanations and human trust signals such as override frequency and satisfaction scores. Use these signals to tune explanation granularity, content, and delivery method to better match stakeholder needs.

Set an internal approval cadence. For example, require governance board review after each pilot stage and provide a concise packet including audit logs, explanation examples, metric dashboards, and incident summaries. This makes it possible for executives to approve scaled deployment this quarter with clear evidence rather than abstract promises.

Testing explainability: scenarios, audits, and continuous validation

Testing explainability is about more than unit tests. It requires scenario-based validation, adversarial probes, and periodic audits that exercise the boundaries of agent behavior. Create a catalog of scenarios that represent normal operations, edge cases, and adversarial inputs. Each scenario should have expected explanation artifacts and success criteria.

Conduct red team exercises that probe the agent for opaque behavior and try to produce misleading or harmful explanations. Use these exercises to harden explanation generation and to refine human fallback strategies. Include privacy testers who attempt to extract sensitive data through chained interactions. All findings should be recorded and prioritized for remediation.

Automate continuous validation by deploying explainability unit tests as part of your CI pipeline. For example, tests can assert that explanation payloads contain required fields, that confidence outputs are within expected distributions for representative inputs, and that provenance links are intact. When tests fail, route alerts to both engineering and governance teams for triage.
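An explainability unit test can be as simple as a validator plus a few pytest-style test functions. The required fields below are an assumed minimal schema, and `validate_explanation` is a hypothetical helper, not part of any testing library.

```python
# Assumed minimal schema: field name -> expected Python type.
REQUIRED_FIELDS = {
    "summary": str,
    "confidence": float,
    "alternatives": list,
    "provenance": dict,
}


def validate_explanation(payload: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            problems.append(f"wrong type: {name}")
    confidence = payload.get("confidence")
    if isinstance(confidence, float) and not 0.0 <= confidence <= 1.0:
        problems.append("confidence out of range")
    provenance = payload.get("provenance")
    if isinstance(provenance, dict) and not provenance.get("model_version"):
        problems.append("provenance missing model_version")
    return problems


def test_valid_payload_passes():
    payload = {
        "summary": "Refund approved: order arrived damaged.",
        "confidence": 0.88,
        "alternatives": [{"action": "replace", "score": 0.09}],
        "provenance": {"model_version": "router-2.1.0"},
    }
    assert validate_explanation(payload) == []


def test_broken_provenance_is_reported():
    payload = {"summary": "x", "confidence": 0.5, "alternatives": [], "provenance": {}}
    assert validate_explanation(payload) == ["provenance missing model_version"]
```

Running such tests against a fixed set of representative inputs in CI gives an early signal when a model or prompt change silently drops part of the explanation.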

Schedule regular audits that review a randomized sampling of decisions and their explanations. Audits should evaluate explanation fidelity, alignment with policy, and business impact. For executive stakeholders, produce summary reports that highlight trends rather than raw logs. These reports should include actionable items and recommended remediations.
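Random sampling for audits is more defensible when it can be reproduced. A minimal sketch, assuming decisions are identified by string IDs and that the seed is recorded in the audit report (`sample_for_audit` is a hypothetical helper):

```python
import random


def sample_for_audit(decision_ids, sample_size: int, seed: int) -> list:
    """Draw a reproducible random sample of decision IDs for review."""
    rng = random.Random(seed)          # local generator, no global state
    ordered = sorted(decision_ids)     # fixed order so the seed fully determines the draw
    return rng.sample(ordered, min(sample_size, len(ordered)))
```

Recording the seed alongside the audit findings lets a second reviewer regenerate exactly the same sample later.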

Engineering patterns for explainable agentic AI

Engineering explainable agentic AI requires practical patterns that teams can implement quickly. Adopt a layered architecture where the agent orchestration layer is distinct from skill implementations and from the explanation generation layer. This modularity simplifies testing and allows explanation strategies to evolve independently of core logic.

Implement an explanation API contract that every skill must implement. The contract should define the minimal explanation payload, which includes a summary rationale, contributing features or inputs, confidence score, alternatives considered, and provenance metadata. This makes it straightforward to assemble composite explanations when multiple skills are invoked for a single decision.
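In Python, such a contract could be expressed with `typing.Protocol`. The sketch below is one possible shape, not a standard: `Explanation`, `ExplainableSkill`, the toy `DiscountSkill`, and the `compose` helper are all hypothetical, and taking the minimum confidence across skills is a deliberately conservative assumption.

```python
from dataclasses import asdict, dataclass
from typing import Any, Protocol, Tuple


@dataclass
class Explanation:
    skill: str
    summary: str
    contributing_inputs: dict
    confidence: float
    alternatives: list
    provenance: dict


class ExplainableSkill(Protocol):
    """Contract: every skill returns its result together with an explanation."""
    name: str

    def run(self, task: dict) -> Tuple[Any, Explanation]:
        ...


class DiscountSkill:
    """Toy skill used only to illustrate the contract."""
    name = "discount"

    def run(self, task: dict) -> Tuple[Any, Explanation]:
        eligible = task["loyalty_years"] >= 2
        result = 0.10 if eligible else 0.0
        return result, Explanation(
            skill=self.name,
            summary=f"Applied {result:.0%} discount based on loyalty tenure.",
            contributing_inputs={"loyalty_years": task["loyalty_years"]},
            confidence=0.9,
            alternatives=[{"action": "no_discount", "score": 0.1}],
            provenance={"model_version": "rules-1"},
        )


def compose(explanations: list) -> dict:
    """Assemble a composite explanation from several skill explanations."""
    if not explanations:
        raise ValueError("at least one explanation is required")
    return {
        "summary": " ".join(e.summary for e in explanations),
        # Assumption: the composite is only as confident as its weakest step.
        "confidence": min(e.confidence for e in explanations),
        "steps": [asdict(e) for e in explanations],
    }
```

Because `Protocol` uses structural typing, existing skills only need a `name` attribute and a matching `run` method to satisfy the contract; they do not have to inherit from a shared base class.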

Where possible, use models and algorithms that provide local interpretable explanations. For deep models, use surrogate models for explanations of specific decisions and ensure that surrogate fidelity is measured and recorded. When natural language explanations are generated, include structured backing data that ties sentences to concrete inputs and intermediate outputs so that claims can be verified.
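Surrogate fidelity is often reported as the fraction of inputs on which the surrogate agrees with the model it explains. The function below computes that agreement rate; the two lambdas in the usage lines are toy stand-ins invented for illustration, not real models.

```python
def fidelity(black_box, surrogate, inputs) -> float:
    """Fraction of inputs where the surrogate reproduces the black-box output."""
    inputs = list(inputs)
    if not inputs:
        raise ValueError("inputs must not be empty")
    agreements = sum(1 for x in inputs if black_box(x) == surrogate(x))
    return agreements / len(inputs)


# Toy stand-ins: a two-condition "black box" and a one-rule surrogate.
black_box = lambda x: x["amount"] > 100 and x["tenure"] < 2
surrogate = lambda x: x["amount"] > 100

sample = [
    {"amount": 150, "tenure": 1},
    {"amount": 150, "tenure": 5},
    {"amount": 50, "tenure": 1},
    {"amount": 80, "tenure": 3},
]
score = fidelity(black_box, surrogate, sample)
```

Storing this score next to each surrogate-based explanation tells a reader how far the simplified account can be trusted.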

Keep a centralized registry of models, prompts, and configuration artifacts. The registry should expose metadata for audits, including owners, validation reports, and approved use cases. Integrate the registry with your deployment pipeline so that deployments cannot occur without passing governance gates. This reduces the possibility of unauthorized model updates creating unexplained behaviors in production.
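A registry-backed governance gate might look like the following sketch. It is an in-memory illustration only; `ArtifactRegistry`, `RegistryEntry`, `DeploymentBlocked`, and the specific checks are assumptions about what a gate could enforce.

```python
from dataclasses import dataclass, field
from typing import Optional


class DeploymentBlocked(Exception):
    """Raised when an artifact fails a governance gate."""


@dataclass
class RegistryEntry:
    name: str
    version: str
    owner: str
    approved_use_cases: set = field(default_factory=set)
    validation_report: Optional[str] = None  # e.g. a link or document ID


class ArtifactRegistry:
    """In-memory registry of models, prompts, and configuration artifacts."""

    def __init__(self):
        self._entries = {}

    def register(self, entry: RegistryEntry) -> None:
        self._entries[(entry.name, entry.version)] = entry

    def check_deployable(self, name: str, version: str, use_case: str) -> RegistryEntry:
        """Return the entry if every gate passes, otherwise raise DeploymentBlocked."""
        entry = self._entries.get((name, version))
        if entry is None:
            raise DeploymentBlocked(f"{name}@{version} is not registered")
        if not entry.validation_report:
            raise DeploymentBlocked(f"{name}@{version} has no validation report")
        if use_case not in entry.approved_use_cases:
            raise DeploymentBlocked(f"{name}@{version} is not approved for {use_case}")
        return entry
```

Calling `check_deployable` as a required step in the deployment pipeline means an unregistered or unvalidated artifact stops the release instead of reaching production.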

Communicating value and risk to leadership

Executive acceptance depends on clear, concise communication about value, risk, and controls. Prepare a one-page executive brief that answers: what the agent does, the business impact, the measurable controls in place, the explainability features, and the audit schedule. Use visuals like trend lines showing reduction in manual time, error rates, and confidence improvements over pilot stages.

When presenting explainable agentic AI to leadership, use concrete examples. Walk through a typical decision with the explanation artifacts that will be available in production. Show the provenance trail and how an auditor can reconstruct the decision in minutes. Demonstrate human-in-the-loop interfaces and how overrides are captured and analyzed.

Quantify residual risk and present mitigation plans. Leadership will accept some level of residual risk if there are clear contingencies, rollback plans, and monitoring alerts. Provide a risk heatmap that maps types of failures to business impact and show the controls in place for each cell of the map. This approach builds trust because it acknowledges unknowns and documents how they are managed.

Finally, align success metrics with executive priorities. If leadership cares about revenue velocity, show ROI estimates and timelines to break even. If the priority is compliance, highlight auditability metrics and retention controls. Tailor the narrative so explainable agentic AI is framed as a business enabler rather than a purely technical initiative.

Operational checklist for executive approval this quarter

Use the checklist below to prepare a deployment packet that executives can review quickly. Each item maps to a deliverable that demonstrates explainability, auditability, and controlled risk.

Scope and business case: Clear description of scope, target impact, and KPIs.

Governance signoff: Documentation of governance board approval and assigned owners.

Explainability artifacts: Example explanations at executive and technical depth, including alternatives and confidence scores.

Provenance and logging: Exportable logs, data lineage records, and model version history for a representative sample.

Metrics and thresholds: Acceptance thresholds for success rate, human override rate, and confidence distribution.

Security and privacy: Data handling policy, masking/tokenization strategy, and retention schedule.

Testing reports: Results from scenario testing, red team findings, and remediation plans.

Rollback and incident plan: Clear steps for pause, rollback, forensic analysis, and communication.

Audit playbook: List of artifacts auditors can request and expected response times.

When these checklist items are complete, package them into a deployment packet and request a governance board review within the quarter. Pair the packet with a live demo that walks through explanation artifacts and a dashboard that highlights key metrics. Live demos convert abstract claims into tangible evidence and make it easier for executives to approve scaled deployment this quarter.

Conclusion

Explainable agentic AI is achievable when teams combine engineering discipline, governance, and clear executive communications. Leaders will approve scaled deployments if they see measurable controls, reproducible audit trails, and concise explanations that map to business impact. The path to approval begins with modular design, standardized explanation contracts, structured logging, and staged rollouts that increase autonomy only as explainability and safety metrics reach agreed thresholds.

Start by aligning the governance board and defining acceptance criteria that map to both business KPIs and explainability metrics. Instrument the system to emit structured explanation payloads, capture provenance, and log alternatives with scores. Implement human-in-the-loop patterns for initial stages and record overrides to refine policies. Build a deployment packet that includes example explanations, exportable logs, testing reports, and a rollback plan. Use automated tests and regular audits to maintain fidelity, and schedule periodic summaries for leadership that focus on trends and actionable remediations.

Operational readiness depends on making explanations meaningful for different audiences. Provide short rationales for executives, detailed traces for engineers, and policy mappings for compliance teams. This multilayer approach lets every stakeholder interrogate and trust the system at the level they need. By adopting these practices, you will reduce approval friction, accelerate pilots into production, and create an auditable, repeatable framework for agentic systems across the organization.

Finally, prioritize communication. A live demo that shows an end-to-end decision, the supporting explanation, and the audit artifacts will often unlock executive approval faster than pages of technical documentation. Be transparent about residual risk and present clear mitigation strategies. When leadership can see that explainable agentic AI is not only performant but also accountable, they are far more likely to approve scaled deployments within the quarter.